AlexNet

(The AlexNet input size should be 227×227×3 rather than 224×224×3 for the layer arithmetic to come out right. The original paper gives different numbers, but Andrej Karpathy, the former head of computer vision at Tesla, has said the input should be 227×227×3, noting that Alex did not explain why he used 224×224×3. The first convolutional layer, with 11×11 kernels and stride 4, then produces a 55×55×96 output instead of 54×54×96. The output width is calculated as [(input width - kernel width) / stride] + 1 = [(227 - 11) / 4] + 1 = 55. Because the input and kernels are square, the output height is also 55, giving a 55×55 feature map.)
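The arithmetic in the note above can be checked with a small helper. This is a minimal sketch: `conv_output_size` is a hypothetical name, and the formula (W - K + 2P) / S + 1, with padding P = 0 for this layer, is the standard output-size formula for a convolution or pooling window, not code from the paper.

```python
def conv_output_size(input_size: int, kernel_size: int, stride: int, padding: int = 0) -> float:
    """Spatial output size of a convolution or pooling window: (W - K + 2P) / S + 1.

    Returns a float so that a non-integer result (a size that does not
    "come out right") stays visible instead of being silently rounded down.
    """
    return (input_size - kernel_size + 2 * padding) / stride + 1


# First AlexNet convolution: 11x11 kernels, stride 4, no padding.
for width in (227, 224):
    out = conv_output_size(width, kernel_size=11, stride=4)
    print(f"input {width}x{width} -> output {out} (integer: {out.is_integer()})")
```

With a 227-pixel input the result is exactly 55. With 224 it is 54.25, so a framework that rounds down would report 54, matching the 54×54×96 figure mentioned above.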

AlexNet is the name of a convolutional neural network (CNN) architecture, designed by Alex Krizhevsky in collaboration with Ilya Sutskever and Geoffrey Hinton, who was Krizhevsky's Ph.D. advisor at the University of Toronto.[1][2]

AlexNet competed in the ImageNet Large Scale Visual Recognition Challenge 2012 (ILSVRC2012).[3]

Historical context

AlexNet was not the first fast GPU implementation of a CNN to win an image recognition contest. A GPU-based CNN by K. Chellapilla et al. (2006) was four times faster than an equivalent CPU implementation.[4]

According to the AlexNet paper,

In 2015, AlexNet was outperformed by a Microsoft Research Asia project with over 100 layers, which won the ImageNet 2015 contest.[15]

Network design

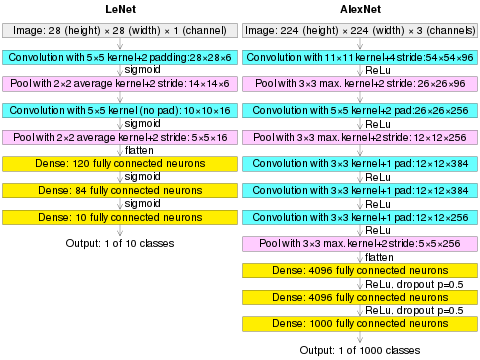

AlexNet contains eight layers: the first five are convolutional layers, some of them followed by max-pooling layers, and the last three are fully connected layers. The network, except the last layer, is split into two copies, each run on one GPU.[2] The entire structure can be written as:

(CNN → RN → MP)² → (CNN³ → MP) → (FC → DO)² → Linear → softmax

where:

- CNN = convolutional layer (with ReLU activation)

- RN = local response normalization

- MP = max pooling

- FC = fully connected layer (with ReLU activation)

- Linear = fully connected layer (without activation)

- DO = dropout
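A minimal sketch that traces feature-map shapes through the structure above, assuming a 227×227×3 input. Kernel counts, kernel sizes, strides, the 3×3 stride-2 overlapping max pooling, and the 4096/4096/1000 fully connected sizes follow the AlexNet paper; the padding values (2 for the 5×5 layer, 1 for the 3×3 layers) are an assumption, chosen so every size is an integer. Channel counts are totals across both GPUs, so the two-GPU split is ignored. `CONV_STAGE`, `FC_STAGE`, and `trace_shapes` are hypothetical names for illustration.

```python
# Hypothetical layer table: (name, kind, out_channels, kernel, stride, padding).
# Sizes and strides follow the AlexNet paper; padding values are an assumption.
# Channel counts combine both GPUs.
CONV_STAGE = [
    ("conv1", "conv", 96, 11, 4, 0),
    ("norm1", "norm", None, 1, 1, 0),   # local response normalization: shape unchanged
    ("pool1", "pool", None, 3, 2, 0),   # overlapping max pooling (3x3 window, stride 2)
    ("conv2", "conv", 256, 5, 1, 2),
    ("norm2", "norm", None, 1, 1, 0),
    ("pool2", "pool", None, 3, 2, 0),
    ("conv3", "conv", 384, 3, 1, 1),
    ("conv4", "conv", 384, 3, 1, 1),
    ("conv5", "conv", 256, 3, 1, 1),
    ("pool5", "pool", None, 3, 2, 0),
]
FC_STAGE = [("fc6", 4096), ("fc7", 4096), ("fc8", 1000)]


def trace_shapes(size=227, channels=3):
    """Return (name, shape) pairs, where shape is (H, W, C) or (units,)."""
    shapes = []
    for name, _kind, out_channels, kernel, stride, padding in CONV_STAGE:
        span = size - kernel + 2 * padding
        if span % stride != 0:
            raise ValueError(f"{name}: input size {size} does not fit stride {stride} evenly")
        size = span // stride + 1
        if out_channels is not None:  # conv layers change depth; norm/pool layers do not
            channels = out_channels
        shapes.append((name, (size, size, channels)))
    # Flatten the final feature map before the fully connected layers.
    shapes.append(("flatten", (size * size * channels,)))
    for name, units in FC_STAGE:
        shapes.append((name, (units,)))
    return shapes


for name, shape in trace_shapes():
    print(f"{name:8s} {shape}")
```

The trace ends in a 6×6×256 feature map (9,216 values) feeding the fully connected layers. Calling `trace_shapes(224)` raises `ValueError` at `conv1`, because 224 - 11 is not divisible by 4, which is the mismatch described in the note at the top of this article.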

It used the non-saturating rectified linear unit (ReLU) activation function, f(x) = max(0, x).
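The legend above marks the convolutional and fully connected layers as using ReLU activation. A small numeric sketch (not from the paper) of what "non-saturating" means: the gradient of tanh, 1 - tanh(x)², shrinks toward zero as inputs grow, while the gradient of ReLU, f(x) = max(0, x), stays at 1 for every positive input. The function names are illustrative.

```python
import math


def relu(x: float) -> float:
    """Rectified linear unit: max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    # Derivative of max(0, x); the value at exactly x == 0 is a convention (0 here).
    return 1.0 if x > 0 else 0.0


def tanh_grad(x: float) -> float:
    # d/dx tanh(x) = 1 - tanh(x)^2, which approaches 0 as |x| grows (saturation).
    return 1.0 - math.tanh(x) ** 2


for x in (0.5, 2.0, 5.0, 10.0):
    print(f"x={x:>4}: relu'={relu_grad(x):.1f}  tanh'={tanh_grad(x):.2e}")
```

Because the ReLU gradient does not vanish for large positive activations, gradient descent keeps making progress on those units, which is the usual explanation for why ReLU networks train faster than tanh networks.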

Influence

AlexNet is considered one of the most influential papers published in computer vision, having spurred many subsequent papers that use CNNs and GPUs to accelerate deep learning.[16] As of early 2023, the AlexNet paper has been cited over 120,000 times according to Google Scholar.[17]

References

- ^ Gershgorn, Dave (26 July 2017). "The data that transformed AI research—and possibly the world". Quartz.

- ^ S2CID 195908774.

- ^ "ImageNet Large Scale Visual Recognition Competition 2012 (ILSVRC2012)". image-net.org.

- ^ Kumar Chellapilla; Sidd Puri; Patrice Simard (2006). "High Performance Convolutional Neural Networks for Document Processing". In Lorette, Guy (ed.). Tenth International Workshop on Frontiers in Handwriting Recognition. Suvisoft.

- ^ Cireșan, Dan; Ueli Meier; Jonathan Masci; Luca M. Gambardella; Jürgen Schmidhuber (2011). "Flexible, High Performance Convolutional Neural Networks for Image Classification" (PDF). Proceedings of the Twenty-Second International Joint Conference on Artificial Intelligence-Volume Volume Two. 2: 1237–1242. Retrieved 17 November 2013.

- ^ "IJCNN 2011 Competition result table". OFFICIAL IJCNN2011 COMPETITION. 2010. Retrieved 2019-01-14.

- ^ Schmidhuber, Jürgen (17 March 2017). "History of computer vision contests won by deep CNNs on GPU". Retrieved 14 January 2019.

- ^ S2CID 2309950.

- ^ S2CID 2161592.

- ^ OCLC 364746139.

- ^ S2CID 14542261. Retrieved 7 October 2016.

- .

- ^ S2CID 206775608. Retrieved 16 November 2013.

- ^ Weng, J; Ahuja, N; Huang, TS (1993). "Learning recognition and segmentation of 3-D objects from 2-D images". Proc. 4th International Conf. Computer Vision: 121–128.

- ^ S2CID 206594692.

- ^ Deshpande, Adit. "The 9 Deep Learning Papers You Need To Know About (Understanding CNNs Part 3)". adeshpande3.github.io. Retrieved 2018-12-04.

- ^ AlexNet paper on Google Scholar