F-score

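In statistical analysis of binary classification and information retrieval systems, the F-score or F-measure is a measure of predictive performance. It is calculated from the precision and recall of the test, where precision is the number of true positive results divided by the number of all samples predicted to be positive, and recall is the number of true positive results divided by the number of all samples that should have been identified as positive.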

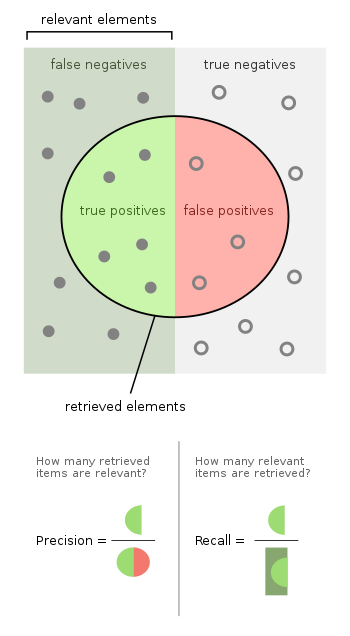

The F1 score is the harmonic mean of the precision and recall. It thus symmetrically represents both precision and recall in one metric. The more generic Fβ score applies additional weights, valuing one of precision or recall more than the other.

The highest possible value of an F-score is 1.0, indicating perfect precision and recall, and the lowest possible value is 0, which occurs when either precision or recall is zero.

Etymology

The name F-measure is believed to derive from a different F function in Van Rijsbergen's book, and to have been adopted when the measure was introduced to the Fourth Message Understanding Conference (MUC-4, 1992).[1]

Definition


The traditional F-measure or balanced F-score (F1 score) is the harmonic mean of precision and recall:[2]

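$$F_1 = \frac{2}{\mathrm{recall}^{-1} + \mathrm{precision}^{-1}} = 2 \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} = \frac{2\,\mathrm{TP}}{2\,\mathrm{TP} + \mathrm{FP} + \mathrm{FN}}$$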
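As a minimal sketch, the balanced F-score can be computed either from precision and recall or directly from confusion-matrix counts. The function names and the example counts below are illustrative assumptions, not part of any particular library, and returning 0.0 for the undefined 0/0 case is a convention chosen here.

```python
def f1_from_precision_recall(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """Equivalent form using true positives, false positives, false negatives."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts: 8 true positives, 2 false positives, 4 false negatives.
tp, fp, fn = 8, 2, 4
precision = tp / (tp + fp)  # 0.8
recall = tp / (tp + fn)     # 8/12

f1_a = f1_from_precision_recall(precision, recall)
f1_b = f1_from_counts(tp, fp, fn)
print(round(f1_a, 4), round(f1_b, 4))  # both forms agree: 0.7273 0.7273
```

Both forms give 16/22 for these counts, which lies between the recall (about 0.667) and the precision (0.8) but closer to the smaller value, as expected of a harmonic mean.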

Fβ score

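A more general F score, Fβ, that uses a positive real factor β, where β is chosen such that recall is considered β times as important as precision, is:

$$F_\beta = (1 + \beta^2) \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{(\beta^2 \cdot \mathrm{precision}) + \mathrm{recall}}$$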

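In terms of type I and type II errors this becomes:

$$F_\beta = \frac{(1 + \beta^2) \cdot \mathrm{TP}}{(1 + \beta^2) \cdot \mathrm{TP} + \beta^2 \cdot \mathrm{FN} + \mathrm{FP}}$$

Two commonly used values for β are 2, which weighs recall higher than precision, and 0.5, which weighs recall lower than precision.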
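The effect of the weighting factor can be illustrated with a short sketch that evaluates the count-based form of the general F-score for β = 0.5, 1 and 2. The function name and the example counts are assumptions made for illustration.

```python
def fbeta_from_counts(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """General F-score from counts; beta > 1 favours recall, beta < 1 favours precision."""
    b2 = beta * beta
    denom = (1 + b2) * tp + b2 * fn + fp
    return (1 + b2) * tp / denom if denom else 0.0


# Hypothetical classifier with precision 0.8 and recall 8/12 (about 0.667).
tp, fp, fn = 8, 2, 4

f_half = fbeta_from_counts(tp, fp, fn, beta=0.5)  # 10/13
f_one = fbeta_from_counts(tp, fp, fn, beta=1.0)   # 16/22
f_two = fbeta_from_counts(tp, fp, fn, beta=2.0)   # 40/58

for name, value in [("F0.5", f_half), ("F1", f_one), ("F2", f_two)]:
    print(name, round(value, 4))
```

Because precision exceeds recall in this example, the score that emphasises precision (β = 0.5) is the largest and the score that emphasises recall (β = 2) is the smallest.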

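The F-measure was derived so that "Fβ measures the effectiveness of retrieval with respect to a user who attaches β times as much importance to recall as precision".[3] It is based on Van Rijsbergen's effectiveness measure

$$E = 1 - \left(\frac{\alpha}{p} + \frac{1 - \alpha}{r}\right)^{-1},$$

where p denotes precision and r denotes recall.

Their relationship is Fβ = 1 − E where α = 1/(1 + β²).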
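The relationship between the two measures can be checked numerically. The sketch below assumes the standard form of Van Rijsbergen's measure, E = 1 − 1/(α/p + (1 − α)/r) with p the precision and r the recall, and sets α = 1/(1 + β²); the function names are illustrative.

```python
import math


def effectiveness_e(p: float, r: float, alpha: float) -> float:
    """Van Rijsbergen's E measure (assumes p > 0 and r > 0)."""
    return 1 - 1 / (alpha / p + (1 - alpha) / r)


def fbeta(p: float, r: float, beta: float) -> float:
    """General F-score from precision p and recall r."""
    b2 = beta * beta
    return (1 + b2) * p * r / (b2 * p + r)


p, r = 0.8, 0.5  # hypothetical precision and recall
for beta in (0.5, 1.0, 2.0):
    alpha = 1 / (1 + beta * beta)
    # F_beta and 1 - E should coincide for every beta.
    assert math.isclose(fbeta(p, r, beta), 1 - effectiveness_e(p, r, alpha))
```

Substituting α = 1/(1 + β²) into 1 − E gives (1 + β²)/(1/p + β²/r), which simplifies to the precision and recall form of the general F-score.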

Diagnostic testing

This is related to the field of binary classification where recall is often termed "sensitivity".

Rows give the actual condition and columns the predicted condition. Sources: [4][5][6][7][8][9][10][11][12]

| Total population = P + N | Predicted positive (PP) | Predicted negative (PN) | Informedness, bookmaker informedness (BM) = TPR + TNR − 1 | Prevalence threshold (PT) = (√(TPR × FPR) − FPR) / (TPR − FPR) |
|---|---|---|---|---|
| Actual positive (P) [a] | True positive (TP) [b] | False negative (FN), miss, underestimation | True positive rate (TPR), recall, sensitivity, power = TP/P = 1 − FNR | False negative rate (FNR), type II error [c] = FN/P = 1 − TPR |
| Actual negative (N) [d] | False positive (FP), false alarm, overestimation | True negative (TN) [e] | False positive rate (FPR), type I error [f] = FP/N = 1 − TNR | True negative rate (TNR), specificity (SPC), selectivity = TN/N = 1 − FPR |
| Prevalence = P / (P + N) | Positive predictive value (PPV), precision = TP/PP = 1 − FDR | False omission rate (FOR) = FN/PN = 1 − NPV | Positive likelihood ratio (LR+) = TPR/FPR | Negative likelihood ratio (LR−) = FNR/TNR |
| Accuracy (ACC) = (TP + TN) / (P + N) | False discovery rate (FDR) = FP/PP = 1 − PPV | Negative predictive value (NPV) = TN/PN = 1 − FOR | Markedness (MK), deltaP (Δp) = PPV + NPV − 1 | Diagnostic odds ratio (DOR) = LR+/LR− |
| Balanced accuracy (BA) = (TPR + TNR) / 2 | F1 score = 2 PPV × TPR / (PPV + TPR) = 2 TP / (2 TP + FP + FN) | Fowlkes–Mallows index (FM) = √(PPV × TPR) | Matthews correlation coefficient (MCC) = √(TPR × TNR × PPV × NPV) − √(FNR × FPR × FOR × FDR) | Threat score (TS), critical success index (CSI), Jaccard index = TP / (TP + FN + FP) |

- [a] The number of real positive cases in the data

- [b] A test result that correctly indicates the presence of a condition or characteristic

- [c] Type II error: a test result which wrongly indicates that a particular condition or attribute is absent

- [d] The number of real negative cases in the data

- [e] A test result that correctly indicates the absence of a condition or characteristic

- [f] Type I error: a test result which wrongly indicates that a particular condition or attribute is present
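Several of the quantities in the confusion-matrix table can be derived from the four cell counts. The following is a minimal sketch under the assumption that all denominators are non-zero; the function name, the dictionary keys and the example counts are illustrative.

```python
from math import sqrt


def confusion_metrics(tp: int, fn: int, fp: int, tn: int) -> dict:
    """Derive selected rates from confusion-matrix counts (no zero-division guards)."""
    p, n = tp + fn, fp + tn        # actual positives and negatives
    pp, pn = tp + fp, fn + tn      # predicted positives and negatives
    tpr, tnr = tp / p, tn / n      # recall (sensitivity) and specificity
    ppv, npv = tp / pp, tn / pn    # precision and negative predictive value
    fnr, fpr = 1 - tpr, 1 - tnr
    fdr, f_or = 1 - ppv, 1 - npv   # false discovery and false omission rates
    return {
        "TPR": tpr, "TNR": tnr, "PPV": ppv, "NPV": npv,
        "ACC": (tp + tn) / (p + n),
        "BA": (tpr + tnr) / 2,
        "F1": 2 * ppv * tpr / (ppv + tpr),
        "FM": sqrt(ppv * tpr),
        "MCC": sqrt(tpr * tnr * ppv * npv) - sqrt(fnr * fpr * f_or * fdr),
        "TS": tp / (tp + fn + fp),
    }


# Hypothetical test on 100 cases, 12 of which are actually positive.
metrics = confusion_metrics(tp=8, fn=4, fp=2, tn=86)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this example the accuracy is 0.94 while the F1 score is 16/22 (about 0.727), since F1 does not use the 86 true negatives.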

Dependence of the F-score on class imbalance

The precision-recall curve, and thus the Fβ score, explicitly depends on the ratio of positive to negative test cases.[13] This means that comparing F-scores across problems with differing class ratios is problematic. One way to address this issue (see, e.g., Siblini et al., 2020[14]) is to use a standard class ratio when making such comparisons.
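The dependence on the class ratio can be made concrete by holding a classifier's true positive rate and false positive rate fixed and varying only the ratio of positive to negative cases. This is a sketch under that assumption; the function name and the chosen rates are illustrative.

```python
def f1_at_class_ratio(tpr: float, fpr: float, ratio: float) -> float:
    """F1 for fixed TPR and FPR, where ratio = positives / negatives.

    Counts are expressed per negative case: TP = tpr * ratio,
    FN = (1 - tpr) * ratio and FP = fpr.
    """
    tp = tpr * ratio
    fn = (1 - tpr) * ratio
    fp = fpr
    return 2 * tp / (2 * tp + fp + fn)


# Same hypothetical classifier (TPR 0.9, FPR 0.1) on differently balanced data.
for ratio in (1.0, 0.1, 0.01):
    print(ratio, round(f1_at_class_ratio(0.9, 0.1, ratio), 4))
```

With balanced classes the score is 0.9, but it falls to roughly 0.15 when there is one positive per hundred negatives, even though the classifier itself is unchanged.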

Applications

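The F-score is often used in the field of information retrieval for measuring search, document classification, and query classification performance.

Earlier works focused primarily on the F1 score, but with the proliferation of large scale search engines, performance goals changed to place more emphasis on either precision or recall[16] and so Fβ is seen in wide application.

The F-score is also used in machine learning.

The F-score has been widely used in the natural language processing literature, such as in the evaluation of named entity recognition and word segmentation.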

Properties

The F1 score is the Dice coefficient of the set of retrieved items and the set of relevant items.

- The F1-score of a classifier which always predicts the positive class converges to 1 as the probability of the positive class increases.

- The F1-score of a classifier which always predicts the positive class is equal to 2 * proportion_of_positive_class / ( 1 + proportion_of_positive_class ), since the recall is 1, and the precision is equal to the proportion of the positive class.[21]

- If the scoring model is uninformative (cannot distinguish between the positive and negative class) then the optimal threshold is 0 so that the positive class is always predicted.

- F1 score is concave in the true positive rate.[22]
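The baseline for a classifier that always predicts the positive class can be verified with a short sketch. The function names and the dataset sizes are assumptions for illustration.

```python
def f1_always_positive(positive_fraction: float) -> float:
    """Closed form 2p / (1 + p) for a classifier that always predicts positive."""
    return 2 * positive_fraction / (1 + positive_fraction)


def f1_from_counts(tp: int, fp: int, fn: int) -> float:
    """F1 from confusion-matrix counts."""
    return 2 * tp / (2 * tp + fp + fn)


# Hypothetical dataset: 30 positives and 70 negatives, all predicted positive,
# so TP = 30, FP = 70, FN = 0 (recall 1, precision 0.3).
n_pos, n_neg = 30, 70
closed_form = f1_always_positive(n_pos / (n_pos + n_neg))
from_counts = f1_from_counts(tp=n_pos, fp=n_neg, fn=0)
print(round(closed_form, 4), round(from_counts, 4))  # 0.4615 0.4615

# The baseline approaches 1 as the positive class becomes more prevalent.
for p in (0.1, 0.5, 0.9, 0.99):
    print(p, round(f1_always_positive(p), 4))
```

This baseline is worth keeping in mind when reading F1 values on data where the positive class is common, because a trivial classifier already scores highly there.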

Criticism

David Hand and others criticize the widespread use of the F1 score since it gives equal importance to precision and recall. In practice, different types of misclassifications incur different costs. In other words, the relative importance of precision and recall is an aspect of the problem.[23]

According to Davide Chicco and Giuseppe Jurman, the F1 score is less truthful and informative than the Matthews correlation coefficient (MCC) in binary classification evaluation.

Another source of critique of F1 is its lack of symmetry: its value may change when the dataset labeling is swapped, that is, when the "positive" samples are renamed "negative" and vice versa. This criticism is met by the P4 metric definition, which is sometimes described as a symmetrical extension of F1.[26]
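The lack of symmetry can be seen by swapping the roles of the two classes in a confusion matrix. The sketch below assumes that P4 is defined as the harmonic mean of precision, recall, specificity and negative predictive value, which is not stated in the text above; the function names and counts are illustrative.

```python
def f1(tp: int, fp: int, fn: int) -> float:
    """F1 from confusion-matrix counts (true negatives are not used)."""
    return 2 * tp / (2 * tp + fp + fn)


def p4(tp: int, fp: int, fn: int, tn: int) -> float:
    """P4, assumed here to be the harmonic mean of precision, recall,
    specificity and NPV, written in terms of counts."""
    return 4 * tp * tn / (4 * tp * tn + (tp + tn) * (fp + fn))


tp, fn, fp, tn = 8, 4, 2, 86  # hypothetical counts

# Renaming the classes swaps TP with TN and FP with FN.
f1_original = f1(tp=tp, fp=fp, fn=fn)
f1_swapped = f1(tp=tn, fp=fn, fn=fp)
p4_original = p4(tp=tp, fp=fp, fn=fn, tn=tn)
p4_swapped = p4(tp=tn, fp=fn, fn=fp, tn=tp)

print(round(f1_original, 4), round(f1_swapped, 4))  # 0.7273 0.9663
print(round(p4_original, 4), round(p4_swapped, 4))  # 0.8299 0.8299
```

F1 changes from about 0.73 to about 0.97 under the relabeling, while the P4 value under the assumed definition is unchanged.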

Difference from Fowlkes–Mallows index

While the F-measure is the harmonic mean of recall and precision, the Fowlkes–Mallows index is their geometric mean.[27]
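A brief sketch comparing the two means for the same precision and recall; the function names and input values are illustrative assumptions.

```python
from math import sqrt


def f_measure(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def fowlkes_mallows(precision: float, recall: float) -> float:
    """Geometric mean of precision and recall."""
    return sqrt(precision * recall)


for precision, recall in [(0.8, 0.5), (0.9, 0.1), (0.7, 0.7)]:
    f = f_measure(precision, recall)
    fm = fowlkes_mallows(precision, recall)
    print(precision, recall, round(f, 4), round(fm, 4))
```

Since the harmonic mean of two positive numbers never exceeds their geometric mean, the F-measure is at most the Fowlkes–Mallows index, with equality when precision equals recall; the gap widens as the two values diverge.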

Extension to multi-class classification

The F-score is also used for evaluating classification problems with more than two classes (multiclass classification). In this setup, the final score is obtained by micro-averaging (biased by class frequency) or macro-averaging (taking all classes as equally important). For macro-averaging, two different formulas have been used in practice: the F-score of the (arithmetic) class-wise precision and recall means, or the arithmetic mean of class-wise F-scores, where the latter exhibits more desirable properties.[28]
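The averaging schemes can be compared on a small example. The sketch below assumes per-class counts of true positives, false positives and false negatives for a hypothetical three-class, single-label problem; the class names, counts and variable names are illustrative.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple:
    """Class-wise precision, recall and F1 (0.0 where undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical per-class counts as (TP, FP, FN); class B is rare and poorly recognised.
counts = {"A": (50, 10, 5), "B": (5, 5, 15), "C": (20, 10, 5)}

# Micro-averaging: pool the counts over all classes, then compute one F1.
tp_sum = sum(tp for tp, _, _ in counts.values())
fp_sum = sum(fp for _, fp, _ in counts.values())
fn_sum = sum(fn for _, _, fn in counts.values())
micro_f1 = 2 * tp_sum / (2 * tp_sum + fp_sum + fn_sum)

per_class = [precision_recall_f1(*c) for c in counts.values()]
k = len(per_class)

# Macro-averaging, variant 1: arithmetic mean of the class-wise F1 scores.
macro_mean_of_f1 = sum(f1 for _, _, f1 in per_class) / k

# Macro-averaging, variant 2: F1 of the mean precision and the mean recall.
mean_precision = sum(p for p, _, _ in per_class) / k
mean_recall = sum(r for _, r, _ in per_class) / k
macro_f1_of_means = 2 * mean_precision * mean_recall / (mean_precision + mean_recall)

print(round(micro_f1, 4), round(macro_mean_of_f1, 4), round(macro_f1_of_means, 4))
```

For these counts the micro-averaged score (0.75) is dominated by the frequent class A, while both macro variants are lower because the rare class B contributes equally; the two macro formulas also give different values.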

See also

- BLEU

- Confusion matrix

- Hypothesis tests for accuracy

- METEOR

- NIST (metric)

- Receiver operating characteristic

- ROUGE (metric)

- Uncertainty coefficient, aka Proficiency

- Word error rate

- LEPOR

References

- ^ Sasaki, Y. (2007). "The Truth of the F-measure". https://nicolasshu.com/assets/pdf/Sasaki_2007_The%20Truth%20of%20the%20F-measure.pdf.

- PMID 26263899.

- ^ Van Rijsbergen, C. J. (1979). Information Retrieval (2nd ed.). Butterworth-Heinemann.

- ^ Balayla, Jacques (2020). "Prevalence threshold (ϕe) and the geometry of screening curves". PLOS ONE. 15 (10): e0240215. PMID 33027310.

- ^ Fawcett, Tom (2006). "An Introduction to ROC Analysis" (PDF). Pattern Recognition Letters. 27 (8): 861–874. S2CID 2027090.

- ^ Piryonesi, S. Madeh; El-Diraby, Tamer E. (2020-03-01). "Data Analytics in Asset Management: Cost-Effective Prediction of the Pavement Condition Index". Journal of Infrastructure Systems. 26 (1): 04019036. S2CID 213782055.

- ^ Powers, David M. W. (2011). "Evaluation: From Precision, Recall and F-Measure to ROC, Informedness, Markedness & Correlation". Journal of Machine Learning Technologies. 2 (1): 37–63.

- ^ Ting, Kai Ming (2011). Sammut, Claude; Webb, Geoffrey I. (eds.). Encyclopedia of machine learning. Springer. ISBN 978-0-387-30164-8.

- ^ Brooks, Harold; Brown, Barb; Ebert, Beth; Ferro, Chris; Jolliffe, Ian; Koh, Tieh-Yong; Roebber, Paul; Stephenson, David (2015-01-26). "WWRP/WGNE Joint Working Group on Forecast Verification Research". Collaboration for Australian Weather and Climate Research. World Meteorological Organisation. Retrieved 2019-07-17.

- ^ Chicco D, Jurman G (January 2020). "The advantages of the Matthews correlation coefficient (MCC) over F1 score and accuracy in binary classification evaluation". BMC Genomics. 21 (1): 6-1–6-13. PMID 31898477.

- ^ Chicco D, Toetsch N, Jurman G (February 2021). "The Matthews correlation coefficient (MCC) is more reliable than balanced accuracy, bookmaker informedness, and markedness in two-class confusion matrix evaluation". BioData Mining. 14 (13): 13. PMID 33541410.

- ^ Tharwat A. (August 2018). "Classification assessment methods". Applied Computing and Informatics. 17: 168–192.

- ^ Brabec, Jan; Komárek, Tomáš; Franc, Vojtěch; Machlica, Lukáš (2020). "On model evaluation under non-constant class imbalance". International Conference on Computational Science. Springer. pp. 74–87.

- ^ Siblini, W.; Fréry, J.; He-Guelton, L.; Oblé, F.; Wang, Y. Q. (2020). "Master your metrics with calibration". In M. Berthold; A. Feelders; G. Krempl (eds.). Advances in Intelligent Data Analysis XVIII. Springer. pp. 457–469.

- CiteSeerX 10.1.1.127.634.

- S2CID 8482989.

- ^ See, e.g., the evaluation of the [1].

- arXiv:1503.06410 [cs.IR].

- ^ Derczynski, L. (2016). Complementarity, F-score, and NLP Evaluation. Proceedings of the International Conference on Language Resources and Evaluation.

- ^ Manning, Christopher (April 1, 2009). An Introduction to Information Retrieval (PDF). Cambridge University Press. Exercise 8.7, p. 200. Retrieved 18 July 2022.

- ^ "What is the baseline of the F1 score for a binary classifier?".

- ^ Lipton, Z.C., Elkan, C.P., & Narayanaswamy, B. (2014). F1-Optimal Thresholding in the Multi-Label Setting. ArXiv, abs/1402.1892.

- S2CID 38782128. Retrieved 2018-12-08.

- PMID 31898477.

- hdl:2328/27165.

- arXiv:2210.11997 [cs.LG].

- ^ Tharwat A. (August 2018). "Classification assessment methods". Applied Computing and Informatics. 17: 168–192.

- arXiv:1911.03347 [stat.ML].