Frequency modulation encoding

Frequency modulation encoding, or simply FM, is a method of storing data that saw widespread use in early floppy disk drives and hard disk drives. When data is written, it is modified using differential Manchester encoding, which adds clock transitions so that the clock can be recovered on reading despite the timing variations, known as "jitter", seen on disk media. It was introduced on IBM mainframe drives and was almost universal among early minicomputer and microcomputer floppies. In the case of floppies, FM encoding allowed about 80 kB of data to be stored on a 5¼-inch disk.

IBM began introducing the more efficient modified frequency modulation, or MFM, in 1970. IBM referred to this format as "double density", with the original FM retroactively becoming "single density". MFM was more difficult to implement, and it was not until the early 1980s that low-cost, all-in-one MFM floppy drive controllers like the WD1770 emerged. This led to the rapid demise of FM encoding in favor of MFM by the mid-1980s.

Underlying storage mechanism

In contrast,

In addition to the data being stored in patterns that require on-the-fly conversion to and from their internal format, the disk faces additional problems associated with being an analog system: noise, mechanical effects and other issues. In particular, disks suffer from an effect known as jitter, caused by small changes in timing as the media speeds up and slows down during rotation. One unavoidable form of jitter is due to the hysteresis of the magnetic media, which can lead to an effect known as bit shift that causes strings of magnetic transitions to be stretched out in time. These effects make it difficult to know which bit a particular transition belongs to.[3]

To address this problem, disks use some form of clock recovery based on additional signals written to the disk. When the data is read, the clock signal is separated out, leaving the data bits clearly visible in the signal so that they can be lined up cleanly into the appropriate slots in memory.[3]
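The idea of clock recovery can be illustrated with a toy software loop that assigns each flux-transition timestamp to a whole-numbered clock window while slowly adjusting its estimate of the window length, so that gradual speed drift is followed. This is only a sketch: real data separators use analog or digital phase-locked loops, and the function name `assign_cells`, the `gain` parameter and the arbitrary timing units are assumptions made for illustration.

```python
def assign_cells(transition_times: list[float], nominal_period: float,
                 gain: float = 0.1) -> list[int]:
    """Map flux-transition timestamps to integer cell (window) indices.

    Hypothetical toy tracking loop, not a real data separator: the cell
    period estimate is nudged toward the observed transition spacing so
    that slow changes in rotation speed are followed.
    """
    period = nominal_period
    ref_time = transition_times[0]  # the first transition defines cell 0
    ref_cell = 0
    cells = [0]
    for t in transition_times[1:]:
        elapsed = t - ref_time
        # Nearest whole number of cells since the previous transition.
        n = max(1, round(elapsed / period))
        # Timing error: how far the transition landed from where expected.
        error = elapsed - n * period
        # Move the period estimate by a fraction of the per-cell error.
        period += gain * error / n
        ref_cell += n
        ref_time = t
        cells.append(ref_cell)
    return cells


# Transitions from a disk spinning 5% slower than nominal (period 2.1
# instead of 2.0), with transitions in cells 0, 1, 2, 4, 5, 7, 8 and 10.
true_cells = [0, 1, 2, 4, 5, 7, 8, 10]
times = [c * 2.1 for c in true_cells]
print(assign_cells(times, nominal_period=2.0))  # [0, 1, 2, 4, 5, 7, 8, 10]
```

Because each transition re-anchors the reference time, timing errors do not accumulate from one transition to the next; the period estimate only has to be close enough that the rounding step picks the right number of windows across the longest gap between transitions, which FM keeps short by writing a clock transition in every window.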

Encoding

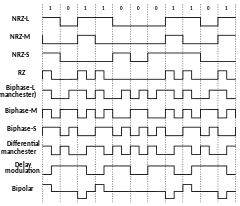

FM encoding uses a simple system to encode the original data in such a way that every bit of data contains at least one transition, ensuring there are enough transitions during any given period for successful clock recovery. To do this, it uses a bit period twice as long as the shortest interval between transitions that the recording media supports. Each of these bit periods is known as a "clock window" and holds up to one clock transition and one data transition. Since each bit of data therefore occupies two of these minimum intervals, FM encoding stores about half the amount that is theoretically possible on that media.[3]
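The FM rule can be written as a short encoder and decoder. This is a minimal sketch assuming the recording is represented as a list of cells in which 1 marks a flux transition, with each data bit stored as a clock cell (always 1) followed by a data cell (1 only for a 1 bit), most significant bit first; the function names `fm_encode` and `fm_decode` are hypothetical.

```python
def fm_encode(data: bytes) -> list[int]:
    """Encode bytes as an FM cell stream (1 = flux transition).

    Each data bit becomes two cells: a clock cell that always holds a
    transition, followed by a data cell that holds a transition only for a 1.
    Bits are taken most significant first.
    """
    cells = []
    for byte in data:
        for shift in range(7, -1, -1):
            bit = (byte >> shift) & 1
            cells.extend((1, bit))
    return cells


def fm_decode(cells: list[int]) -> bytes:
    """Recover bytes from an FM cell stream that starts on a clock cell."""
    if len(cells) % 16:
        raise ValueError("cell stream must hold a whole number of bytes")
    out = bytearray()
    for start in range(0, len(cells), 16):
        byte = 0
        for i in range(start, start + 16, 2):
            # Every even cell should be a clock transition; a missing one
            # means the stream is corrupt or misaligned.
            if cells[i] != 1:
                raise ValueError(f"missing clock transition at cell {i}")
            byte = (byte << 1) | cells[i + 1]  # odd cell carries the data bit
        out.append(byte)
    return bytes(out)


print(fm_encode(b"\xa5"))  # [1, 1, 1, 0, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 1, 1]
print(fm_decode(fm_encode(b"FM")))  # b'FM'
```

An all-zero byte still produces eight transitions (one per clock cell), while 0xFF produces sixteen, so two transitions can be as close as one cell, half a bit period, apart. That is why FM needs two minimum intervals per bit.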

FM uses an implementation of the

Encoding these transitions requires the system to accept digital data from the host computer and then re-code it into the underlying FM format. On reading, the system has to separate out the clock signal again and leave only the data bits. Because the FM system is so simple, it could be implemented in single-chip forms using late 1970s

Data encoding vs format

The material above refers to bytes being written to disk, but this is a simplification. In most disks, the only unit of data is the sector, and the individual bytes within it have no meaning to the controller. When data is written, the controller is handed a full sector's worth of data and told to write it out as a continuous series of bits in a single atomic operation. The controller cannot align the bits with the bytes based solely on the FM information. Thus it is not only the bits within the data that have to be aligned on reading, but also the starting point of the sector's data as a whole.[3]
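One way to picture the alignment step is a search through the raw cell stream for a marker that ordinary FM data can never produce, followed by decoding bytes from the cells that come after it. The sketch below assumes a cell stream in which 1 marks a flux transition and each bit occupies a clock cell followed by a data cell, and it uses a marker modeled on the FE data/C7 clock address mark commonly documented for IBM-style FM formats. The marker choice and the names `interleave` and `read_after_mark` are illustrative assumptions, not a description of any particular controller.

```python
from typing import Optional


def interleave(clock: int, data: int) -> list[int]:
    """Build 16 cells from separate 8-bit clock and data patterns."""
    cells = []
    for shift in range(7, -1, -1):
        cells.append((clock >> shift) & 1)
        cells.append((data >> shift) & 1)
    return cells


# Hypothetical marker: data 0xFE with clock 0xC7, i.e. three clock
# transitions missing. Normal FM data has a 1 in every clock cell, so this
# pattern cannot occur inside it at either cell alignment.
MARK = interleave(0xC7, 0xFE)


def read_after_mark(cells: list[int], count: int) -> Optional[bytes]:
    """Find MARK in a raw cell stream and decode the `count` bytes after it."""
    n = len(MARK)
    for start in range(len(cells) - n + 1):
        if cells[start:start + n] != MARK:
            continue
        body = cells[start + n:start + n + 16 * count]
        if len(body) < 16 * count:
            return None  # stream ends before the requested bytes
        out = bytearray()
        for i in range(0, len(body), 16):
            byte = 0
            for j in range(i + 1, i + 16, 2):  # odd cells carry the data bits
                byte = (byte << 1) | body[j]
            out.append(byte)
        return bytes(out)
    return None  # no marker found


# Three stray cells put the stream out of step with byte boundaries; a gap
# byte (data 0x00, full clock) precedes the marker and two data bytes.
stream = ([0, 1, 0] + interleave(0xFF, 0x00) + MARK
          + interleave(0xFF, 0x12) + interleave(0xFF, 0x34))
print(read_after_mark(stream, 2).hex())  # 1234
```

Once the marker is found, its end fixes both the cell phase (which cells are clock and which are data) and the byte boundary, which is exactly the information the FM encoding alone cannot supply.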

This is not accomplished with the encoding scheme, but the

A1's in front of the header and data areas. These are not FM encoded, so the controller can easily identify them on the fly. The controller locks onto these signals to find the start of data, which immediately follows the last sync byte. After that, it reads each subsequent group of eight bits into the next byte of the buffer.[3]

Replacement with MFM

As each bit of data requires two transition periods in the FM system, it makes use of only half the potential storage capacity of the disk. This led to a series of more advanced encodings that make better use of the available space. The most widely used replacement was modified frequency modulation, or MFM. This system writes at most one transition per bit window, dropping most of the separate clock transitions, so the clock has to be recovered largely from the data transitions themselves. A 1 is encoded as a transition in the center of the window; a 0 produces a transition at the boundary between windows only when it follows another 0, and no transition otherwise.[3]
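The density gain can be checked by comparing the closest spacing between transitions in the two schemes. This sketch assumes the standard MFM rule (a data cell holds a transition for a 1, and the clock cell before it holds one only when both that bit and the previous bit are 0) and, as a further assumption, treats the bit before the start of the stream as 0; the function names `fm_cells`, `mfm_cells` and `min_spacing` are hypothetical.

```python
def fm_cells(bits: list[int]) -> list[int]:
    """FM: a clock cell that always holds a transition, then the data cell."""
    cells = []
    for bit in bits:
        cells.extend((1, bit))
    return cells


def mfm_cells(bits: list[int]) -> list[int]:
    """MFM: the data cell holds the bit; the clock cell holds a transition
    only when this bit and the previous bit are both 0."""
    cells = []
    prev = 0  # assumption: the bit before the stream is taken to be 0
    for bit in bits:
        clock = 1 if prev == 0 and bit == 0 else 0
        cells.extend((clock, bit))
        prev = bit
    return cells


def min_spacing(cells: list[int]) -> int:
    """Smallest distance, in cells, between two consecutive transitions.

    Requires at least two transitions in `cells`.
    """
    positions = [i for i, c in enumerate(cells) if c]
    return min(b - a for a, b in zip(positions, positions[1:]))


bits = [1, 0, 0, 1, 1]
print(mfm_cells(bits))               # [0, 1, 0, 0, 1, 0, 0, 1, 0, 1]
print(min_spacing(fm_cells(bits)))   # 1
print(min_spacing(mfm_cells(bits)))  # 2
```

Because MFM never places transitions in adjacent cells, each cell can be made half as long as in FM while keeping the same minimum spacing on the media, which is where the doubling of capacity comes from.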

MFM requires a more complex solution to recovering the clock signal. Generally this takes the form of a

References

Citations

- ^ St. Michael, Stephen (1 August 2019). "Introduction to DRAM". All About Circuits.

- ^ Lutz, Melloni & Wakeman 1995, p. 1.

- ^ a b c d e f g h i Lutz, Melloni & Wakeman 1995, p. 2.


Bibliography

- Lutz, Bob; Melloni, Paolo; Wakeman, Larry (1995). TL/F/9419 Floppy Disk Data Separator Design Guide for the DP8473 (PDF) (Technical report). National Semiconductor.

- doi:10.1109/5.63306.