Texture mapping

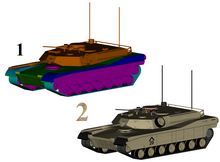

2: Same model with textures

Texture mapping[1][2][3] is a method for applying a texture to a computer-generated graphic. Texture here can mean high-frequency detail, surface texture, or color.

History

The original technique was pioneered by Edwin Catmull in 1974 as part of his doctoral thesis.[4]

By 1983, work by Johnson Yan, Nicholas Szabo, and Lish-Yaan Chen in their invention "Method and Apparatus for Texture Generation" provided for texture generation in real time, where texture could be generated and superimposed on surfaces (curvilinear and planar) of any orientation. Texture patterns could be modeled to suggest the real-world material they were intended to represent, in a continuous way and free of aliasing, ultimately providing level of detail and gradual (imperceptible) detail-level transitions. Texture generation became repeatable and coherent from frame to frame and remained in correct perspective and appropriate occultation.[5] Because real-time texturing was applied to early three-dimensional flight simulator CGI systems, and texture was often the first prerequisite for realistic-looking graphics, many of these techniques were later widely used in graphics computing, gaming, and other applications for years to follow.

Also in 1983, in the paper "Pyramidal Parametrics", Lance Williams, another graphics pioneer, introduced the concept of mapping images onto surfaces to increase the realism of such images.[6]

Texture mapping originally referred to diffuse mapping, a method that simply mapped

and lighting calculations needed to construct a realistic and functional 3D scene.

1: Untextured sphere, 2: Texture and bump maps, 3: Texture map only, 4: Opacity and texture maps

Texture maps

A texture map

They may have 1-3 dimensions, although 2 dimensions are most common for visible surfaces. For use with modern hardware, texture map data may be stored in

They usually contain

Multiple texture maps (or

Multiple texture images may be combined in

Creation

Texture maps may be acquired by

Texture application

This process is akin to applying patterned paper to a plain white box. Every vertex in a polygon is assigned a

Texture space

Texture mapping maps the model surface (or

Multitexturing

Multitexturing is the use of more than one texture at a time on a polygon.

Texture filtering

The way that samples (e.g. when viewed as pixels on the screen) are calculated from the texels (texture pixels) is governed by texture filtering. The cheapest method is nearest-neighbour interpolation, but bilinear interpolation and trilinear interpolation between mipmaps are two commonly used alternatives that reduce aliasing or jaggies. If a texture coordinate falls outside the texture, it is either clamped or wrapped. Anisotropic filtering better eliminates directional artefacts when textures are viewed from oblique angles.
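As a rough illustration of the difference between nearest-neighbour and bilinear filtering, and between clamping and wrapping, the following Python sketch samples a small greyscale texture stored as a list of rows. The function names, the `mode` parameter, and the convention that texel centres sit at half-integer positions are assumptions made for this example, not a description of any particular graphics API.

```python
import math

def fetch_texel(texture, x, y, mode="clamp"):
    """Read one texel, resolving out-of-range indices by clamping or wrapping."""
    height, width = len(texture), len(texture[0])
    if mode == "wrap":
        x, y = x % width, y % height          # repeat the texture
    else:
        x = min(max(x, 0), width - 1)         # clamp to the edge texel
        y = min(max(y, 0), height - 1)
    return texture[y][x]

def sample_nearest(texture, u, v, mode="clamp"):
    """Nearest-neighbour: pick the texel whose cell contains (u, v)."""
    height, width = len(texture), len(texture[0])
    return fetch_texel(texture, math.floor(u * width), math.floor(v * height), mode)

def sample_bilinear(texture, u, v, mode="clamp"):
    """Bilinear: blend the four texels surrounding (u, v)."""
    height, width = len(texture), len(texture[0])
    # Assumption: texel centres are at (i + 0.5) / width, hence the 0.5 shift.
    x, y = u * width - 0.5, v * height - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    top = (fetch_texel(texture, x0, y0, mode) * (1 - fx)
           + fetch_texel(texture, x0 + 1, y0, mode) * fx)
    bottom = (fetch_texel(texture, x0, y0 + 1, mode) * (1 - fx)
              + fetch_texel(texture, x0 + 1, y0 + 1, mode) * fx)
    return top * (1 - fy) + bottom * fy

checker = [[0, 100],
           [100, 200]]
centre = sample_bilinear(checker, 0.5, 0.5)            # average of all four texels
wrapped = sample_nearest(checker, 1.25, 0.25, "wrap")  # wraps back to column 0
clamped = sample_nearest(checker, 1.25, 0.25, "clamp") # sticks to the last column
```

Trilinear and anisotropic filtering build on the same idea by blending several such samples, taken from different mipmap levels or along the direction of greatest stretch.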

Texture streaming

Texture streaming is a means of using data streams for textures, where each texture is available in two or more different resolutions, so that the renderer can choose which version to load into memory and use based on draw distance from the viewer and how much memory is available for textures. Texture streaming allows a rendering engine to use low-resolution textures for objects far away from the viewer's camera, and to replace them with more detailed textures, read from a data source, as the point of view nears the objects.
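The selection step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `choose_resolution`, the rule that the wanted resolution halves each time the distance doubles beyond `full_detail_distance`, and the 4 bytes per texel are all hypothetical choices for the example. Real engines use their own heuristics.

```python
def choose_resolution(resolutions, distance, budget_bytes,
                      full_detail_distance=10.0, bytes_per_texel=4):
    """Pick one of the available square texture sizes (sorted largest first).

    Hypothetical heuristic: step down one resolution each time the draw
    distance doubles beyond full_detail_distance, then keep stepping down
    until the chosen texture fits in the memory budget.
    """
    level = 0
    d = distance
    while d > full_detail_distance and level < len(resolutions) - 1:
        d /= 2.0
        level += 1
    # Respect the memory budget; fall back to the smallest version if nothing fits.
    while (level < len(resolutions) - 1
           and resolutions[level] ** 2 * bytes_per_texel > budget_bytes):
        level += 1
    return resolutions[level]

available = [1024, 512, 256, 128]
near = choose_resolution(available, distance=5.0, budget_bytes=64 * 2**20)
far = choose_resolution(available, distance=25.0, budget_bytes=64 * 2**20)
tight = choose_resolution(available, distance=5.0, budget_bytes=2**20)
```

With these inputs the nearby object gets the full-size texture, the distant one a smaller version, and the memory-constrained case drops to the largest version that fits the budget.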

Baking

As an optimization, it is possible to render detail from a complex, high-resolution model or expensive process (such as

Baking can be used as a form of

Rasterisation algorithms

Various techniques have evolved in software and hardware implementations. Each offers different trade-offs in precision, versatility and performance.

Affine texture mapping

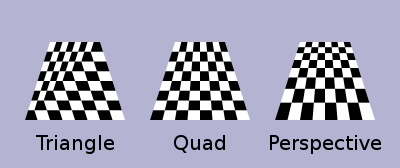

Affine texture mapping linearly interpolates texture coordinates across a surface, and so is the fastest form of texture mapping. Some software and hardware (such as the original

In contrast to perpendicular polygons, this leads to noticeable distortion with perspective transformations (see figure: the checker box texture appears bent), especially as primitives near the

For the case of rectangular objects, using quad primitives can look less incorrect than the same rectangle split into triangles, but because interpolating 4 points adds complexity to the rasterization, most early implementations preferred triangles only. Some hardware, such as the forward texture mapping used by the Nvidia NV1, was able to offer efficient quad primitives. With perspective correction (see below) triangles become equivalent and this advantage disappears.

For rectangular objects that are at right angles to the viewer, like floors and walls, the perspective only needs to be corrected in one direction across the screen, rather than both. The correct perspective mapping can be calculated at the left and right edges of the floor, and then an affine linear interpolation across that horizontal span will look correct, because every pixel along that line is the same distance from the viewer.

Perspective correctness

Perspective correct texturing accounts for the vertices' positions in 3D space, rather than simply interpolating coordinates in 2D screen space.[13] This achieves the correct visual effect but it is more expensive to calculate.[13]

To perform perspective correction of the texture coordinates $u$ and $v$, with $z$ being the depth component from the viewer's point of view, we can take advantage of the fact that the values $\frac{1}{z}$, $\frac{u}{z}$, and $\frac{v}{z}$ are linear in screen space across the surface being textured. In contrast, the original $z$, $u$ and $v$, before the division, are not linear across the surface in screen space. We can therefore linearly interpolate these reciprocals across the surface, computing corrected values at each pixel, to result in a perspective correct texture mapping.

To do this, we first calculate the reciprocals at each vertex of our geometry (3 points for a triangle). For vertex $n$ we have $\frac{u_n}{z_n}$, $\frac{v_n}{z_n}$, $\frac{1}{z_n}$. Then, we linearly interpolate these reciprocals between the vertices (e.g., using barycentric coordinates), resulting in interpolated values across the surface. At a given point, this yields the interpolated $u_i$, $v_i$ (the interpolated $\frac{u}{z}$ and $\frac{v}{z}$) and $\mathit{zReciprocal}_i = \frac{1}{z_i}$. Note that $u_i$, $v_i$ cannot yet be used as our texture coordinates, as our division by $z$ altered their coordinate system.

To correct back to the $u, v$ space we first calculate the corrected $z$ by again taking the reciprocal, $z_{correct} = \frac{1}{\mathit{zReciprocal}_i}$. Then we use this to correct our $u_i$, $v_i$: $u_{correct} = u_i \cdot z_{correct}$ and $v_{correct} = v_i \cdot z_{correct}$.[14]

This correction makes it so that in parts of the polygon that are closer to the viewer the difference from pixel to pixel between texture coordinates is smaller (stretching the texture wider) and in parts that are farther away this difference is larger (compressing the texture).
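The procedure described above (divide by depth at each vertex, interpolate linearly in screen space, then divide by the interpolated reciprocal depth) can be sketched in Python for a single triangle. The function names and the representation of a triangle as three texture coordinates plus three depths are assumptions made for this illustration. The weights are screen-space barycentric coordinates.

```python
def interpolate_affine(weights, uvs):
    """Interpolate (u, v) directly in screen space, ignoring depth."""
    u = sum(w * uv[0] for w, uv in zip(weights, uvs))
    v = sum(w * uv[1] for w, uv in zip(weights, uvs))
    return u, v

def interpolate_perspective(weights, uvs, depths):
    """Perspective-correct (u, v) at a point given screen-space barycentric weights."""
    # Step 1 and 2: form u/z, v/z and 1/z per vertex and interpolate them linearly.
    u_over_z = sum(w * uv[0] / z for w, uv, z in zip(weights, uvs, depths))
    v_over_z = sum(w * uv[1] / z for w, uv, z in zip(weights, uvs, depths))
    one_over_z = sum(w / z for w, z in zip(weights, depths))
    # Step 3: recover depth from its interpolated reciprocal, then undo the division.
    z_correct = 1.0 / one_over_z
    return u_over_z * z_correct, v_over_z * z_correct

uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
depths = [1.0, 3.0, 1.0]          # the second vertex is three times farther away
midpoint = (0.5, 0.5, 0.0)        # halfway along the first edge on screen

affine_uv = interpolate_affine(midpoint, uvs)
correct_uv = interpolate_perspective(midpoint, uvs, depths)
```

At the screen-space midpoint of the edge, affine interpolation gives $u = 0.5$, while the perspective-correct result is $u = 0.25$: the half of the edge nearer the viewer occupies more of the screen, so it covers less of the texture per pixel. When all three depths are equal, both functions return the same coordinates.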

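- Affine texture mapping directly interpolates a texture coordinate $u_\alpha$ between two endpoints $u_0$ and $u_1$:

  $$u_\alpha = (1 - \alpha)\,u_0 + \alpha\,u_1$$

  where $0 \le \alpha \le 1$.

- Perspective correct mapping interpolates after dividing by depth $z$, then uses its interpolated reciprocal to recover the correct coordinate:

  $$u_\alpha = \frac{(1 - \alpha)\,\frac{u_0}{z_0} + \alpha\,\frac{u_1}{z_1}}{(1 - \alpha)\,\frac{1}{z_0} + \alpha\,\frac{1}{z_1}}$$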

3D graphics hardware typically supports perspective correct texturing.

Various techniques have evolved for rendering texture mapped geometry into images with different quality/precision tradeoffs, which can be applied to both software and hardware.

Classic software texture mappers generally did only simple mapping with at most one lighting effect (typically applied through a lookup table), and the perspective correctness was about 16 times more expensive.

Restricted camera rotation

The

Some engines were able to render texture mapped

Subdivision for perspective correction

Every triangle can be further subdivided into groups of about 16 pixels in order to achieve two goals: first, to keep the arithmetic mill busy at all times; second, to produce faster arithmetic results.
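A minimal Python sketch of this kind of subdivision along one scanline follows. It assumes the common arrangement in which the perspective-correct coordinate is computed only at the boundaries of each 16-pixel group and cheap affine interpolation fills in the pixels between them. The function names and the single-coordinate scanline setup are illustrative assumptions, not taken from any particular renderer.

```python
def perspective_u(alpha, u0, z0, u1, z1):
    """Exact perspective-correct coordinate at fraction alpha across the span."""
    numerator = (1 - alpha) * u0 / z0 + alpha * u1 / z1
    denominator = (1 - alpha) / z0 + alpha / z1
    return numerator / denominator

def subdivided_span(width, u0, z0, u1, z1, group=16):
    """Texture coordinate for each of `width` pixels (width >= 2).

    One division per group boundary; affine interpolation inside each group.
    """
    last = width - 1
    coords = []
    for start in range(0, last, group):
        end = min(start + group, last)
        u_start = perspective_u(start / last, u0, z0, u1, z1)  # exact
        u_end = perspective_u(end / last, u0, z0, u1, z1)      # exact
        for x in range(start, end):
            t = (x - start) / (end - start)
            coords.append(u_start + (u_end - u_start) * t)     # affine within group
    coords.append(perspective_u(1.0, u0, z0, u1, z1))          # final pixel
    return coords

span = subdivided_span(65, u0=0.0, z0=1.0, u1=1.0, z1=4.0)
exact_at_8 = perspective_u(8 / 64, 0.0, 1.0, 1.0, 4.0)
affine_at_8 = 8 / 64   # plain affine interpolation of u across the whole span
```

For this span the subdivided value at pixel 8 is much closer to the exact coordinate than the whole-span affine value, while needing a division only once per group rather than once per pixel.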

World space subdivision

For perspective texture mapping without hardware support, a triangle is broken down into smaller triangles for rendering and affine mapping is used on them. The reason this technique works is that the distortion of affine mapping becomes much less noticeable on smaller polygons. The

Screen space subdivision

Software renderers generally preferred screen subdivision because it has less overhead. Additionally, they try to do linear interpolation along a line of pixels to simplify the set-up (compared to 2d affine interpolation) and thus again the overhead (also affine texture-mapping does not fit into the low number of registers of the

A different approach was taken for

Other techniques

Another technique approximated the perspective with a faster calculation, such as a polynomial. Still another technique uses the 1/z values of the last two drawn pixels to linearly extrapolate the next value. The division is then done starting from those values so that only a small remainder has to be divided,[17] but the amount of bookkeeping makes this method too slow on most systems.

Finally,

Hardware implementations

Texture mapping hardware was originally developed for simulation (e.g. as implemented in the

Modern

Some hardware combines texture mapping with hidden-surface determination in tile based deferred rendering or scanline rendering; such systems only fetch the visible texels at the expense of using greater workspace for transformed vertices. Most systems have settled on the Z-buffering approach, which can still reduce the texture mapping workload with front-to-back sorting.

Among earlier graphics hardware, there were two competing paradigms of how to deliver a texture to the screen:

- Forward texture mapping iterates through each texel on the texture, and decides where to place it on the screen.

- Inverse texture mapping instead iterates through pixels on the screen, and decides what texel to use for each.

Inverse texture mapping is the method which has become standard in modern hardware.

Inverse texture mapping

With this method, a pixel on the screen is mapped to a point on the texture. Each vertex of a

The primary advantage is that each pixel covered by a primitive will be traversed exactly once. Once a primitive's vertices are transformed, the amount of remaining work scales directly with how many pixels it covers on the screen.

The main disadvantage versus forward texture mapping is that the

The linear interpolation can be used directly for simple and efficient affine texture mapping, but can also be adapted for perspective correctness.

Forward texture mapping

Forward texture mapping maps each texel of the texture to a pixel on the screen. After transforming a rectangular primitive to a place on the screen, a forward texture mapping renderer iterates through each texel on the texture, splatting each one onto a pixel of the

This was used by some hardware, such as the 3DO, the Sega Saturn and the NV1.

The primary advantage is that the texture will be accessed in a simple linear order, allowing very efficient caching of the texture data. However, this benefit is also its disadvantage: as a primitive gets smaller on screen, it still has to iterate over every texel in the texture, causing many pixels to be overdrawn redundantly.
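The trade-off between the two paradigms can be seen in a deliberately simplified Python sketch that draws a texture into an axis-aligned rectangle with nearest-neighbour lookup. The function names and the one-pixel-per-texel splat are assumptions made for this illustration; real forward-mapping hardware handled arbitrary quads and filled gaps when magnifying.

```python
def inverse_map(texture, dst_w, dst_h):
    """Iterate over screen pixels and look up a texel for each one."""
    tex_h, tex_w = len(texture), len(texture[0])
    screen = [[None] * dst_w for _ in range(dst_h)]
    writes = 0
    for y in range(dst_h):
        for x in range(dst_w):
            tx = min(int((x + 0.5) * tex_w / dst_w), tex_w - 1)
            ty = min(int((y + 0.5) * tex_h / dst_h), tex_h - 1)
            screen[y][x] = texture[ty][tx]
            writes += 1
    return screen, writes

def forward_map(texture, dst_w, dst_h):
    """Iterate over texels in linear order and splat each onto a screen pixel."""
    tex_h, tex_w = len(texture), len(texture[0])
    screen = [[None] * dst_w for _ in range(dst_h)]
    writes = 0
    for ty in range(tex_h):
        for tx in range(tex_w):
            x = min(int((tx + 0.5) * dst_w / tex_w), dst_w - 1)
            y = min(int((ty + 0.5) * dst_h / tex_h), dst_h - 1)
            screen[y][x] = texture[ty][tx]   # later texels overwrite earlier ones
            writes += 1
    return screen, writes

texture = [[row * 8 + col for col in range(8)] for row in range(8)]  # 8x8 texels
_, inverse_writes = inverse_map(texture, 2, 2)   # primitive covers 2x2 pixels
_, forward_writes = forward_map(texture, 2, 2)
```

Drawing the 8×8 texture into a 2×2 pixel area costs 4 pixel writes with inverse mapping but 64 with forward mapping, because every texel is visited regardless of how small the primitive is on screen.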

This method is also well suited for rendering quad primitives rather than reducing them to triangles, which provided an advantage when perspective correct texturing was not available in hardware. This is because the affine distortion of a quad looks less incorrect than the same quad split into two triangles (see affine texture mapping above). The NV1 hardware also allowed a quadratic interpolation mode to provide an even better approximation of perspective correctness.

The existing hardware implementations did not provide effective

Applications

Beyond 3D rendering, the availability of texture mapping hardware has inspired its use for accelerating other tasks:

Tomography

It is possible to use texture mapping hardware to accelerate both the reconstruction of voxel data sets from tomographic scans, and to visualize the results.[19]

User interfaces

Many user interfaces use texture mapping to accelerate animated transitions of screen elements, e.g.

See also

- 2.5D

- 3D computer graphics

- Mipmap

- Materials system

- Parametrization

- Texture synthesis

- Texture atlas

- Texture splatting – a technique for combining textures

- Shader (computer graphics)

References

- ^ Wang, Huamin. "Texture Mapping" (PDF). Department of Computer Science and Engineering. Ohio State University. Archived from the original (PDF) on 2016-03-04. Retrieved 2016-01-15.

- ^ "Texture Mapping" (PDF). www.inf.pucrs.br. Retrieved September 15, 2019.

- ^ "CS 405 Texture Mapping". www.cs.uregina.ca. Retrieved 22 March 2018.

- ^ Catmull, E. (1974). A subdivision algorithm for computer display of curved surfaces (PDF) (PhD thesis). University of Utah.

- ^ "Method and Apparatus for Texture Generation".

- ^ Williams, Lance (1983). "Pyramidal Parametrics".

- ^ Fosner, Ron (January 1999). "DirectX 6.0 Goes Ballistic With Multiple New Features And Much Faster Code". Microsoft.com. Archived from the original on October 31, 2016. Retrieved September 15, 2019.

- ^ Hvidsten, Mike (Spring 2004). "The OpenGL Texture Mapping Guide". homepages.gac.edu. Archived from the original on 23 May 2019. Retrieved 22 March 2018.

- ^ Radoff, Jon (August 22, 2008). "Anatomy of an MMORPG". radoff.com. Archived from the original on 2009-12-13. Retrieved 2009-12-13.

- ^ Roberts, Susan. "How to use textures". Archived from the original on 24 September 2021. Retrieved 20 March 2021.

- ^ Blythe, David. Advanced Graphics Programming Techniques Using OpenGL. Siggraph 1999. (PDF) (see: Multitexture)

- ^ Kautz, Jan; Heidrich, Wolfgang; Seidel, Hans-Peter. Real-Time Bump Map Synthesis. (Max-Planck-Institut für Informatik; University of British Columbia)

- ^ Imagine Media. March 1996. p. 38.

- ^ Kalms, Mikael (1997). "Perspective Texturemapping". www.lysator.liu.se. Retrieved 2020-03-27.

- ^ "Voxel terrain engine", introduction. In a coder's mind, 2005 (archived 2013).

- ) (Chapter 70, pg. 1282)

- ^ US 5739818, Spackman, John Neil, "Apparatus and method for performing perspectively correct interpolation in computer graphics", issued 1998-04-14

- )

- ^ "texture mapping for tomography".

Software

- TexRecon Archived 2021-11-27 at the Wayback Machine — open-source software for texturing 3D models written in C++

External links

- Introduction into texture mapping using C and SDL (PDF)

- Programming a textured terrain using XNA/DirectX, from www.riemers.net

- Perspective correct texturing

- Time Texturing Texture mapping with bezier lines

- Polynomial Texture Mapping Archived 2019-03-07 at the Wayback Machine Interactive Relighting for Photos

- 3 Métodos de interpolación a partir de puntos (in Spanish) Methods that can be used to interpolate a texture knowing the texture coordinates at the vertices of a polygon

- 3D Texturing Tools