Theoretical computer science

Theoretical computer science (TCS) is a subset of general

It is difficult to circumscribe the theoretical areas precisely. The ACM's Special Interest Group on Algorithms and Computation Theory (SIGACT) provides the following description:[1]

TCS covers a wide variety of topics including

rigor.

History

While logical inference and mathematical proof had existed previously, in 1931

Modern theoretical computer science research is based on these basic developments, but includes many other mathematical and interdisciplinary problems that have been posed, as shown below:

|

|

|

P = NP ? | ||

| Mathematical logic | Automata theory | Number theory | Graph theory | Computability theory | Computational complexity theory |

| GNITIRW-TERCES |

|

|

|

| |

| Cryptography | Type theory | Category theory | Computational geometry | Combinatorial optimization | Quantum computing theory

|

Topics

Algorithms

An algorithm is a step-by-step procedure for calculations. Algorithms are used for calculation, data processing, and automated reasoning.

An algorithm is an

Automata theory

Automata theory is the study of abstract machines and automata, as well as the computational problems that can be solved using them. It is a theory in theoretical computer science, under discrete mathematics (a section of mathematics and also of computer science). Automata comes from the Greek word αὐτόματα meaning "self-acting".

Automata Theory is the study of self-operating virtual machines to help in the logical understanding of input and output process, without or with intermediate stage(s) of computation (or any function/process).

Coding theory

Computational biology

Computational biology involves the development and application of data-analytical and theoretical methods, mathematical modeling and computational simulation techniques to the study of biological, behavioral, and social systems.[10] The field is broadly defined and includes foundations in computer science, applied mathematics, animation, statistics, biochemistry, chemistry, biophysics, molecular biology, genetics, genomics, ecology, evolution, anatomy, neuroscience, and visualization.[11]

Computational biology is different from

, which is an interdisciplinary science using computers to store and process biological data.Computational complexity theory

A problem is regarded as inherently difficult if its solution requires significant resources, whatever the

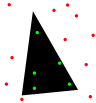

Computational geometry

Computational geometry is a branch of computer science devoted to the study of algorithms that can be stated in terms of geometry. Some purely geometrical problems arise out of the study of computational geometric algorithms, and such problems are also considered to be part of computational geometry.

The main impetus for the development of computational geometry as a discipline was progress in computer graphics and computer-aided design and manufacturing (CAD/CAM), but many problems in computational geometry are classical in nature, and may come from mathematical visualization.

Other important applications of computational geometry include robotics (motion planning and visibility problems), geographic information systems (GIS) (geometrical location and search, route planning), integrated circuit design (IC geometry design and verification), computer-aided engineering (CAE) (mesh generation), computer vision (3D reconstruction).

Computational learning theory

Theoretical results in machine learning mainly deal with a type of inductive learning called supervised learning. In supervised learning, an algorithm is given samples that are labeled in some useful way. For example, the samples might be descriptions of mushrooms, and the labels could be whether or not the mushrooms are edible. The algorithm takes these previously labeled samples and uses them to induce a classifier. This classifier is a function that assigns labels to samples including the samples that have never been previously seen by the algorithm. The goal of the supervised learning algorithm is to optimize some measure of performance such as minimizing the number of mistakes made on new samples.

Computational number theory

Computational number theory, also known as algorithmic number theory, is the study of algorithms for performing number theoretic computations. The best known problem in the field is integer factorization.

Cryptography

Modern cryptography is heavily based on mathematical theory and computer science practice; cryptographic algorithms are designed around

Data structures

A

Different kinds of data structures are suited to different kinds of applications, and some are highly specialized to specific tasks. For example, databases use B-tree indexes for small percentages of data retrieval and compilers and databases use dynamic hash tables as look up tables.

Data structures provide a means to manage large amounts of data efficiently for uses such as large

Distributed computation

Distributed computing studies distributed systems. A distributed system is a software system in which components located on networked computers communicate and coordinate their actions by passing messages.[17] The components interact with each other in order to achieve a common goal. Three significant characteristics of distributed systems are: concurrency of components, lack of a global clock, and independent failure of components.[17] Examples of distributed systems vary from SOA-based systems to massively multiplayer online games to peer-to-peer applications, and blockchain networks like Bitcoin.

A computer program that runs in a distributed system is called a distributed program, and distributed programming is the process of writing such programs.[18] There are many alternatives for the message passing mechanism, including RPC-like connectors and message queues. An important goal and challenge of distributed systems is location transparency.

Information-based complexity

Information-based complexity (IBC) studies optimal algorithms and computational complexity for continuous problems. IBC has studied continuous problems as path integration, partial differential equations, systems of ordinary differential equations, nonlinear equations, integral equations, fixed points, and very-high-dimensional integration.

Formal methods

Formal methods are a particular kind of mathematics based techniques for the specification, development and verification of software and hardware systems.[19] The use of formal methods for software and hardware design is motivated by the expectation that, as in other engineering disciplines, performing appropriate mathematical analysis can contribute to the reliability and robustness of a design.[20]

Formal methods are best described as the application of a fairly broad variety of theoretical computer science fundamentals, in particular

Information theory

Applications of fundamental topics of information theory include

Machine learning

Machine learning can be considered a subfield of computer science and

Parallel computation

The maximum possible speed-up of a single program as a result of parallelization is known as Amdahl's law.

Programming language theory and program semantics

Programming language theory is a branch of computer science that deals with the design, implementation, analysis, characterization, and classification of programming languages and their individual features. It falls within the discipline of theoretical computer science, both depending on and affecting mathematics, software engineering, and linguistics. It is an active research area, with numerous dedicated academic journals.

In

Quantum computation

A

As of 2014[update], quantum computing is still in its infancy but experiments have been carried out in which quantum computational operations were executed on a very small number of qubits.[44] Both practical and theoretical research continues, and many national governments and military funding agencies support quantum computing research to develop quantum computers for both civilian and national security purposes, such as cryptanalysis.[45]

Symbolic computation

Very-large-scale integration

Organizations

- European Association for Theoretical Computer Science

- SIGACT

- Simons Institute for the Theory of Computing

Journals and newsletters

- Discrete Mathematics and Theoretical Computer Science

- Information and Computation

- open accessjournal)

- Formal Aspects of Computing

- Journal of the ACM

- SIAM Journal on Computing (SICOMP)

- SIGACT News

- Theoretical Computer Science

- Theory of Computing Systems

- TheoretiCS (open accessjournal)

- International Journal of Foundations of Computer Science

- open accessjournal)

- Foundations and Trends in Theoretical Computer Science

- Journal of Automata, Languages and Combinatorics

- Acta Informatica

- Fundamenta Informaticae

- ACM Transactions on Computation Theory

- Computational Complexity

- Journal of Complexity

- ACM Transactions on Algorithms

- Information Processing Letters

- Open Computer Science (open accessjournal)

Conferences

- Annual ACM Symposium on Theory of Computing (STOC)[46]

- Annual IEEE Symposium on Foundations of Computer Science (FOCS)[46]

- Innovations in Theoretical Computer Science (ITCS)

- Mathematical Foundations of Computer Science (MFCS)[47]

- International Computer Science Symposium in Russia (CSR)[48]

- ACM–SIAM Symposium on Discrete Algorithms (SODA)[46]

- IEEE Symposium on Logic in Computer Science (LICS)[46]

- Computational Complexity Conference (CCC)[49]

- International Colloquium on Automata, Languages and Programming (ICALP)[49]

- Annual Symposium on Computational Geometry (SoCG)[49]

- ACM Symposium on Principles of Distributed Computing (PODC)[46]

- ACM Symposium on Parallelism in Algorithms and Architectures (SPAA)[49]

- Annual Conference on Learning Theory (COLT)[49]

- Symposium on Theoretical Aspects of Computer Science (STACS)[49]

- European Symposium on Algorithms (ESA)[49]

- Workshop on Approximation Algorithms for Combinatorial Optimization Problems (APPROX)[49]

- Workshop on Randomization and Computation (RANDOM)[49]

- International Symposium on Algorithms and Computation (ISAAC)[49]

- International Symposium on Fundamentals of Computation Theory (FCT)[50]

- International Workshop on Graph-Theoretic Concepts in Computer Science (WG)

See also

- Formal science

- Unsolved problems in computer science

- Sun–Ni law

Notes

- ^ "SIGACT". Retrieved 2017-01-19.

- ISBN 978-1-4503-7464-4.

- ^ "Any classical mathematical algorithm, for example, can be described in a finite number of English words". Rogers, Hartley Jr. (1967). Theory of Recursive Functions and Effective Computability. McGraw-Hill. Page 2.

- ^ Well defined with respect to the agent that executes the algorithm: "There is a computing agent, usually human, which can react to the instructions and carry out the computations" (Rogers 1967, p. 2).

- ^ "an algorithm is a procedure for computing a function (with respect to some chosen notation for integers) ... this limitation (to numerical functions) results in no loss of generality", (Rogers 1967, p. 1).

- zero or more inputs, i.e., quantitieswhich are given to it initially before the algorithm begins" (Knuth 1973:5).

- ^ "A procedure which has all the characteristics of an algorithm except that it possibly lacks finiteness may be called a 'computational method'" (Knuth 1973:5).

- ^ "An algorithm has one or more outputs, i.e. quantities which have a specified relation to the inputs" (Knuth 1973:5).

- ^ Whether or not a process with random interior processes (not including the input) is an algorithm is debatable. Rogers opines that: "a computation is carried out in a discrete stepwise fashion, without the use of continuous methods or analog devices . . . carried forward deterministically, without resort to random methods or devices, e.g., dice" (Rogers 1967, p. 2).

- ^ "NIH working definition of bioinformatics and computational biology" (PDF). Biomedical Information Science and Technology Initiative. 17 July 2000. Archived from the original (PDF) on 5 September 2012. Retrieved 18 August 2012.

- ^ "About the CCMB". Center for Computational Molecular Biology. Retrieved 18 August 2012.

- ^ Rivest, Ronald L. (1990). "Cryptology". In J. Van Leeuwen (ed.). Handbook of Theoretical Computer Science. Vol. 1. Elsevier.

- ^ Bellare, Mihir; Rogaway, Phillip (21 September 2005). "Introduction". Introduction to Modern Cryptography. p. 10.

- ISBN 978-0-8493-8523-0.

- Dictionary of Algorithms and Data Structures. U.S. National Institute of Standards and Technology. 15 December 2004. Online versionAccessed May 21, 2009.

- ^ Entry data structure in the Encyclopædia Britannica (2009) Online entry accessed on May 21, 2009.

- ^ ISBN 978-0-132-14301-1.

- ^ Andrews (2000). Dolev (2000). Ghosh (2007), p. 10.

- ^ R. W. Butler (2001-08-06). "What is Formal Methods?". Retrieved 2006-11-16.

- ^ C. Michael Holloway. "Why Engineers Should Consider Formal Methods" (PDF). 16th Digital Avionics Systems Conference (27–30 October 1997). Archived from the original (PDF) on 16 November 2006. Retrieved 2006-11-16.

- ^ Monin, pp.3–4

- ISBN 978-0262681087.

- S2CID 2138288.

- ^ Rando Allikmets, Wyeth W. Wasserman, Amy Hutchinson, Philip Smallwood, Jeremy Nathans, Peter K. Rogan, Thomas D. Schneider, Michael Dean (1998) Organization of the ABCR gene: analysis of promoter and splice junction sequences, Gene 215:1, 111–122

- ISBN 978-0-387-95364-9.

- S2CID 17870175.

- ^ Charles H. Bennett, Ming Li, and Bin Ma (2003) Chain Letters and Evolutionary Histories Archived 2007-10-07 at the Wayback Machine, Scientific American 288:6, 76–81

- ^ David R. Anderson (November 1, 2003). "Some background on why people in the empirical sciences may want to better understand the information-theoretic methods" (PDF). Archived from the original (PDF) on July 23, 2011. Retrieved 2010-06-23.

- .

- ^ ISBN 978-0-387-31073-2.

- ^ Wernick, Yang, Brankov, Yourganov and Strother, Machine Learning in Medical Imaging, IEEE Signal Processing Magazine, vol. 27, no. 4, July 2010, pp. 25–38

- ^ Mannila, Heikki (1996). Data mining: machine learning, statistics, and databases. Int'l Conf. Scientific and Statistical Database Management. IEEE Computer Society.

- ^ Friedman, Jerome H. (1998). "Data Mining and Statistics: What's the connection?". Computing Science and Statistics. 29 (1): 3–9.

- ISBN 978-0-8053-0177-9.

- ^ S.V. Adve et al. (November 2008). "Parallel Computing Research at Illinois: The UPCRC Agenda" Archived 2008-12-09 at the Wayback Machine (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits – increased clock frequency and smarter but increasingly complex architectures – are now hitting the so-called power wall. The computer industry has accepted that future performance increases must largely come from increasing the number of processors (or cores) on a die, rather than making a single core go faster."

- ^ Asanovic et al. Old [conventional wisdom]: Power is free, but transistors are expensive. New [conventional wisdom] is [that] power is expensive, but transistors are "free".

- Asanovic, Krste et al. (December 18, 2006). "The Landscape of Parallel Computing Research: A View from Berkeley"(PDF). University of California, Berkeley. Technical Report No. UCB/EECS-2006-183. "Old [conventional wisdom]: Increasing clock frequency is the primary method of improving processor performance. New [conventional wisdom]: Increasing parallelism is the primary method of improving processor performance ... Even representatives from Intel, a company generally associated with the 'higher clock-speed is better' position, warned that traditional approaches to maximizing performance through maximizing clock speed have been pushed to their limit."

- ISBN 978-1-55860-428-5.

- Isaac L. Chuang

- ^ Manin, Yu. I. (1980). Vychislimoe i nevychislimoe [Computable and Noncomputable] (in Russian). Sov.Radio. pp. 13–15. Archived from the original on 10 May 2013. Retrieved 4 March 2013.

- S2CID 124545445.

- .

- ^ Finkelstein, David (1968). "Space-Time Structure in High Energy Interactions". In Gudehus, T.; Kaiser, G. (eds.). Fundamental Interactions at High Energy. New York: Gordon & Breach.

- ^ "New qubit control bodes well for future of quantum computing". Retrieved 26 October 2014.

- ^ Quantum Information Science and Technology Roadmap for a sense of where the research is heading.

- ^ a b c d e The 2007 Australian Ranking of ICT Conferences Archived 2009-10-02 at the Wayback Machine: tier A+.

- ^ "MFCS 2017". Archived from the original on 2018-01-10. Retrieved 2018-01-09.

- ^ CSR 2018

- ^ a b c d e f g h i j The 2007 Australian Ranking of ICT Conferences Archived 2009-10-02 at the Wayback Machine: tier A.

- ^ FCT 2011 (retrieved 2013-06-03)

Further reading

- quantification theory. Aimed at graduate students.

External links

- SIGACT directory of additional theory links (archived 15 July 2017)

- Theory Matters Wiki Theoretical Computer Science (TCS) Advocacy Wiki

- List of academic conferences in the area of theoretical computer science at confsearch

- Theoretical Computer Science – StackExchange, a Question and Answer site for researchers in theoretical computer science

- Computer Science Animated

- Theory of computation at the Massachusetts Institute of Technology