Wikipedia:Wikipedia is succeeding

This is an essay. It contains the advice or opinions of one or more Wikipedia contributors. This page is not an encyclopedia article, nor is it one of Wikipedia's policies or guidelines, as it has not been thoroughly vetted by the community. Some essays represent widespread norms; others only represent minority viewpoints.

This essay presents arguments to show that the Wikipedia is succeeding in the goal of becoming a reputable and reliable encyclopedia. A sister essay, Wikipedia is failing, presents arguments from another point of view that we respond to here. In some cases, we also argue a more conservative point of view that it is too early to decide failure or success.

After rebutting the arguments, we place the indications of Wikipedia's success and failure in the larger context of the standard accepted criteria for all encyclopedias: overall size, organization, ease of navigation, breadth of coverage, depth of coverage, timeliness, readability, biases, and reliability.[1][2][3] We evaluate Wikipedia according to these criteria, and give detailed comparisons to a standard encyclopedia, the Encyclopædia Britannica.

All editors are encouraged to add arguments showing that Wikipedia is not failing. The contest is between arguments, not people; we share a common goal of clarifying where Wikipedia works and where it does not. We should strive to outdo each other in supporting data, reliable references and clear thinking.

Arguments in support

Currently the Wikipedia has over 6,813,044 articles, making it the largest encyclopedia ever assembled, eclipsing even the Yongle Encyclopedia (completed in 1408), which held the record for nearly 600 years.[5] Traditionally, the problem of finding information in a large reference work is managed by organizing topics alphabetically, by topic, and/or by including an alphabetical index. A more subtle organization is also imposed by the editor's choice of main articles and system of cross-referencing. Print encyclopedias generally give short entries to smaller topics, then cross-reference to a larger article in which smaller topics are discussed. This grouping of topics into larger articles was a key part of the "new plan" that was the basis for the 1st edition of the Encyclopædia Britannica.

Online encyclopedias such as Wikipedia have different methods of organization. Information is usually found by following a hyperlink from one article to another or by using a search engine. Hyperlinks are roughly equivalent to cross-references in traditional works, although a typical Wikipedia article has many more such cross-references than a traditional encyclopedia article. Using a search engine is roughly equivalent to searching the index of a print encyclopedia; unlike such indices, however, there is no limit to the number of possible search terms. For example, in 2007 the Encyclopædia Britannica contained 700,000 terms, which was less than half the number of articles in Wikipedia.

Wikipedia also has its own topical organization. Articles are grouped into categories that may be searched;[6] this is analogous to the Outline of Knowledge found in the Propædia of the Encyclopædia Britannica. However, unlike that Outline, the categorization of Wikipedia articles is not strictly hierarchical, instead forming a network of ideas. Wikipedia has also developed portals intended to provide readers with an overview of a topic.[7]

Therefore, Wikipedia's overall size and navigation system seem superior to those of existing encyclopedias.

Breadth and depth of coverage

Wikipedia is a general

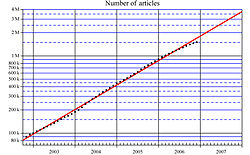

In addition to the roughly exponential growth in number of articles, the average length of each article has increased steadily,[8] as has the number of "featured articles" recognized for being of significant quality.[9] WikiProjects and automated article-assessment systems such as the MathBot have also fostered the improvement of articles in particular areas.

In general, Wikipedia's breadth and depth of coverage are superior to those of existing encyclopedias. However, not every topic found in other encyclopedias is present in Wikipedia yet; a WikiProject is dedicated to fixing these gaps in coverage.

Timeliness and readability

One strength of Wikipedia is its timeliness. Traditionally, new editions of print encyclopedias were released every few decades, as their information became noticeably outdated. In 1933,

Even the best reference work is of limited use if it is poorly written and unintelligible to its audience. Wikipedia benefits from a large community of proofreaders, who may detect errors and ambiguities; for comparison, the Encyclopædia Britannica employs only twelve copy editors.[12] It also fosters clearly written articles through various methods, such as requiring "excellent writing" for promotion to Featured Article status.[13] On the other hand, the number of individual contributors to the project – millions, if unregistered users are included – is far greater than that of any print encyclopedia. This can result in large variations in tone and style between (and sometimes within) articles; common policies and style guidelines within each language edition attempt to address this.[14]

In summary, Wikipedia is superior to existing encyclopedias in timeliness. However, its writing is uneven – sometimes better, sometimes worse than that of existing encyclopedias.

Biases

Encyclopedia editors have a responsibility to keep articles as free of bias as possible. Historically, even the best works have suffered from bias; for example,

Although Wikipedia has a policy on maintaining a neutral point of view,[17] a few zealous editors may seek to influence the presentation of an article in a biased way. This is usually dealt with swiftly and, in extreme cases, biased editors may be banned from editing.[18] In general, Wikipedia's editors also strive to be complete – to include all aspects of a topic and reflect the prevailing consensus in the scholarly community.

Being freely available, Wikipedia need not appeal to the tastes and interests of buyers and, thus, is able to avoid some bias that can skew commercial encyclopedias. As an example, the 2007 Macropædia tends to describe only the Western branch of a field, specifically in histories of architecture, literature, mathematics, music, dance, painting, philosophy, political philosophy, philosophical schools and doctrines, sculpture, theatre, and legal systems. Similarly, the 2007 Macropædia allots only one article each to Buddhism and most other religions, but devotes fourteen articles to Christianity, nearly half of its religion articles. Being international in scope and having an open-editor policy are likely factors that contribute to making Wikipedia articles less parochial than those of the Britannica.

This data suggests that Wikipedia's article coverage is less biased than existing encyclopedias, although it has not been studied whether Wikipedia's individual articles are less biased.

Reliability

Readers of an encyclopedia must have confidence that its assertions are true. In traditional scholarship, confidence is established by appealing to the authority of anonymously peer-reviewed publications and of experienced experts. However, as experts can disagree, and any one may be biased or mistaken, peer-reviewed publications are considered to have higher authority. Most encyclopedias include both of these; for example, the 699 Macropædia articles of the 2007 Encyclopædia Britannica give both references and the names of the authorities that wrote those articles, many of whom are leading experts in their fields. By contrast, most of the ~65,000 Micropædia articles neither give citations nor identify their authors; in such cases, the reader's confidence derives from the reputation of the Britannica itself.

Wikipedia appeals to the authority of peer-reviewed publications rather than the personal authority of experts.[19] It would be difficult to determine truly authoritative users with confidence, since Wikipedia does not require that its contributors give their legal names[20] or provide other information to establish their identity.[21] Although some contributors are authorities in their field, Wikipedia requires the contributions of even these contributors to be supported by published sources.[19] A drawback of this citation-only approach is that readers may be unable to judge the credibility of a cited source. The reader must be satisfied on two points: first, that the cited source is a genuine publication and, second, that it supports the assertion made by the article. Although the first point is usually easy to check, the second may require significant time, effort or training.

Wikipedia's reputation has improved in recent years, and its assertions are increasingly used as a source by organizations such as the U.S. Federal courts and the World Intellectual Property Office[22] – though mainly for supporting information rather than information decisive to a case.[23] Wikipedia has also been used as a source in journalism,[24] sometimes without attribution; several reporters have been dismissed for plagiarizing from Wikipedia.[25][26]

The English-language Wikipedia has introduced a scale against which the quality of articles is judged;[27] as of January 2021, more than 5900 have passed a rigorous set of criteria to acquire the highest "featured article" status; such articles are intended to provide a thorough, well-written coverage of their topic, and be supported by many references to peer-reviewed publications.[13] Wikipedia has been described as a "work in progress",[28] suggesting that it cannot claim to be authoritative in its own right, unlike more established encyclopedias. Despite its shortcomings, however, many hold out hope for its future.[29] An analogous historical example is provided by the Encyclopædia Britannica, whose first edition was of uneven scholarship,[30] but which rose to great heights in its later editions;[10] the elegant words of its first editor, William Smellie, pertain to Wikipedia as well:

With regard to errors in general, whether falling under the denomination of mental, typographical or accidental, we are conscious of being able to point out a greater number than any critic whatever. Men who are acquainted with the innumerable difficulties of attending the execution of a work of such an extensive nature will make proper allowances. To these we appeal, and shall rest satisfied with the judgment they pronounce.

Wikipedia's reputation has improved in recent years, and its assertions are increasingly used as a source – though mainly for supporting information or as a tertiary source.

Rebuttals to "Wikipedia is failing"

Rebuttal to "relative fraction of FA articles" argument

The sister essay notes correctly that the relative percentage of Featured articles, A-level and good articles is low (~1.1%) and has been gradually declining, since articles are being created faster than they are being promoted to these higher levels. We do not dispute these statistics, but we challenge their interpretation as an indication of "failure", for the following reasons.

First, Wikipedia's success as an encyclopedia should be evaluated, not relative to itself, but relative to the more absolute scale of other encyclopedias, such as the

Second, independent tests of the random article test proposed in the sister essay have not confirmed its conclusions. This suggests that the statistical sample size is too small to draw reliable conclusions. It also raises a reasonable doubt that the argument is the result of confirmation bias.

Third, comparing the count of featured articles to total articles (and measuring the rate of article improvement strictly by time) ignores the varying popularity of individual articles. If we take the view that Wikipedia exists for the benefit of its readers, then some articles are more important than others, according to the number of people who view them (for example, we might say a page with a million views matters as much as one million pages with one view each). If we care about efficiently allocating resources, the optimal strategy would be to work on improving the quality of articles in proportion to the number of views they get. Wikipedia appears to do this automatically, because articles with the most views tend to get the most edits, and thus the most popular articles (probably) improve the fastest. While it is true that Wikipedia is gaining a huge number of new articles which improve slowly, if an article receives few edits, most likely that means the article is not interesting to very many Wikipedia users (yet). Since the popularity of Web pages in general follows a power law (or Pareto distribution), we would expect most articles on Wikipedia to be obscure and thus they will improve slowly in terms of clock time, even though they may be improving just as quickly as the most popular articles in terms of view count. Perhaps a better way to measure article improvement is to measure time in views rather than years. The harm done by a poor-quality article is proportional to the number of visitors who view it while the quality is still poor. By this measure, a popular article that takes six months to improve might actually create more harm than an obscure article that takes several years to improve, since few visitors will have been harmed by the obscure article they don't visit. The real measure of success for Wikipedia, then, should be the number of visits an article must receive before it reaches featured quality. 
If research finds a relationship between views and getting up to featured quality, then our efforts to improve the operation of Wikipedia should focus on finding ways to reduce the required number of views to reach featured quality. Worrying about clock time then becomes largely irrelevant. (Perhaps Wikipedia has obscured the importance of view count by disabling page hit counters to reduce server load.)
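The view-weighted argument above can be illustrated with a minimal sketch. The traffic figures are hypothetical, chosen only for illustration, and the function name `poor_quality_views` is an assumption of this example, not an existing Wikipedia tool or statistic.

```python
def poor_quality_views(views_per_month: float, months_until_improved: float) -> float:
    """Views an article receives while its quality is still poor.

    The essay takes the harm done by a poor article to be
    proportional to this number.
    """
    return views_per_month * months_until_improved


# Hypothetical figures, not measured data.
popular = poor_quality_views(views_per_month=100_000, months_until_improved=6)
obscure = poor_quality_views(views_per_month=50, months_until_improved=36)

print(f"popular article: {popular:,.0f} poor-quality views")
print(f"obscure article: {obscure:,.0f} poor-quality views")
```

Under these assumed numbers, the popular article exposes far more readers to poor content even though it is improved six times sooner in clock time.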

Finally and least importantly, the sister essay fails to note that the FA percentage is not declining in the German Wikipedia. We encourage it to address the reasons for this apparent contradiction.

Rebuttal to "Rate of FA production" argument

The sister essay notes correctly that the rate of producing Wikipedia:Featured articles is quite slow at present, typically one per day. It further notes that, at this rate, it would require 4,380 years for all existing Wikipedia articles to reach FA status. However, this argument seems to be based on the false supposition that Wikipedia is a failure unless all of its articles are made into Featured Articles.
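The 4,380-year figure follows from simple division, which the sketch below reproduces. The article count of 1,598,700 is an assumption obtained by working backwards from the quoted figure (4,380 years at one promotion per day, with 365-day years); neither essay states it.

```python
DAYS_PER_YEAR = 365  # assumption: leap days are ignored


def years_to_feature_all(total_articles: int, promotions_per_day: float = 1.0) -> float:
    """Years needed to promote every article at a constant daily rate."""
    return total_articles / (promotions_per_day * DAYS_PER_YEAR)


# Assumed article count, back-calculated from the 4,380-year claim.
assumed_article_count = 1_598_700
print(years_to_feature_all(assumed_article_count))
```

The result scales linearly with the article count and inversely with the promotion rate, so the conclusion depends entirely on the premise that every article must become featured.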

Rather than comparing Wikipedia to itself, it seems more appropriate to judge its "failure" by comparing it to standard encyclopedias such as the

The sister essay also proposes a Special:Recentchanges experiment to show that encyclopedic content is being added slowly. According to one independent test, typically 5% of edits are major contributions and 30% are minor contributions. A full 50% of edits are devoted to copy-editing, wiki-linking, formatting and tagging, whereas roughly 8% apiece are dedicated to vandalism and its reversion. We would argue that these data are not a sign of Wikipedia's "failure", but merely reflect the habits of its editors (and vandals). For example, the recently featured article on DNA was written piecemeal, with its major contributor making a minor edit roughly every five minutes; yet the final product is undoubtedly an article of benchmark quality for Wikipedia. Not all editors have to contribute wholesale, adding entire sections at a time, and it is entirely reasonable that editors should want to "tweak" their wording and fix typos. Therefore, rather than counting edits, a more informative study for predicting Wikipedia's destiny would monitor the rate at which useful bytes are being added.

Since articles on Wikipedia vary widely in popularity, it may be misleading to measure the time for an article to reach featured quality; a better measure may be the number of page views.

A subtle, hard-to-evaluate factor is the improvement in Wikipedia's underlying tools. Bringing an article to featured quality is quite difficult; the minority of editors who can do this have managed to master the arcana of Wikipedia's complex policies, guidelines, and procedures. However, the enabling tools are improving. For example, recent improvements include: the

One possible illustrative example of tool improvement has been the slight reduction in repetitive questions on the

The Help desk itself may be a useful indicator of Wikipedia's success. Almost every question that appears on the Help desk receives at least one useful answer in a reasonably short time, ranging from mere minutes for straightforward questions to (sometimes) several hours for difficult questions. The quality of Wikipedia's free technical support compares well to commercially-provided support. Given that technical support is costly for companies to provide, and often unpleasant for technical support representatives and customers alike, the fact that Wikipedia makes technical support enjoyable enough for people to do for free is a remarkable achievement in its own right. The surprising success of Wikipedia's Help desk suggests that Wikipedia is succeeding at something.

Finally, some Wikipedians believe that the standards being demanded currently at Wikipedia:Featured article candidates are overly strict, e.g., demanding too many inline citations and forbidding even trivial re-arrangements of published equations. Since a good-faith effort to address every concern must be made (whether well- or ill-advised), the fraction of articles satisfying everyone's concept of a "featured article" can be small. Thus, many excellent articles may have difficulty in reaching FA status. In addition, many editors may have written articles which could potentially pass FA, but have declined to participate in the process, perceiving it as unpleasant, and dominated by non-experts in the specialized topics which their articles cover.

Rebuttal to "Quality of vital articles" argument

The sister essay notes correctly that 17% of the 1182 recognized Vital Articles are featured articles or good articles, whereas 11% are stubs or have cleanup tags. The sister essay argues that the low percentage (17%) of high-quality articles is disappointing, and a sign of Wikipedia's failure. In a related assumption, the sister essay assumes that all articles below Good-article class are signs of Wikipedia's failure. While we agree that the stubs, etc. are disappointing, we do not agree with the sister essay's assumption, nor do we see the present state of the Vital Articles as proof that Wikipedia is failing.

First, this argument pertains to the present state of the Vital Articles and does not offer any data on the rate at which these articles are being improved to featured articles and good articles. For example, if they were on a path to being completed within two years, the present state would hardly qualify as proof of failure; instead, it would merely indicate that a significant fraction of the articles remain to be improved. Without knowing the prevailing trends, these data do not give any information on the future success or failure of Wikipedia.

- Recent note: In response to this essay, new data have been added to the sister essay on the rate of promotion of Vital Articles to Featured Article status; 30 were promoted in 2006, roughly doubling the number from 41 to 71. A key question is how to extrapolate this into the future. Assuming a doubling time of one year would suggest that 140 Vital Articles will be featured in January 2008, 280 by January 2009 and so on; by this reckoning, all Vital Articles will be featured within five years. However, predictions are always uncertain, especially of the future.
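The doubling extrapolation in the note above can be checked with a short sketch. It assumes, as the note does, a doubling time of exactly one year starting from 71 featured Vital Articles in January 2007, out of the 1182 Vital Articles; the function name `doubling_projection` is hypothetical.

```python
def doubling_projection(featured_now: int, total: int, start_year: int) -> list[tuple[int, int]]:
    """Project the featured count year by year, doubling annually.

    The count is capped at `total`, since no more than all
    Vital Articles can be featured.
    """
    projection = [(start_year, featured_now)]
    year, count = start_year, featured_now
    while count < total:
        year += 1
        count = min(count * 2, total)
        projection.append((year, count))
    return projection


for year, count in doubling_projection(featured_now=71, total=1182, start_year=2007):
    print(year, count)
```

Exact doubling gives 142 and 284 where the note rounds to 140 and 280; either way the projection reaches the full list after five doublings, which matches the "within five years" estimate. The model is only as good as its assumption of a constant doubling time.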

Second, the sister essay's assumption fails to recognize that many B- and Start-level articles are indeed superior to their counterparts in standard encyclopedias, such as the

Third, we argue that this will be a common occurrence among the B-level articles, which comprise roughly 8% of all articles, judging from

Therefore, it is wrong to assume that B-level and Start-class articles are inferior to their counterparts in other encyclopedias.

Rebuttal to "Maintenance of high-quality articles" argument

The sister essay notes correctly that roughly 20% of all articles promoted to Featured Article status have been demoted, 340 articles in all. It likewise notes that vandalism and poorly conceived edits to certain featured articles such as Mauna Loa, Sun and Ryanair have gone uncorrected for long periods once their principal author stopped watching over them. From these examples, it extrapolates that all high-quality articles will degrade inexorably if they are not watched over, due to vandalism and misguided edits. It is also noted that articles such as Windows 2000 and MDAC were not being watched by their principal author for quite some time with hardly any degradation in quality.

We acknowledge the danger cited in the sister essay, and encourage Wikipedians to develop better article-custodial methods, e.g., an improved AntiVandalBot. However, we do not agree that this danger implies that Wikipedia will "fail", for the following reasons. It is quite rare that a Wikipedian will have been a major contributor to more than eight Featured Articles, and the present rate of major vandalism seems sufficiently low (say, once per month on average) that it can be dealt with "by hand". Moreover, most Featured Articles are now watched over by the WikiProjects, which gives a larger pool of well-meaning editors watching over the project's articles. However, one useful idea might be to give the WikiProjects their own "Watch" lists to monitor changes in their articles.

Another mitigating factor is that most of the demoted Featured Articles appear to have been demoted because of rising standards, particularly for inline citations. Evidence of a significant number of Featured Articles being demoted for declining quality is lacking at present.

Responses

A response on performance on core topics

Critics claim that various lists of important or essential articles are not of the highest quality, such as the list of articles deemed vital at

A response to performance on broader topics

Critics maintain: There are about 1,050 featured articles. There are also about 39,522 good articles. However, there are currently 6,813,044 articles on Wikipedia. This means that slightly more than 99.8% of all the articles on Wikipedia are not considered well written...

Is this statistic valid? Proprietary non-free encyclopedias don't even have 10% of the number of articles! This "self-standardization" argument may be misleading on two counts. First, even if all the articles were well-written, that would not guarantee that they were of high quality. Second, Wikipedia is growing exponentially and, therefore, a significant fraction of its articles were started recently. Naturally, such new articles have not had sufficient time to be improved to high encyclopedic quality. Therefore, it is better to compare Wikipedia to an established standard encyclopedia, such as the Encyclopædia Britannica.

Even assuming that it is quality, not quantity that matters, we must be aware of what the above metric measures – the metric measures how many articles have passed through a complex and dreary nomination and review process. No impartial committee has examined every article on Wikipedia and decided that only 39,522 are good. That number just represents the number of articles that have been submitted to the 'good articles' project which have been approved by two people (the submitter and an impartial reviewer) as good. This is not necessarily statistically significant for any purpose of measuring Wikipedia's quality.

A response to the maintenance of standards

The sister article asks: Do articles which are judged to have reached the highest standards remain excellent for a long time, or does article quality decline as well-meant but poor quality edits cause article quality to fall over time?

This is an important question that needs to be asked and examined closely. However, to conclude at such an early stage that this will lead to a long-term failure of Wikipedia is premature. The main factor may actually be rising standards for featured articles; many articles that are de-featured have improved over time, but were featured before the standards for referencing and style were as strict.

Maintenance projects aimed at examining solutions to long-term problems demonstrate the interest of volunteers, editors, administrators and interested parties in making a positive difference.

A response to the rate of quality article production

Critics argue: About one article a day on average becomes featured; at this rate, it will take 4,380 years for all the currently existing articles to meet FA criteria.

Wikipedians are very patient. Furthermore, many of the articles currently not featured will most likely never be featured, since there are many topics which meet Wikipedia notability criteria but do not have enough verifiable information about them to become featured articles.

A response to bad editors

The sister essay suggests: "... unfortunately, 'most dedicated' often ends up meaning 'most emotionally invested'."

This is another area that will need further research and thought. The efforts to remove systematic bias and individual POV are long term. It's far too early to claim failure or that the problems are insurmountable. The Wikipedia policy

Food for thought

Ask not what the Wikipedia can do for you, but what you can do for the Wikipedia. Here's an idea:

Open questions

- Are the statistical measures introduced by WP:FAIL relevant?

- Can stubs be counted as true articles, and therefore relevant to any statistical examination of the project?

- What can be done to improve the system?

- Is radical change required, or just small adjustments to the current set-up?

- Are articles really NPOV?

See also

- Wikipedia:100,000 feature-quality articles

- Wikipedia:Evaluating Wikipedia as an encyclopedia

- Wikipedia:Expert retention

- Wikipedia:Problems with Wikipedia

- Wikipedia:Requested articles

- Wikipedia:Why Wikipedia is so great

- Wikipedia:Why Wikipedia is not so great

- Wikipedia:Wikipedia is failing

- Wikipedia:Wikipedia may or may not be failing

References

- ISBN 0-89774-744-5.

- ISBN 0-8352-3669-2.

- ISBN 0-8389-7823-1.

- "Wikipedia:Modelling Wikipedia's growth", English Wikipedia. Retrieved on 2007-01-29.

- Encyclopædia Britannica Inc. 2007. pp. 257–286.

- "Wikipedia:Categorical index", English Wikipedia. Retrieved on 2007-01-27.

- "Wikipedia:Portal", English Wikipedia. Retrieved on 2007-01-27.

- "English Wikipedia statistics", English Wikipedia. Retrieved on 2007-01-29.

- "Wikipedia:Featured article statistics", English Wikipedia. Retrieved on 2007-01-29.

- Kogan, Herman (1958). The Great EB: The Story of the Encyclopædia Britannica. Chicago: The University of Chicago Press. Library of Congress catalog number 58-8379.

- Cited by the Chicago Sun-Times (January 16, 2005).

- The New Encyclopædia Britannica (15th edition, Propædia ed.). 2007. pp. final page.

- "Featured article criteria", Wikipedia. Retrieved on 2007-01-27.

- For example, "Manual of Style", English-language Wikipedia. Retrieved on 2007-01-28.

- Encyclopædia Britannica Inc.

- ISBN 0-8108-2567-8.

- "Wikipedia:Neutral point of view", Wikipedia (21 January 2007).

- "Banning policy", English Wikipedia. Retrieved on 2007-01-28.

- "Wikipedia:Reliable sources", English Wikipedia. Retrieved on 2007-01-27.

- "Wikipedia:Username", English Wikipedia. Retrieved on 2007-01-29.

- "Wikipedia:Privacy", English Wikipedia. Retrieved on 2007-01-29.

- Arias, Martha L. (29 January 2007). "Wikipedia: The Free Online Encyclopedia and its Use as Court Source". Internet Business Law Services.

- Cohen, Noam (29 January 2007). "Courts Turn to Wikipedia, but Selectively". New York Times.

- 8 September 2004.

- 9 January 2007.

- 13 January 2007.

- "Wikipedia:Version 1.0 Editorial Team/Assessment", Wikipedia. Retrieved on 2007-01-27.

- Helm, Burt (14 December 2005). "Wikipedia: A Work in Progress". BusinessWeek.

- Grimmelmann, James (27 August 2006). "Seven Wikipedia Fallacies". LawMeme.

- Krapp, Philip; Balou, Patricia K. (1992). Collier's Encyclopedia. Vol. 9. New York: Macmillan Educational Company. p. 135. Library of Congress catalog number 91-61165. The Britannica's 1st edition is described as "deplorably inaccurate and unscientific" in places.