Criss-cross algorithm

In

Like the simplex algorithm of George B. Dantzig, the criss-cross algorithm is not a polynomial-time algorithm for linear programming. Both algorithms visit all 2^D corners of a (perturbed) cube in dimension D, the Klee–Minty cube (after Victor Klee and George J. Minty), in the worst case.[4][5] However, when it is started at a random corner, the criss-cross algorithm on average visits only D additional corners.[6][7][8] Thus, for the three-dimensional cube, the algorithm visits all 8 corners in the worst case and exactly 3 additional corners on average.

History

The criss-cross algorithm was published independently by

Comparison with the simplex algorithm for linear optimization

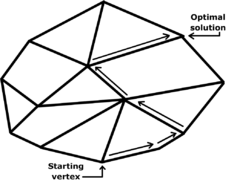

In linear programming, the criss-cross algorithm pivots between a sequence of bases but differs from the simplex algorithm. The simplex algorithm first finds a (primal-) feasible basis by solving a "phase-one problem"; in "phase two", the simplex algorithm pivots between a sequence of basic feasible solutions so that the objective function is non-decreasing with each pivot, terminating with an optimal solution (also finally finding a "dual feasible" solution).[3][11]

The criss-cross algorithm is simpler than the simplex algorithm, because the criss-cross algorithm only has one phase. Its pivoting rules are similar to the

While most simplex variants are monotonic in the objective (strictly in the non-degenerate case), most variants of the criss-cross algorithm lack a monotone merit function, which can be a disadvantage in practice.

Description

The criss-cross algorithm works on a standard pivot tableau (or on parts of a tableau calculated on the fly, if implemented like the revised simplex method). In a general step, if the tableau is primal or dual infeasible, the algorithm selects one of the infeasible rows or columns as the pivot row or column using an index selection rule. An important property is that the selection is made on the union of the infeasible indices: the standard version of the algorithm does not distinguish column indices from row indices (that is, the column indices that are basic in the rows). If a row is selected, the algorithm uses the index selection rule to identify a column position and carries out a dual-type pivot; if a column is selected, it uses the index selection rule to find a row position and carries out a primal-type pivot.
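The general step described above can be sketched in Python. This is a minimal illustration, not a reference implementation: it assumes the problem is given as "maximize c·x subject to A x ≤ b, x ≥ 0", it uses the least-index rule as the index selection rule, and the function name `criss_cross`, the tableau layout, and the status strings are choices made for this example only.

```python
from fractions import Fraction


def criss_cross(A, b, c):
    """Sketch: maximize c.x subject to A x <= b, x >= 0 (least-index rule).

    Variables 0..n-1 are the original ones; n..n+m-1 are slack variables.
    """
    m, n = len(A), len(c)
    # Rows 0..m-1 hold [A | I | b]; row m holds the reduced costs, and its
    # last entry is minus the current objective value.
    T = [[Fraction(v) for v in A[i]]
         + [Fraction(int(i == k)) for k in range(m)]
         + [Fraction(b[i])] for i in range(m)]
    T.append([Fraction(v) for v in c] + [Fraction(0)] * (m + 1))
    basis = list(range(n, n + m))  # basis[i] = variable that is basic in row i

    def pivot(r, j):
        p = T[r][j]
        T[r] = [v / p for v in T[r]]
        for i in range(m + 1):
            if i != r and T[i][j] != 0:
                f = T[i][j]
                T[i] = [v - f * w for v, w in zip(T[i], T[r])]
        basis[r] = j

    while True:
        row_of = {var: i for i, var in enumerate(basis)}
        # Smallest infeasible index over rows AND columns together:
        # a basic variable with negative value (primal infeasible) or
        # a nonbasic variable with positive reduced cost (dual infeasible).
        k = next((v for v in range(n + m)
                  if (v in row_of and T[row_of[v]][-1] < 0)
                  or (v not in row_of and T[m][v] > 0)), None)
        if k is None:  # primal and dual feasible: the basis is optimal
            x = [Fraction(0)] * (n + m)
            for i, var in enumerate(basis):
                x[var] = T[i][-1]
            return "optimal", x[:n], -T[m][-1]
        if k in row_of:
            # Row selected: dual-type pivot on the least-index nonbasic
            # column with a negative entry in that row.
            r = row_of[k]
            j = next((v for v in range(n + m)
                      if v not in row_of and T[r][v] < 0), None)
            if j is None:
                return "infeasible", None, None
            pivot(r, j)
        else:
            # Column selected: primal-type pivot on the row whose basic
            # variable has the least index among positive column entries.
            i_var = next((v for v in range(n + m)
                          if v in row_of and T[row_of[v]][k] > 0), None)
            if i_var is None:
                return "dual infeasible", None, None
            pivot(row_of[i_var], k)


# Example: maximize x + y s.t. x + 2y <= 4, 3x + y <= 6, x + y >= 1.
status, x, value = criss_cross([[1, 2], [3, 1], [-1, -1]], [4, 6, -1], [1, 1])
# status is "optimal", x is [8/5, 6/5], and value is 14/5 (as Fractions).
```

In this example the starting basis is primal infeasible (the third right-hand side is negative) and the basis reached after the first pivot is primal infeasible as well, so the sketch passes through infeasible bases without a separate phase one. Exact rational arithmetic via `fractions.Fraction` is used only to keep the sign tests reliable in a small example.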

Computational complexity: Worst and average cases

The

Several algorithms for linear programming—

However, like the simplex algorithm of Dantzig, the criss-cross algorithm is not a polynomial-time algorithm for linear programming. Terlaky's criss-cross algorithm visits all the 2^D corners of a (perturbed) cube in dimension D, according to a paper of Roos; Roos's paper modifies the Klee–Minty construction of a cube on which the simplex algorithm takes 2^D steps.[3][4][5] Like the simplex algorithm, the criss-cross algorithm visits all 8 corners of the three-dimensional cube in the worst case.

However, when it is initialized at a random corner of the cube, the criss-cross algorithm visits only D additional corners on average, according to a 1994 paper by Fukuda and Namiki.[6][7] Trivially, the simplex algorithm takes on average D steps for a cube.[8][15] Like the simplex algorithm, the criss-cross algorithm visits exactly 3 additional corners of the three-dimensional cube on average.
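To give a sense of the gap between the two counts quoted above, the following short Python sketch tabulates the worst-case number of corners (2^D) against the average number of additional corners from a random start (D). The function names are hypothetical and the code only restates the figures from the text; it does not run the algorithm on a cube.

```python
def worst_case_corners(D):
    """Number of corners of the D-dimensional cube, all visited in the worst case."""
    return 2 ** D


def average_additional_corners(D):
    """Average number of additional corners visited from a random starting corner."""
    return D


for D in (3, 10, 20):
    print(D, worst_case_corners(D), average_additional_corners(D))
```

For D = 3 this gives the 8 corners and 3 additional corners mentioned in the text; the worst-case count grows exponentially in D while the average grows linearly.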

Variants

The criss-cross algorithm has been extended to solve more general problems than linear programming problems.

Other optimization problems with linear constraints

There are variants of the criss-cross algorithm for linear programming, for

Vertex enumeration

The criss-cross algorithm was used in an algorithm for

Oriented matroids

The criss-cross algorithm is often studied using the theory of oriented matroids (OMs), which is a combinatorial abstraction of linear-optimization theory.[17][23] Indeed, Bland's pivoting rule was based on his previous papers on oriented-matroid theory. However, Bland's rule exhibits cycling on some oriented-matroid linear-programming problems.[17] The first purely combinatorial algorithm for linear programming was devised by Michael J. Todd.[17][24] Todd's algorithm was developed not only for linear programming in the setting of oriented matroids, but also for quadratic-programming problems and linear-complementarity problems.[17][24] Unfortunately, Todd's algorithm is complicated even to state, and its finite-convergence proofs are somewhat complicated.[17]

The criss-cross algorithm and its proof of finite termination can be simply stated and readily extend to the setting of oriented matroids. The algorithm can be further simplified for linear feasibility problems, that is, for linear systems with nonnegative variables; these problems can be formulated for oriented matroids.[14] The criss-cross algorithm has been adapted for problems that are more complicated than linear programming: there are oriented-matroid variants also for the quadratic-programming problem and for the linear-complementarity problem.[3][16][17]

Summary

The criss-cross algorithm is a simply stated algorithm for linear programming. It was the second fully combinatorial algorithm for linear programming. The partially combinatorial simplex algorithm of Bland cycles on some (nonrealizable) oriented matroids. The first fully combinatorial algorithm was published by Todd, and it is also like the simplex algorithm in that it preserves feasibility after the first feasible basis is generated; however, Todd's rule is complicated. The criss-cross algorithm is not a simplex-like algorithm, because it need not maintain feasibility. The criss-cross algorithm does not have polynomial time-complexity, however.

Researchers have extended the criss-cross algorithm to many optimization problems, including linear-fractional programming. The criss-cross algorithm can solve quadratic programming problems and linear complementarity problems, even in the setting of oriented matroids. Even when generalized, the criss-cross algorithm remains simply stated.

See also

- Jack Edmonds (pioneer of combinatorial optimization and oriented-matroid theorist; doctoral advisor of Komei Fukuda)

Notes

- ^ a b Illés, Szirmai & Terlaky (1999)

- ^ S2CID 62199788.

- ^ a b c d e f g Fukuda & Terlaky (1997)

- ^ a b Roos (1990)

- ^ MR 0332165.

- ^ a b c Fukuda & Terlaky (1997, p. 385)

- ^ a b Fukuda & Namiki (1994, p. 367)

- ^ MR 0868467.

- ^ Terlaky (1985) and Terlaky (1987)

- ^ Wang (1987)

- ^ a b Terlaky & Zhang (1993)

- ^ a b

Bland, Robert G. (May 1977). "New finite pivoting rules for the simplex method". Mathematics of Operations Research. 2 (2): 103–107. MR 0459599.

- ^ Bland's rule is also related to an earlier least-index rule, which was proposed by Katta G. Murty for the linear complementarity problem, according to Fukuda & Namiki (1994).

- ^ a b Klafszky & Terlaky (1991)

- Euclidean unit sphere, as proved by Borgwardt and by Smale.

- ^ a b Fukuda & Namiki (1994)

- ^ MR 1744046.

- ^ .

- ^ S2CID 24418835. Archived from the original (PDF) on 23 September 2015. Retrieved 30 August 2011.

- MR 0986877.

- ^ Avis & Fukuda (1992, p. 297)

- ^ The v vertices in a simple arrangement of n hyperplanes in D dimensions can be found in O(n^2 Dv) time and O(nD) space complexity.

- ^ The theory of oriented matroids was initiated by R. Tyrrell Rockafellar. (Rockafellar 1969):

Rockafellar, R. T. (1969). "The elementary vectors of a subspace of (1967)" (PDF). In

MR 0278972. PDF reprint. Rockafellar was influenced by the earlier studies of Albert W. Tucker and George J. Minty. Tucker and Minty had studied the sign patterns of the matrices arising through the pivoting operations of Dantzig's simplex algorithm.

- ^ MR 0811116.

References

- MR 1174359.

- Csizmadia, Zsolt; Illés, Tibor (2006). "New criss-cross type algorithms for linear complementarity problems with sufficient matrices" (PDF). Optimization Methods and Software. 21 (2): 247–266. S2CID 24418835. Archived from the original (PDF) on 23 September 2015. Retrieved 30 August 2011.

- S2CID 21476636.

- S2CID 2794181. Postscript preprint.

- den Hertog, D.; Roos, C.; Terlaky, T. (1 July 1993). "The linear complementarity problem, sufficient matrices, and the criss-cross method" (PDF). Linear Algebra and Its Applications. 187: 1–14. MR 1221693.

- Illés, Tibor; Szirmai, Ákos; Terlaky, Tamás (1999). "The finite criss-cross method for hyperbolic programming". European Journal of Operational Research. 114 (1): 198–214.

- Klafszky, Emil; Terlaky, Tamás (June 1991). "The role of pivoting in proving some fundamental theorems of linear algebra". Linear Algebra and Its Applications. 151: 97–118. MR 1102142.

- Roos, C. (1990). "An exponential example for Terlaky's pivoting rule for the criss-cross simplex method". Mathematical Programming. Series A. 46 (1): 79–84. S2CID 33463483.

- Terlaky, T. (1985). "A convergent criss-cross method". Optimization: A Journal of Mathematical Programming and Operations Research. 16 (5): 683–690. MR 0798939.

- MR 0888684.

- S2CID 6058077.

- Wang, Zhe Min (1987). "A finite conformal-elimination free algorithm over oriented matroid programming". Chinese Annals of Mathematics (Shuxue Niankan B Ji). Series B. 8 (1): 120–125. MR 0886756.

External links

- Komei Fukuda (ETH Zentrum, Zurich) with publications

- Tamás Terlaky (Lehigh University) with publications Archived 28 September 2011 at the Wayback Machine