BCPNN

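A Bayesian Confidence Propagation Neural Network (BCPNN) is an artificial neural network inspired by Bayes' theorem, which regards neural computation and processing as probabilistic inference.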

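The basic model is a feedforward neural network comprising neural units with continuous activation, having a bias that represents the prior probability, and connected by Bayesian weights in the form of point-wise mutual information.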

BCPNN has been used for machine learning classification[10] and data mining, for example for the discovery of adverse drug reactions.[11] The BCPNN learning rule has also been used to model biological synaptic plasticity and intrinsic excitability in large-scale spiking neural network (SNN) models of cortical associative memory[12][13] and reward learning in the basal ganglia.[14]

Network architecture

The BCPNN network architecture is modular in terms of hypercolumns and minicolumns.

Lateral inhibition within the hypercolumn makes it a soft winner-take-all module. In real cortex, the number of minicolumns within a hypercolumn is on the order of a hundred, which makes the activity sparse, at the level of 1% or less, given that hypercolumns can also be silent.[16] A BCPNN network the size of the human neocortex would have a couple of million hypercolumns, partitioned into some hundred areas. In addition to sparse activity, a large-scale BCPNN would also have very sparse connectivity, given that the real cortex is sparsely connected at the level of 0.01 - 0.001% on average.
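The soft winner-take-all behaviour of a hypercolumn can be illustrated with a normalised exponential (softmax) over the support values of its minicolumns. This is a minimal sketch, not a definitive implementation: the use of a softmax, the function name `soft_wta`, the `gain` parameter and the input values are all assumptions made for illustration.

```python
import math

def soft_wta(support, gain=1.0):
    """Softmax over the minicolumn support values of one hypercolumn."""
    peak = max(support)  # subtract the maximum for numerical stability
    exps = [math.exp(gain * (s - peak)) for s in support]
    total = sum(exps)
    return [e / total for e in exps]

# One hypothetical hypercolumn with 100 minicolumns; unit 7 gets the strongest input.
support = [0.0] * 100
support[7] = 8.0
activity = soft_wta(support)

# The activities sum to 1, and most of that mass sits on the winning minicolumn.
winner = max(range(len(activity)), key=activity.__getitem__)
print(winner, round(activity[winner], 3), round(sum(activity), 3))
```

With one dominant minicolumn out of a hundred, roughly 1% of the units in the hypercolumn are strongly active, which matches the sparsity level described above.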

Bayesian-Hebbian learning rule

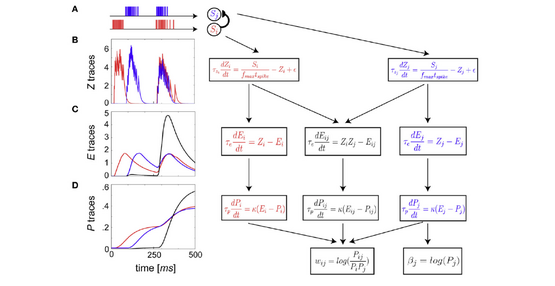

The BCPNN learning rule was derived from Bayes' rule and is Hebbian: neural units whose activity is correlated over time get excitatory connections between them, anti-correlation generates inhibition, and lack of correlation gives zero connections. The independence assumptions are the same as in the naïve Bayes formalism. BCPNN represents a straightforward way of deriving a neural network from Bayes' rule.[2][3][17] To allow the use of the standard equation for propagating activity between neurons, a transformation to log space was necessary. The basic equations for the intrinsic excitability $\beta_j$ of a postsynaptic unit and the synaptic weight $w_{ij}$ between pre- and postsynaptic units are:

$$\beta_j = \log P(y_j), \qquad w_{ij} = \log \frac{P(x_i, y_j)}{P(x_i)\,P(y_j)}$$

where the activation and co-activation probabilities are estimated from the training set, which can be done e.g. by exponentially weighted moving averages (see Figure).
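As a rough illustration of the rule, the sketch below estimates the activation and co-activation probabilities by simple counting over a tiny training set, takes the log of the output probability as the bias and the log of the ratio between the co-activation probability and the product of the marginals as the weight, and then propagates activity in log space. The function names, the toy data, the probability floor that keeps the logarithms finite, and the softmax normalisation are assumptions made for this example.

```python
import math

def train(inputs, targets, n_out, floor=1e-3):
    """Estimate probabilities by counting, then take logs."""
    n, n_in = len(inputs), len(inputs[0])
    # Relative frequencies, floored so that no logarithm sees zero (an assumption).
    p_i = [max(sum(x[i] for x in inputs) / n, floor) for i in range(n_in)]
    p_j = [max(targets.count(j) / n, floor) for j in range(n_out)]
    beta = [math.log(p) for p in p_j]  # bias: log of the output probability
    w = [[0.0] * n_out for _ in range(n_in)]
    for i in range(n_in):
        for j in range(n_out):
            c_ij = sum(x[i] for x, y in zip(inputs, targets) if y == j)
            p_ij = max(c_ij / n, floor ** 2)
            w[i][j] = math.log(p_ij / (p_i[i] * p_j[j]))  # point-wise mutual information
    return beta, w

def posterior(x, beta, w):
    """Support s_j = beta_j + sum_i x_i * w_ij, normalised with a softmax."""
    s = [b + sum(xi * w[i][j] for i, xi in enumerate(x)) for j, b in enumerate(beta)]
    peak = max(s)
    e = [math.exp(v - peak) for v in s]
    return [v / sum(e) for v in e]

# Toy data: input unit 0 always co-occurs with class 0, input unit 1 with class 1.
inputs = [[1, 0], [1, 0], [0, 1], [0, 1]]
targets = [0, 0, 1, 1]
beta, w = train(inputs, targets, n_out=2)
post = posterior([1, 0], beta, w)
print(beta, w, post)
```

In this toy case the correlated pair (input 0, class 0) gets a positive weight of log 2, while the anti-correlated pair (input 0, class 1) gets a strongly negative weight, in line with the Hebbian description above.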

There have been proposals for a biological interpretation of the BCPNN learning rule. $Z_i$ may represent binding of glutamate to NMDA receptors, whereas $Z_j$ could represent a back-propagating action potential reaching the synapse. The conjunction of these events leads to Ca²⁺ influx via NMDA channels, CaMKII activation, AMPA channel phosphorylation, and eventually enhanced synaptic conductance.

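The $Z$ traces are further filtered into the $E$ traces, which serve as temporal buffers, eligibility traces or synaptic tags, and these are in turn filtered into the $P$ traces that determine the bias and weight values.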
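The cascade of traces can be sketched as a chain of first-order low-pass filters, which in discrete time amount to exponentially weighted moving averages. The sketch below assumes the trace names Z, E and P used in the spiking BCPNN literature and a simplified Euler update; the time constants, the gain `KAPPA`, the floor `EPS`, the initial values and the input pattern are hypothetical values chosen only for illustration.

```python
import math

def low_pass(state, target, tau, dt=1.0, gain=1.0):
    """One Euler step of tau * d(state)/dt = gain * (target - state)."""
    return state + gain * dt * (target - state) / tau

TAU_Z, TAU_E, TAU_P = 10.0, 100.0, 1000.0  # hypothetical time constants (ms)
KAPPA = 1.0   # hypothetical gain on the slowest (P) stage, acting as a learning rate
EPS = 1e-4    # hypothetical floor that keeps the logarithms finite

z_i = z_j = e_i = e_j = e_ij = 0.0
p_i = p_j = 0.01
p_ij = p_i * p_j  # start from independence, so the initial weight is zero

for t in range(5000):
    # Both units are active together during the first 20 ms of every 100 ms.
    s_i = s_j = 1.0 if t % 100 < 20 else 0.0
    z_i = low_pass(z_i, s_i, TAU_Z)            # fast traces of the raw activity
    z_j = low_pass(z_j, s_j, TAU_Z)
    e_i = low_pass(e_i, z_i, TAU_E)            # intermediate buffer traces
    e_j = low_pass(e_j, z_j, TAU_E)
    e_ij = low_pass(e_ij, z_i * z_j, TAU_E)    # co-activation trace
    p_i = low_pass(p_i, e_i, TAU_P, gain=KAPPA)    # slow probability estimates
    p_j = low_pass(p_j, e_j, TAU_P, gain=KAPPA)
    p_ij = low_pass(p_ij, e_ij, TAU_P, gain=KAPPA)

beta_j = math.log(max(p_j, EPS))
w_ij = math.log(max(p_ij, EPS ** 2) / (max(p_i, EPS) * max(p_j, EPS)))
print(round(p_i, 3), round(p_ij, 3), round(beta_j, 3), round(w_ij, 3))
```

Because the two units are always active together, the co-activation estimate ends up larger than the product of the individual estimates, and the resulting weight is positive (excitatory).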

Models of brain systems and functions

The cortex-inspired modular architecture of BCPNN has been the basis for several spiking neural network models of cortex aimed at studying its associative memory functions. In these models, a minicolumn comprises about 30 model pyramidal cells, and a hypercolumn comprises ten or more such minicolumns together with a population of basket cells that mediate local feedback inhibition. A modelled network is composed of about ten or more such hypercolumns. Connectivity is excitatory within minicolumns and supports feedback inhibition between minicolumns in the same hypercolumn via model basket cells. Long-range connectivity between hypercolumns is sparse and excitatory and is typically set up to form a number of distributed cell assemblies representing earlier encoded memories. Neuron and synapse properties have been tuned to represent their real counterparts in terms of, for example, spike frequency adaptation and fast non-Hebbian synaptic plasticity.

These cortical models have mainly been used to provide a better understanding of the mechanisms underlying cortical dynamics and the oscillatory structure associated with different activity states.[18] Cortical oscillations ranging from theta through alpha and beta to gamma are generated by this model. The embedded memories can be recalled from partial input, and when activated they show signs of fixed-point attractor dynamics, though neural adaptation and synaptic depression terminate activity within some hundred milliseconds. Notably, a few cycles of gamma oscillations are generated during such a brief memory recall. Cognitive phenomena such as attentional blink and its modulation by benzodiazepine have also been replicated in this model.[19]

In recent years, Hebbian plasticity has been incorporated into this cortex model and simulated with abstract non-spiking as well as spiking neural units.[17] This made it possible to demonstrate online learning of temporal sequences[20] as well as one-shot encoding and immediate recall in human word list learning.[12] These findings further led to the proposal and investigation of a novel theory of working memory based on fast Hebbian synaptic plasticity.[13]

A similar approach was applied to model reward learning and behavior selection in Go-NoGo connected non-spiking and spiking neural network models of the basal ganglia.[14][21]

Machine learning applications

The point-wise mutual information weights of BCPNN have long been one of the standard methods for detecting adverse drug reactions.[11]
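To make the idea concrete, the sketch below computes a point-wise mutual information score for one drug and reaction pair from report counts. The function name, the use of a base-2 logarithm and all counts are assumptions made for illustration. Practical signal-detection systems typically add priors or shrinkage to handle small counts, which this sketch omits, so it fails if the pair has never been reported together.

```python
import math

def information_component(n_xy, n_x, n_y, n_total):
    """log2 of the observed over the expected co-reporting frequency."""
    p_xy = n_xy / n_total  # fraction of reports mentioning both drug and reaction
    p_x = n_x / n_total    # fraction of reports mentioning the drug
    p_y = n_y / n_total    # fraction of reports mentioning the reaction
    return math.log2(p_xy / (p_x * p_y))

# Hypothetical report counts from a spontaneous-reporting database.
n_total = 100_000
n_drug = 1_000
n_reaction = 500
n_both = 40

# Under independence, 1000 * 500 / 100000 = 5 joint reports would be expected;
# 40 were observed, a ratio of 8, so the score is about 3 bits.
ic = information_component(n_both, n_drug, n_reaction, n_total)
print(round(ic, 3))
```

A score well above zero marks a pair that is reported together more often than independence would predict, which is what flags it for further review.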

BCPNN has recently been applied successfully to machine learning classification benchmarks, most notably the handwritten digits of the MNIST database. The BCPNN approach uses biologically plausible learning and structural plasticity for unsupervised generation of a sparse hidden representation, followed by a one-layer classifier that associates this representation with the output layer.[10] It achieves a classification accuracy of around 98% on the full MNIST test set, comparable to other methods based on unsupervised representation learning.[22] This is slightly lower than the performance of the best methods that employ end-to-end error back-propagation. However, that extra performance comes at the cost of lower biological plausibility and higher complexity of the learning machinery. The BCPNN method is also well suited for semi-supervised learning.

Hardware designs for BCPNN

The structure of BCPNN, with its cortex-like modular architecture and massively parallel correlation-based Hebbian learning, makes it quite hardware friendly. Implementations with a reduced number of bits in the synaptic state variables have been shown to be feasible.[23] BCPNN has further been the target for parallel simulators on cluster computers and GPUs. It was recently implemented on the SpiNNaker compute platform[24] as well as in a series of dedicated neuromorphic VLSI designs.[25][26][27][28] From these it has been estimated that a human-cortex-sized BCPNN with continuous learning could be executed in real time with a power dissipation on the order of a few kW.

References

- .

- PMID 8823623.

- S2CID 218898276.

- Lansner A (June 1991). "A recurrent bayesian ANN capable of extracting prototypes from unlabeled and noisy examples". Artificial Neural Networks. Proceedings of the 1991 International Conference on Artificial Neural Networks (ICANN-91). Vol. 1–2. Espoo, Finland: Elsevier.

- Lansner, Anders (1986). Investigations into the Pattern Processing Capabilities of Associative Nets. KTH Royal Institute of Technology.

- PMID 9861988.

- ISSN 0954-898X.

- S2CID 11912288.

- S2CID 2044858.

- .

- .

- PMID 28053032.

- PMID 32127347.

- PMID 23060764.

- PMID 31502234.

- S2CID 14848878.

- PMID 24782758.

- PMID 20532199.

- PMID 21625630.

- PMID 27213810.

- PMID 27493625.

- arXiv:2005.03476 [cs.NE].

- PMID 25657618.

- PMID 27092061.

- S2CID 15069505.

- S2CID 18476370.

- S2CID 207870792.

- PMID 32982673.