t-distributed stochastic neighbor embedding

| Part of a series on Statistics |

| Data and information visualization |

|---|

| Major dimensions |

|

| Important figures |

| Information graphic types |

| Related topics |

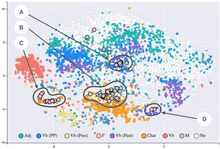

t-distributed stochastic neighbor embedding (t-SNE) is a

The t-SNE algorithm comprises two main stages. First, t-SNE constructs a

t-SNE has been used for visualization in a wide range of applications, including

While t-SNE plots often seem to display clusters, the visual clusters can be influenced strongly by the chosen parameterization and therefore a good understanding of the parameters for t-SNE is necessary. Such "clusters" can be shown to even appear in non-clustered data,[11] and thus may be false findings. Interactive exploration may thus be necessary to choose parameters and validate results.[12][13] It has been demonstrated that t-SNE is often able to recover well-separated clusters, and with special parameter choices, approximates a simple form of spectral clustering.[14]

For a data set with n elements, t-SNE runs in O(n2) time and requires O(n2) space.[15]

Details

Given a set of high-dimensional objects , t-SNE first computes probabilities that are proportional to the similarity of objects and , as follows.

For , define

and set . Note the above denominator ensures for all .

As van der Maaten and Hinton explained: "The similarity of datapoint to datapoint is the conditional probability, , that would pick as its neighbor if neighbors were picked in proportion to their probability density under a Gaussian centered at ."[2]

Now define

This is motivated because and from the N samples are estimated as 1/N, so the conditional probability can be written as and . Since , you can obtain previous formula.

Also note that and .

The bandwidth of the

Since the Gaussian kernel uses the Euclidean distance , it is affected by the curse of dimensionality, and in high dimensional data when distances lose the ability to discriminate, the become too similar (asymptotically, they would converge to a constant). It has been proposed to adjust the distances with a power transform, based on the intrinsic dimension of each point, to alleviate this.[16]

t-SNE aims to learn a -dimensional map (with and typically chosen as 2 or 3) that reflects the similarities as well as possible. To this end, it measures similarities between two points in the map and , using a very similar approach. Specifically, for , define as

and set . Herein a heavy-tailed

The locations of the points in the map are determined by minimizing the (non-symmetric) Kullback–Leibler divergence of the distribution from the distribution , that is:

The minimization of the Kullback–Leibler divergence with respect to the points is performed using gradient descent. The result of this optimization is a map that reflects the similarities between the high-dimensional inputs.

Software

- The R package Rtsne implements t-SNE in R.

- ELKI contains tSNE, also with Barnes-Hut approximation

- scikit-learn, a popular machine learning library in Python implements t-SNE with both exact solutions and the Barnes-Hut approximation.

- Tensorboard, the visualization kit associated with TensorFlow, also implements t-SNE (online version)

References

- Neural Information Processing Systems.

- ^ a b van der Maaten, L.J.P.; Hinton, G.E. (Nov 2008). "Visualizing Data Using t-SNE" (PDF). Journal of Machine Learning Research. 9: 2579–2605.

- ^ Gashi, I.; Stankovic, V.; Leita, C.; Thonnard, O. (2009). "An Experimental Study of Diversity with Off-the-shelf AntiVirus Engines". Proceedings of the IEEE International Symposium on Network Computing and Applications: 4–11.

- ^ Hamel, P.; Eck, D. (2010). "Learning Features from Music Audio with Deep Belief Networks". Proceedings of the International Society for Music Information Retrieval Conference: 339–344.

- PMID 20175497.

- PMID 19153135.

- S2CID 67926902.

- ISBN 978-3-319-46681-1.

- S2CID 208329378.

- S2CID 8074617.

- ^ "K-means clustering on the output of t-SNE". Cross Validated. Retrieved 2018-04-16.

- S2CID 353336.

- . Retrieved 4 December 2017.

- arXiv:1706.02582 [cs.LG].

- ^ Pezzotti, Nicola. "Approximated and User Steerable tSNE for Progressive Visual Analytics" (PDF). Retrieved 31 August 2023.

- .

External links

- Visualizing Data Using t-SNE, Google Tech Talk about t-SNE

- Implementations of t-SNE in various languages, A link collection maintained by Laurens van der Maaten