Data compression: Difference between revisions


Minor historical points added for lossless and lossy data compression

Line 24:

[[Lossless data compression]] [[algorithm]]s usually exploit [[Redundancy (information theory)|statistical redundancy]] to represent data without losing any [[Self-information|information]], so that the process is reversible. Lossless compression is possible because most real-world data exhibits statistical redundancy. For example, an image may have areas of color that do not change over several pixels; instead of coding "red pixel, red pixel, ..." the data may be encoded as "279 red pixels". This is a basic example of [[run-length encoding]]; there are many schemes to reduce file size by eliminating redundancy.
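The "279 red pixels" idea can be illustrated with a small run-length encoder. This is a minimal sketch in Python, assuming runs are stored as (count, symbol) pairs; real formats that use RLE pack counts into fixed-width fields and treat short runs differently.

```python
from itertools import groupby
from typing import Hashable, List, Sequence, Tuple


def rle_encode(data: Sequence[Hashable]) -> List[Tuple[int, Hashable]]:
    """Collapse each run of identical symbols into a (count, symbol) pair."""
    return [(len(list(group)), symbol) for symbol, group in groupby(data)]


def rle_decode(runs: List[Tuple[int, Hashable]]) -> List[Hashable]:
    """Expand (count, symbol) pairs back into the original sequence."""
    out: List[Hashable] = []
    for count, symbol in runs:
        out.extend([symbol] * count)
    return out


# A row of pixels: 279 red pixels followed by 3 blue ones.
row = ["red"] * 279 + ["blue"] * 3
runs = rle_encode(row)
print(runs)  # [(279, 'red'), (3, 'blue')]
assert rle_decode(runs) == row  # lossless: decoding restores the input exactly
```

On data with few repeated neighbors, every symbol becomes a pair, so naive RLE can make the output larger than the input.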

The [[Lempel–Ziv]] (LZ) compression methods are among the most popular algorithms for lossless storage.<ref>{{cite journal|last=Navqi|first=Saud|author2=Naqvi, R. |author3=Riaz, R.A. |author4= Siddiqui, F. |title=Optimized RTL design and implementation of LZW algorithm for high bandwidth applications|journal=Electrical Review|date=April 2011|volume=2011|issue=4|pages=279–285|url=http://pe.org.pl/articles/2011/4/68.pdf}}</ref> [[DEFLATE (algorithm)|DEFLATE]] is a variation on LZ optimized for decompression speed and compression ratio, but compression can be slow. DEFLATE is used in [[PKZIP]], [[Gzip]], and [[Portable Network Graphics|PNG]]. [[Lempel–Ziv–Welch|LZW]] (Lempel–Ziv–Welch) is used in [[Graphics Interchange Format|GIF]] images. LZ methods use a table-based compression model where table entries are substituted for repeated strings of data. For most LZ methods, this table is generated dynamically from earlier data in the input. The table itself is often [[Huffman coding|Huffman encoded]] (e.g. SHRI, LZX).

The [[Lempel–Ziv]] (LZ) compression methods are among the most popular algorithms for lossless storage.<ref>{{cite journal|last=Navqi|first=Saud|author2=Naqvi, R. |author3=Riaz, R.A. |author4= Siddiqui, F. |title=Optimized RTL design and implementation of LZW algorithm for high bandwidth applications|journal=Electrical Review|date=April 2011|volume=2011|issue=4|pages=279–285|url=http://pe.org.pl/articles/2011/4/68.pdf}}</ref> [[DEFLATE (algorithm)|DEFLATE]] is a variation on LZ optimized for decompression speed and compression ratio, but compression can be slow. DEFLATE is used in [[PKZIP]], [[Gzip]], and [[Portable Network Graphics|PNG]]. In the mid-1980s, following work by [[Terry Welch]], the [[Lempel–Ziv–Welch|LZW]] (Lempel–Ziv–Welch) algorithm rapidly became the method of choice for most general-purpose compression systems. [[Lempel–Ziv–Welch|LZW]] is used in [[Graphics Interchange Format|GIF]] images, programs such as PKZIP, and hardware devices such as modems.<ref>{{cite book|last=Wolfram|first=Stephen|title=A New Kind of Science|publisher=Wolfram Media, Inc.|year=2002|page=1069|isbn=1-57955-008-8}}</ref> LZ methods use a table-based compression model where table entries are substituted for repeated strings of data. For most LZ methods, this table is generated dynamically from earlier data in the input. The table itself is often [[Huffman coding|Huffman encoded]] (e.g. SHRI, LZX).
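The dynamically built table can be made concrete with LZW, the LZ variant named above. The sketch below is a simplified, hypothetical implementation. It starts with the 256 single-byte strings, learns one new string for each emitted code, and returns plain integer codes. Real formats such as GIF also cap the table size and pack codes into variable-width bit fields, and this sketch omits both.

```python
from typing import Dict, List


def lzw_compress(data: bytes) -> List[int]:
    # Start with one table entry per possible byte value (codes 0-255).
    table: Dict[bytes, int] = {bytes([i]): i for i in range(256)}
    current = b""
    codes: List[int] = []
    for byte in data:
        candidate = current + bytes([byte])
        if candidate in table:
            current = candidate               # keep extending the longest known string
        else:
            codes.append(table[current])      # emit the code for the longest match
            table[candidate] = len(table)     # learn the new string dynamically
            current = bytes([byte])
    if current:
        codes.append(table[current])
    return codes


def lzw_decompress(codes: List[int]) -> bytes:
    if not codes:
        return b""
    table: Dict[int, bytes] = {i: bytes([i]) for i in range(256)}
    previous = table[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in table:
            entry = table[code]
        elif code == len(table):
            # Special case: the code refers to the entry being defined right now.
            entry = previous + previous[:1]
        else:
            raise ValueError(f"invalid LZW code {code}")
        out.append(entry)
        # Rebuild the same table entry the compressor added at this step.
        table[len(table)] = previous + entry[:1]
        previous = entry
    return b"".join(out)


text = b"TOBEORNOTTOBEORTOBEORNOT"
codes = lzw_compress(text)
print(len(text), len(codes))
assert lzw_decompress(codes) == text
```

The decoder never receives the table. It rebuilds the same entries from the code stream, which is why it needs the special case for a code whose entry is still being defined.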

Current LZ-based coding schemes that perform well are [[Brotli]] and [[LZX (algorithm)|LZX]]. LZX is used in Microsoft's [[cabinet (file format)|CAB]] format.{{citation needed|date=March 2017}}

Line 34:

== Lossy ==

[[Lossy data compression]] is the converse of [[lossless data compression]]. In these schemes, some loss of information is acceptable. Dropping nonessential detail from the data source can save storage space. Lossy data compression schemes are designed using research on how people perceive the data in question. For example, the human eye is more sensitive to subtle variations in [[luminance]] than it is to the variations in color. [[JPEG]] [[image compression]] works in part by rounding off nonessential bits of information.<ref>{{cite web|last=Arcangel|first=Cory|title=On Compression|url=http://www.coryarcangel.com/downloads/Cory-Arcangel-OnC.pdf|accessdate=6 March 2013}}</ref> There is a corresponding [[trade-off]] between preserving information and reducing size. A number of popular compression formats exploit these perceptual differences, including [[Psychoacoustics|those used in music]] files, images, and video.

[[Lossy data compression]] is the converse of [[lossless data compression]]. In the late 1980s, digital images became more common, and standards for compressing them emerged. In the early 1990s, lossy compression methods began to be widely used.<ref>{{cite book|last=Wolfram|first=Stephen|title=A New Kind of Science|publisher=Wolfram Media, Inc.|year=2002|page=1069|isbn=1-57955-008-8}}</ref> In these schemes, some loss of information is acceptable. Dropping nonessential detail from the data source can save storage space. Lossy data compression schemes are designed using research on how people perceive the data in question. For example, the human eye is more sensitive to subtle variations in [[luminance]] than it is to the variations in color. [[JPEG]] [[image compression]] works in part by rounding off nonessential bits of information.<ref>{{cite web|last=Arcangel|first=Cory|title=On Compression|url=http://www.coryarcangel.com/downloads/Cory-Arcangel-OnC.pdf|accessdate=6 March 2013}}</ref> There is a corresponding [[trade-off]] between preserving information and reducing size. A number of popular compression formats exploit these perceptual differences, including [[Psychoacoustics|those used in music]] files, images, and video.
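"Rounding off nonessential bits" can be made concrete. JPEG transforms blocks of samples with a discrete cosine transform (DCT) and then divides and rounds the coefficients. The sketch below simplifies this under stated assumptions. It applies a 1-D orthonormal DCT to a single row of eight luminance samples and uses one hypothetical quantization step (STEP) for every coefficient. JPEG itself works on 8×8 blocks with a per-frequency quantization table.

```python
import math
from typing import List


def dct_ii(x: List[float]) -> List[float]:
    """Orthonormal 1-D DCT-II."""
    n = len(x)
    out = []
    for k in range(n):
        scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
        out.append(scale * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i in range(n)))
    return out


def idct_ii(c: List[float]) -> List[float]:
    """Inverse of dct_ii (an orthonormal DCT-III)."""
    n = len(c)
    out = []
    for i in range(n):
        total = 0.0
        for k in range(n):
            scale = math.sqrt(1 / n) if k == 0 else math.sqrt(2 / n)
            total += scale * c[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
        out.append(total)
    return out


STEP = 10.0  # hypothetical single quantization step; JPEG uses a per-frequency table

row = [52, 55, 61, 66, 70, 61, 64, 73]             # one row of 8-bit luminance samples
coeffs = dct_ii(row)
quantized = [round(c / STEP) for c in coeffs]       # the lossy "rounding off" step
restored = idct_ii([q * STEP for q in quantized])   # what a decoder reconstructs
print(quantized)
print([round(v) for v in restored])
```

Rounding is the only lossy step, because the transform itself is exactly invertible. Coefficients that round to zero, which for smooth image content are typically the high-frequency ones, are cheap to store after entropy coding.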

Lossy [[image compression]] can be used in [[digital camera]]s, to increase storage capacities with minimal degradation of picture quality. Similarly, [[DVD]]s use the lossy [[MPEG-2]] [[video coding format]] for [[video compression]].

Revision as of 19:10, 27 March 2018

In signal processing, data compression, source coding,[1] or bit-rate reduction involves encoding information using fewer bits than the original representation.[2] Compression can be either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information.[3]

The process of reducing the size of a

Compression is useful because it reduces the resources required to store and transmit data.

Lossless

The

The best modern lossless compressors use

The class of

In a further refinement of the direct use of

Lossy

Lossy

In lossy

Theory

The theoretical background of compression is provided by information theory (which is closely related to algorithmic information theory) for lossless compression and rate–distortion theory for lossy compression. These areas of study were essentially forged by Claude Shannon, who published fundamental papers on the topic in the late 1940s and early 1950s. Coding theory is also related to these fields. The idea of data compression is also deeply connected with statistical inference.[14]

Machine learning

There is a close connection between

Feature space vectors

However, a new, alternative view can show compression algorithms implicitly map strings into implicit

Data differencing

Data compression can be viewed as a special case of

When one wishes to emphasize the connection, one may use the term differential compression to refer to data differencing.
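One rough way to see data differencing in practice is to compress a target while the compressor already "knows" a source. The sketch below assumes Python's standard zlib module and uses its preset-dictionary feature (the zdict argument) as a simple stand-in for a dedicated differencing format such as VCDIFF (RFC 3284, cited in the references). The helper names delta_compress and delta_decompress are hypothetical.

```python
import random
import zlib


def delta_compress(source: bytes, target: bytes) -> bytes:
    """Compress `target` using `source` as a preset DEFLATE dictionary."""
    comp = zlib.compressobj(level=9, zdict=source)
    return comp.compress(target) + comp.flush()


def delta_decompress(source: bytes, delta: bytes) -> bytes:
    """Rebuild the target; the decoder must hold the same `source`."""
    decomp = zlib.decompressobj(zdict=source)
    return decomp.decompress(delta) + decomp.flush()


rng = random.Random(0)
source = bytes(rng.getrandbits(8) for _ in range(4000))  # incompressible on its own
target = source[:2000] + b"PATCH" + source[2000:]         # a small edit of the source

plain = zlib.compress(target, 9)
delta = delta_compress(source, target)
print(len(plain), len(delta))
assert delta_decompress(source, delta) == target
```

Both sides must hold an identical copy of the source. Because DEFLATE can only refer back 32 KiB, this trick only suits small sources. Dedicated differencing formats are designed to handle much larger ones.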

Uses

Audio

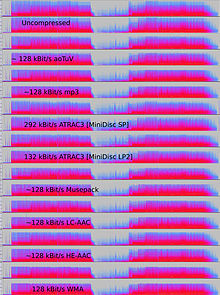

Audio data compression, not to be confused with dynamic range compression, has the potential to reduce the transmission bandwidth and storage requirements of audio data. Audio compression algorithms are implemented in software as audio codecs. Lossy audio compression algorithms provide higher compression at the cost of fidelity and are used in numerous audio applications. These algorithms almost all rely on psychoacoustics to eliminate or reduce fidelity of less audible sounds, thereby reducing the space required to store or transmit them.[2]

In both lossy and lossless compression, information redundancy is reduced, using methods such as coding, pattern recognition, and linear prediction to reduce the amount of information used to represent the uncompressed data.
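Linear prediction is easiest to see in a lossless setting. Each sample is predicted from the previous ones, and only the prediction error (the residual) is kept. For smooth audio the residual is small and can be entropy-coded in fewer bits. This minimal sketch uses a fixed second-order predictor, similar in spirit to the fixed polynomial predictors offered by lossless coders such as FLAC. The test signal is hypothetical, and the entropy-coding stage is omitted.

```python
import math
from typing import List


def _guess(history: List[int], n: int) -> int:
    """Fixed second-order prediction s[n] ~ 2*s[n-1] - s[n-2], with warm-up."""
    if n == 0:
        return 0
    if n == 1:
        return history[0]
    return 2 * history[n - 1] - history[n - 2]


def predict_residuals(samples: List[int]) -> List[int]:
    """Replace each sample by its prediction error."""
    return [s - _guess(samples, n) for n, s in enumerate(samples)]


def reconstruct(residuals: List[int]) -> List[int]:
    """Invert predict_residuals exactly (integer arithmetic, so nothing is lost)."""
    samples: List[int] = []
    for n, r in enumerate(residuals):
        samples.append(r + _guess(samples, n))
    return samples


# A slowly varying, 16-bit-style test waveform (hypothetical).
signal = [round(10000 * math.sin(2 * math.pi * n / 200)) for n in range(400)]
res = predict_residuals(signal)
print(max(abs(s) for s in signal), max(abs(r) for r in res[2:]))
assert reconstruct(res) == signal
```

The predictor uses integer arithmetic on samples the decoder has already reconstructed, so the decoder forms exactly the same guesses and reconstruction is bit-exact.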

The acceptable trade-off between loss of audio quality and transmission or storage size depends upon the application. For example, one 640 MB compact disc (CD) holds approximately one hour of uncompressed high-fidelity music, less than 2 hours of music compressed losslessly, or 7 hours of music compressed in the MP3 format at a medium bit rate. A digital sound recorder can typically store around 200 hours of clearly intelligible speech in 640 MB.[21]
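These figures follow from simple bit-rate arithmetic. The sketch below assumes 1 MB = 2^20 bytes, CD-quality PCM (44.1 kHz, 16 bits, two channels), and a "medium" MP3 bit rate of 192 kbit/s. The byte convention and the MP3 rate are assumptions, not values from the text.

```python
# Assumptions (not from the text): 1 MB = 2**20 bytes and a "medium"
# MP3 bit rate of 192 kbit/s. CD audio is 44.1 kHz, 16-bit, stereo PCM.
CAPACITY_BITS = 640 * 2**20 * 8


def hours_at(bit_rate_bps: float) -> float:
    """Playing time in hours for a stream of the given bit rate."""
    return CAPACITY_BITS / bit_rate_bps / 3600


cd_rate = 44_100 * 16 * 2                    # 1,411,200 bit/s of uncompressed PCM
mp3_rate = 192_000                           # hypothetical medium MP3 bit rate
speech_rate = CAPACITY_BITS / (200 * 3600)   # rate implied by "200 hours in 640 MB"

print(f"PCM:    {hours_at(cd_rate):.2f} h")
print(f"MP3:    {hours_at(mp3_rate):.2f} h")
print(f"speech: {speech_rate / 1000:.1f} kbit/s")
```

With these assumptions the disc holds about 1.06 hours of PCM and about 7.8 hours of 192 kbit/s MP3. Storing 200 hours of speech implies an average rate of roughly 7.5 kbit/s.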

Lossless audio compression produces a representation of digital data that decompresses to an exact digital duplicate of the original audio stream, unlike playback from lossy compression techniques such as

When audio files are to be processed, either by further compression or for

A number of lossless audio compression formats exist.

Some

Other formats are associated with a distinct system, such as:

- Direct Stream Transfer, used in Super Audio CD

- Meridian Lossless Packing, used in DVD-Audio, Dolby TrueHD, Blu-ray and HD DVD

Lossy audio compression

Lossy audio compression is used in a wide range of applications. In addition to the direct applications (MP3 players or computers), digitally compressed audio streams are used in most video DVDs, digital television, streaming media on the internet, satellite and cable radio, and increasingly in terrestrial radio broadcasts. By discarding less-critical data, lossy compression typically achieves far greater compression than lossless compression, reducing the data to 5 to 20 percent of the original stream rather than 50 to 60 percent.[23]

The innovation of lossy audio compression was to use psychoacoustics to recognize that not all data in an audio stream can be perceived by the human auditory system. Most lossy compression reduces perceptual redundancy by first identifying perceptually irrelevant sounds, that is, sounds that are very hard to hear. Typical examples include high frequencies or sounds that occur at the same time as louder sounds. Those sounds are coded with decreased accuracy or not at all.

Due to the nature of lossy algorithms,

Coding methods

To determine what information in an audio signal is perceptually irrelevant, most lossy compression algorithms use transforms such as the

Other types of lossy compressors, such as the linear predictive coding (LPC) used with speech, are source-based coders. These coders use a model of the sound's generator (such as the human vocal tract with LPC) to whiten the audio signal (i.e., flatten its spectrum) before quantization. LPC may be thought of as a basic perceptual coding technique: reconstruction of an audio signal using a linear predictor shapes the coder's quantization noise into the spectrum of the target signal, partially masking it.[23]
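The source-based idea works in two steps. First, estimate a linear predictor from the signal's autocorrelation. Here this is done with the Levinson–Durbin recursion, one standard way to solve the normal equations. Second, subtract the prediction to obtain a residual with a flatter, "whitened" spectrum and much less energy. This is a minimal sketch that assumes a synthetic second-order autoregressive signal as a stand-in for speech. It omits the quantization of the residual and coefficients that a real LPC coder performs.

```python
import random
from typing import List, Tuple


def autocorrelation(x: List[float], max_lag: int) -> List[float]:
    """r[lag] = sum over n of x[n] * x[n - lag], for lag = 0..max_lag."""
    return [sum(x[n] * x[n - lag] for n in range(lag, len(x)))
            for lag in range(max_lag + 1)]


def levinson_durbin(r: List[float], order: int) -> Tuple[List[float], float]:
    """Find a[1..order] minimizing the error of x[n] ~ sum_k a[k] * x[n - k].

    Returns the coefficients and the final prediction-error energy."""
    a = [0.0] * (order + 1)  # a[0] is unused padding
    error = r[0]
    for i in range(1, order + 1):
        acc = r[i] - sum(a[k] * r[i - k] for k in range(1, i))
        reflection = acc / error
        updated = a[:]
        updated[i] = reflection
        for k in range(1, i):
            updated[k] = a[k] - reflection * a[i - k]
        a = updated
        error *= 1.0 - reflection ** 2
    return a[1:], error


def lpc_residual(x: List[float], coeffs: List[float]) -> List[float]:
    """Prediction error e[n] = x[n] - sum_k a[k] * x[n - k] (the whitened signal)."""
    p = len(coeffs)
    return [x[n] - sum(coeffs[k] * x[n - 1 - k] for k in range(p))
            for n in range(p, len(x))]


# Hypothetical stand-in for a voiced sound: a resonant second-order
# autoregressive process x[n] = 1.6 x[n-1] - 0.8 x[n-2] + white noise.
rng = random.Random(1)
x = [0.0, 0.0]
for _ in range(2000):
    x.append(1.6 * x[-1] - 0.8 * x[-2] + rng.gauss(0.0, 1.0))

coeffs, _ = levinson_durbin(autocorrelation(x, 2), order=2)
residual = lpc_residual(x, coeffs)
signal_energy = sum(v * v for v in x) / len(x)
residual_energy = sum(e * e for e in residual) / len(residual)
print(coeffs, residual_energy / signal_energy)
```

The estimated coefficients come out close to those of the generator (1.6 and -0.8 here). This mirrors the role the vocal-tract model plays in speech LPC.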

Lossy formats are often used for the distribution of streaming audio or interactive applications (such as the coding of speech for digital transmission in cell phone networks). In such applications, the data must be decompressed as the data flows, rather than after the entire data stream has been transmitted. Not all audio codecs can be used for streaming applications, and for such applications a codec designed to stream data effectively will usually be chosen.[23]

Latency results from the methods used to encode and decode the data. Some codecs analyze a longer segment of the data to optimize efficiency, and then code it in a manner that requires a larger segment of data at one time to decode. (Codecs often divide the data into discrete segments called "frames" for encoding and decoding.) The inherent latency of the coding algorithm can be critical; for example, when there is a two-way transmission of data, such as with a telephone conversation, significant delays may seriously degrade the perceived quality.

In contrast to the speed of compression, which is proportional to the number of operations required by the algorithm, here latency refers to the number of samples that must be analysed before a block of audio is processed. In the minimum case, latency is zero samples (e.g., if the coder/decoder simply reduces the number of bits used to quantize the signal). Time domain algorithms such as LPC also often have low latencies, hence their popularity in speech coding for telephony. In algorithms such as MP3, however, a large number of samples have to be analyzed to implement a psychoacoustic model in the frequency domain, and latency is on the order of 23 ms (46 ms for two-way communication).
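The latency described above is essentially buffering time. A coder that analyses a block of N samples at sample rate f_s must wait N / f_s seconds before it can emit anything for that block. The block size of 1024 samples below is an illustrative assumption, not the frame size of any particular codec. Real codecs add further delay for look-ahead, filter-bank overlap and processing.

```python
def block_latency_ms(block_samples: int, sample_rate_hz: int) -> float:
    """Delay introduced by buffering one analysis block before coding it."""
    return 1000.0 * block_samples / sample_rate_hz


# Hypothetical block size: 1024 samples of 44.1 kHz audio.
one_way = block_latency_ms(1024, 44_100)
print(f"one-way: {one_way:.1f} ms, two-way: {2 * one_way:.1f} ms")
```

With these numbers the one-way delay is about 23.2 ms and the round trip about 46.4 ms, the same order of magnitude as the figures quoted above.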

Speech encoding

If the data to be compressed is analog (such as a voltage that varies with time), quantization is employed to digitize it into numbers (normally integers). This is referred to as analog-to-digital (A/D) conversion. If the integers generated by quantization are 8 bits each, then the entire range of the analog signal is divided into 256 intervals and all the signal values within an interval are quantized to the same number. If 16-bit integers are generated, then the range of the analog signal is divided into 65,536 intervals.

This relation illustrates the compromise between high resolution (a large number of analog intervals) and high compression (small integers generated). This application of quantization is used by several speech compression methods. This is accomplished, in general, by some combination of two approaches:

- Only encoding sounds that could be made by a single human voice.

- Throwing away more of the data in the signal—keeping just enough to reconstruct an "intelligible" voice rather than the full frequency range of human hearing.

Perhaps the earliest algorithms used in speech encoding (and audio data compression in general) were the A-law algorithm and the µ-law algorithm.
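The interval arithmetic and the companding idea behind µ-law can be sketched together. The code below assumes samples normalized to [-1, 1] and quantizes them uniformly into 2^bits intervals. It then compares plain 8-bit quantization of a quiet sample with 8-bit quantization after the continuous µ-law curve (µ = 255). Standard G.711 µ-law uses a segmented piecewise-linear approximation of this curve, which the sketch does not reproduce. The helper names are hypothetical.

```python
import math

MU = 255  # the mu value of G.711 mu-law; the continuous curve is used here


def quantize_uniform(x: float, bits: int) -> int:
    """Map x in [-1, 1] onto one of 2**bits equal intervals."""
    levels = 2 ** bits
    index = int((x + 1) / 2 * levels)
    return min(index, levels - 1)  # x == 1.0 falls into the top interval


def dequantize_uniform(index: int, bits: int) -> float:
    """Return the midpoint of the interval selected by quantize_uniform."""
    levels = 2 ** bits
    return (index + 0.5) / levels * 2 - 1


def mu_law_compress(x: float) -> float:
    """Continuous mu-law curve: expands small amplitudes before quantizing."""
    return math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)


def mu_law_expand(y: float) -> float:
    """Exact inverse of mu_law_compress."""
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)


quiet = 0.002  # a quiet sample, as a fraction of full scale
linear = dequantize_uniform(quantize_uniform(quiet, 8), 8)
companded = mu_law_expand(dequantize_uniform(quantize_uniform(mu_law_compress(quiet), 8), 8))
print(abs(linear - quiet), abs(companded - quiet))
```

Companding spends more of the 256 intervals on small amplitudes, so quiet sounds are reproduced far more accurately for the same 8 bits per sample.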

History

A literature compendium for a large variety of audio coding systems was published in the IEEE Journal on Selected Areas in Communications (JSAC), February 1988. While there were some papers from before that time, this collection documented an entire variety of finished, working audio coders, nearly all of them using perceptual (i.e. masking) techniques and some kind of frequency analysis and back-end noiseless coding.[25] Several of these papers remarked on the difficulty of obtaining good, clean digital audio for research purposes. Most, if not all, of the authors in the JSAC edition were also active in the MPEG-1 Audio committee.

The world's first commercial

Video

Video compression uses modern coding techniques to reduce redundancy in video data. Most video compression algorithms and codecs combine spatial image compression and temporal motion compensation. Video compression is a practical implementation of source coding in information theory. In practice, most video codecs also use audio compression techniques in parallel to compress the separate, but combined data streams as one package.[28]

The majority of video compression algorithms use lossy compression. Uncompressed video requires a very high data rate. Although lossless video compression codecs perform at a compression factor of 5-12, a typical MPEG-4 lossy compression video has a compression factor between 20 and 200.[29] As in all lossy compression, there is a trade-off between video quality, cost of processing the compression and decompression, and system requirements. Highly compressed video may present visible or distracting artifacts.
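To put "a very high data rate" into numbers, the sketch below computes the raw bit rate of a hypothetical 1080p stream and divides it by the compression factors quoted above. The resolution (1920 × 1080 pixels), color depth (24 bits per pixel) and frame rate (30 frames per second) are all assumptions.

```python
# Hypothetical source: 1080p, 8 bits per RGB channel (24 bits/pixel), 30 frames/s.
WIDTH, HEIGHT, BITS_PER_PIXEL, FPS = 1920, 1080, 24, 30

raw_bps = WIDTH * HEIGHT * BITS_PER_PIXEL * FPS


def compressed_mbps(factor: float) -> float:
    """Bit rate in Mbit/s after dividing the raw rate by a compression factor."""
    return raw_bps / factor / 1e6


print(f"raw: {raw_bps / 1e6:.0f} Mbit/s")
for label, factor in [("lossless, 5:1", 5), ("lossless, 12:1", 12),
                      ("lossy, 20:1", 20), ("lossy, 200:1", 200)]:
    print(f"{label:>15}: {compressed_mbps(factor):7.1f} Mbit/s")
```

Under these assumptions the raw stream is about 1.49 Gbit/s. Lossless coding at 5:1 to 12:1 still leaves roughly 124 to 299 Mbit/s, while lossy coding at 20:1 to 200:1 brings it down to roughly 7.5 to 75 Mbit/s.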

Some video compression schemes operate on square-shaped groups of neighboring pixels, often called macroblocks. These blocks of pixels are compared from one frame to the next, and the video compression codec sends only the differences within those blocks. In areas of video with more motion, the compression must encode more data to keep up with the larger number of pixels that are changing. During explosions, flames, flocks of animals, and some panning shots, the high-frequency detail commonly leads to quality decreases or to increases in the variable bitrate.
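The block-by-block comparison can be sketched in three steps: split both frames into square blocks, measure how much each block changed (here with the sum of absolute differences), and mark only the changed blocks for transmission. The 16 × 16 block size matches the luma macroblock of MPEG-2 and H.264. The threshold and frames are hypothetical, and a real codec would also code the differences it sends.

```python
from typing import List, Tuple

Frame = List[List[int]]  # rows of 8-bit luminance values


def changed_blocks(prev: Frame, curr: Frame, block: int = 16,
                   threshold: int = 0) -> List[Tuple[int, int]]:
    """Return (row, col) origins of blocks whose summed absolute difference
    from the previous frame exceeds `threshold`; only these would be re-sent."""
    height, width = len(curr), len(curr[0])
    changed = []
    for by in range(0, height, block):
        for bx in range(0, width, block):
            sad = sum(abs(curr[y][x] - prev[y][x])
                      for y in range(by, min(by + block, height))
                      for x in range(bx, min(bx + block, width)))
            if sad > threshold:
                changed.append((by, bx))
    return changed


prev = [[0] * 64 for _ in range(32)]
curr = [row[:] for row in prev]
curr[20][40] = 255  # one pixel changes, inside the block whose origin is (16, 32)
print(changed_blocks(prev, curr))  # [(16, 32)]
```

A nonzero threshold would let an encoder skip blocks with only tiny changes, trading some quality for fewer bits.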

Encoding theory

Video data may be represented as a series of still image frames. The sequence of frames contains spatial and temporal redundancy that video compression algorithms attempt to eliminate or code in a smaller size. Similarities can be encoded by only storing differences between frames, or by using perceptual features of human vision. For example, small differences in color are more difficult to perceive than are changes in brightness. Compression algorithms can average a color across these similar areas to reduce space, in a manner similar to those used in JPEG image compression.[10] Some of these methods are inherently lossy while others may preserve all relevant information from the original, uncompressed video.
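The color-averaging idea can be sketched as chroma subsampling. RGB is converted to a luminance channel (Y) and two color-difference channels (Cb, Cr). Y is kept at full resolution, and each 2 × 2 group of chroma samples is averaged into one (the 4:2:0 layout). The sketch assumes the full-range conversion coefficients used by JPEG's JFIF format and image dimensions divisible by two. The test image is hypothetical.

```python
from typing import List, Tuple


def rgb_to_ycbcr(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Full-range BT.601 conversion as used by JFIF (JPEG)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def subsample_420(plane: List[List[float]]) -> List[List[float]]:
    """Average each 2x2 block into one value (assumes even width and height)."""
    return [[(plane[y][x] + plane[y][x + 1] + plane[y + 1][x] + plane[y + 1][x + 1]) / 4
             for x in range(0, len(plane[0]), 2)]
            for y in range(0, len(plane), 2)]


# A hypothetical 4x4 image with a smooth color gradient.
pixels = [[(10 * x + 50, 20 * y + 40, 100) for x in range(4)] for y in range(4)]
ycc = [[rgb_to_ycbcr(*p) for p in row] for row in pixels]
luma = [[c[0] for c in row] for row in ycc]                  # kept at full resolution
cb = subsample_420([[c[1] for c in row] for row in ycc])     # quarter resolution
cr = subsample_420([[c[2] for c in row] for row in ycc])

before = 3 * 4 * 4
after = sum(len(r) for r in luma) + sum(len(r) for r in cb) + sum(len(r) for r in cr)
print(before, after)  # 48 24
```

In this example the number of stored samples halves (48 to 24) before any other compression step, while the brightness detail the eye is most sensitive to is kept intact.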

One of the most powerful techniques for compressing video is interframe compression. Interframe compression uses one or more earlier or later frames in a sequence to compress the current frame, while intraframe compression uses only the current frame, effectively being image compression.[30]

The most commonly used method works by comparing each frame in the video with the previous one. If the frame contains areas where nothing has moved, the system simply issues a short command that copies that part of the previous frame, bit-for-bit, into the next one. If sections of the frame move in a simple manner, the compressor emits a (slightly longer) command that tells the decompressor to shift, rotate, lighten, or darken the copy. This longer command is still much shorter than the data intraframe compression would need for the same area. Interframe compression works well for programs that will simply be played back by the viewer, but can cause problems if the video sequence needs to be edited.[31]
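The "shift the copy" command corresponds to a motion vector. The sketch below finds one by exhaustive block matching. For a block of the current frame, it tries every displacement within a small search window in the previous frame and keeps the one with the smallest sum of absolute differences. Block size, search range and test frames are assumptions. The rotate/lighten/darken operations mentioned above are not modeled, and practical encoders use faster searches and also code the remaining difference.

```python
import random
from typing import List, Tuple

Frame = List[List[int]]  # rows of 8-bit luminance samples


def sad(prev: Frame, curr: Frame, by: int, bx: int, dy: int, dx: int, size: int) -> int:
    """Sum of absolute differences between the size x size block of `curr`
    at (by, bx) and the block of `prev` displaced by (dy, dx)."""
    return sum(abs(curr[by + y][bx + x] - prev[by + dy + y][bx + dx + x])
               for y in range(size) for x in range(size))


def best_motion_vector(prev: Frame, curr: Frame, by: int, bx: int,
                       size: int = 4, search: int = 3) -> Tuple[int, int]:
    """Exhaustive block matching: try every shift within +/- `search` pixels
    and keep the one whose displaced block predicts the current block best."""
    height, width = len(prev), len(prev[0])
    best, best_cost = (0, 0), sad(prev, curr, by, bx, 0, 0, size)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            top, left = by + dy, bx + dx
            if top < 0 or left < 0 or top + size > height or left + size > width:
                continue  # candidate would fall outside the previous frame
            cost = sad(prev, curr, by, bx, dy, dx, size)
            if cost < best_cost:
                best, best_cost = (dy, dx), cost
    return best


# Hypothetical test frames: a random texture that moves 1 pixel down, 2 right.
rng = random.Random(0)
prev = [[rng.randrange(256) for _ in range(16)] for _ in range(16)]
curr = [[prev[y - 1][x - 2] if y >= 1 and x >= 2 else 0 for x in range(16)]
        for y in range(16)]

vector = best_motion_vector(prev, curr, by=6, bx=6)
print(vector)  # (-1, -2): copy the block from one row up and two columns left
```

The decoder only needs the vector (plus any residual) to rebuild the block from the previous frame. This is also why cutting out a reference frame breaks the frames that depend on it.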

Because interframe compression copies data from one frame to another, if the original frame is simply cut out (or lost in transmission), the following frames cannot be reconstructed properly. Some video formats, such as DV, compress each frame independently using intraframe compression. Making 'cuts' in intraframe-compressed video is almost as easy as editing uncompressed video: one finds the beginning and ending of each frame, and simply copies bit-for-bit each frame that one wants to keep, and discards the frames one doesn't want. Another difference between intraframe and interframe compression is that, with intraframe systems, each frame uses a similar amount of data. In most interframe systems, certain frames (such as "I frames" in MPEG-2) aren't allowed to copy data from other frames, so they require much more data than other frames nearby.[23]

It is possible to build a computer-based video editor that spots problems caused when I frames are edited out while other frames need them. This has allowed newer formats like HDV to be used for editing. However, this process demands a lot more computing power than editing intraframe compressed video with the same picture quality.

Today, nearly all commonly used video compression methods (e.g., those in standards approved by the

Timeline

The following table is a partial history of international video compression standards.

| Year | Standard | Publisher | Popular implementations |
|---|---|---|---|
| 1984 | H.120 | ITU-T | |
| 1988 | H.261 | ITU-T | Videoconferencing, videotelephony |
| 1993 | MPEG-1 Part 2 | ISO, IEC | Video-CD |
| 1995 | H.262/MPEG-2 Part 2 | ISO, IEC, ITU-T | SVCD |
| 1996 | H.263 | ITU-T | Videoconferencing, videotelephony, video on mobile phones (3GP) |
| 1999 | MPEG-4 Part 2 | ISO, IEC | Video on Internet (DivX, Xvid ...) |
| 2003 | H.264/MPEG-4 AVC | Sony, Panasonic, Samsung, ISO, IEC, ITU-T | iPod Video, Apple TV, videoconferencing |
| 2009 | VC-2 (Dirac) | SMPTE | Video on Internet, HDTV broadcast, UHDTV |
| 2013 | H.265 | ISO, IEC, ITU-T | |

Genetics

Emulation

In order to emulate disc-based consoles such as the PlayStation 2, data compression is desirable to reduce the huge amounts of disk space used by ISOs. For example, Final Fantasy XII (USA) is normally 2.9 gigabytes. With proper compression, it is reduced to around 90% of that size.[36]

Outlook and currently unused potential

It is estimated that the total amount of data that is stored on the world's storage devices could be further compressed with existing compression algorithms by a remaining average factor of 4.5:1.

See also

- Auditory masking

- HTTP compression

- Kolmogorov complexity

- Magic compression algorithm

- Minimum description length

- Modulo-N code

- Range encoding

- Sub-band coding

- Universal code (data compression)

- Vector quantization

References

- ^ Wade, Graham (1994). Signal coding and processing (2 ed.). Cambridge University Press. p. 34. ISBN 978-0-521-42336-6. Retrieved 2011-12-22. The broad objective of source coding is to exploit or remove 'inefficient' redundancy in the PCM source and thereby achieve a reduction in the overall source rate R.

- ^ a b Mahdi, O.A.; Mohammed, M.A.; Mohamed, A.J. (November 2012). "Implementing a Novel Approach an Convert Audio Compression to Text Coding via Hybrid Technique" (PDF). International Journal of Computer Science Issues. 9 (6, No. 3): 53–59. Retrieved 6 March 2013.

- ^ Pujar, J.H.; Kadlaskar, L.M. (May 2010). "A New Lossless Method of Image Compression and Decompression Using Huffman Coding Techniques" (PDF). Journal of Theoretical and Applied Information Technology. 15 (1): 18–23.

- ISBN 9781848000728.

- ^ S. Mittal; J. Vetter (2015), "A Survey Of Architectural Approaches for Data Compression in Cache and Main Memory Systems", IEEE Transactions on Parallel and Distributed Systems, IEEE

- ^ Tank, M.K. (2011). Implementation of Limpel-Ziv algorithm for lossless compression using VHDL. Berlin: Springer. pp. 275–283.

- ^ Navqi, Saud; Naqvi, R.; Riaz, R.A.; Siddiqui, F. (April 2011). "Optimized RTL design and implementation of LZW algorithm for high bandwidth applications" (PDF). Electrical Review. 2011 (4): 279–285.

- ISBN 1-57955-008-8.

- ^ a b Mahmud, Salauddin (March 2012). "An Improved Data Compression Method for General Data" (PDF). International Journal of Scientific & Engineering Research. 3 (3): 2. Retrieved 6 March 2013.

- ^ a b Lane, Tom. "JPEG Image Compression FAQ, Part 1". Internet FAQ Archives. Independent JPEG Group. Retrieved 6 March 2013.

- IEEE. Retrieved 2017-08-12.

- ISBN 1-57955-008-8.

- ^ Arcangel, Cory. "On Compression" (PDF). Retrieved 6 March 2013.

- ^ Marak, Laszlo. "On image compression" (PDF). University of Marne la Vallee. Retrieved 6 March 2013.

- ^ Mahoney, Matt. "Rationale for a Large Text Compression Benchmark". Florida Institute of Technology. Retrieved 5 March 2013.

- ^ Shmilovici, A.; Kahiri, Y.; Ben-Gal, I.; Hauser, S. (2009). "Measuring the Efficiency of the Intraday Forex Market with a Universal Data Compression Algorithm" (PDF). Computational Economics. 33 (2): 131–154.

- ^ Ben-Gal, I. (2008). "On the Use of Data Compression Measures to Analyze Robust Designs" (PDF). IEEE Trans. on Reliability. 54 (3): 381–388.

- ^ Sculley, D.; Brodley, Carla E. (2006). "Compression and machine learning: A new perspective on feature space vectors" (PDF). Data Compression Conference, 2006.

- ^ Korn, D.; et al. "RFC 3284: The VCDIFF Generic Differencing and Compression Data Format". Internet Engineering Task Force. Retrieved 5 March 2013.

- ^ Korn, D.G.; Vo, K.P. (1995), B. Krishnamurthy (ed.), Vdelta: Differencing and Compression, Practical Reusable Unix Software, New York: John Wiley & Sons, Inc.

- ^ The Olympus WS-120 digital speech recorder, according to its manual, can store about 178 hours of speech-quality audio in .WMA format in 500MB of flash memory.

- ^ Coalson, Josh. "FLAC Comparison". Retrieved 6 March 2013.

- ^ ISBN 9788190639675.

- ISBN 9783642126512.

- ^ "File Compression Possibilities". A Brief guide to compress a file in 4 different ways.

- ^ "Summary of some of Solidyne's contributions to Broadcast Engineering". Brief History of Solidyne. Buenos Aires: Solidyne. Archived from the original on 8 March 2013. Retrieved 6 March 2013.

- ^ Zwicker, Eberhard (1967). The Ear As A Communication Receiver. Melville, NY: Acoustical Society of America.

- ^ "Video Coding". Center for Signal and Information Processing Research. Georgia Institute of Technology. Retrieved 6 March 2013.

- ^ Graphics & Media Lab Video Group (2007). Lossless Video Codecs Comparison (PDF). Moscow State University.

- ^ ISBN 9783642126512.

- ^ Bhojani, D.R. "4.1 Video Compression" (PDF). Hypothesis. Retrieved 6 March 2013.

- .

- PMID 22844100.

- PMID 18996942.

- PMID 23793748.

- ^ PCSX2 team (January 8, 2016). "1.4.0 released! Year end report". Retrieved April 27, 2016.

- PMID 21310967. Retrieved 6 March 2013.

External links

- Data Compression Basics (Video)

- Video compression 4:2:2 10-bit and its benefits

- Why does 10-bit save bandwidth (even when content is 8-bit)?

- Which compression technology should be used

- Wiley - Introduction to Compression Theory

- EBU subjective listening tests on low-bitrate audio codecs

- Audio Archiving Guide: Music Formats (Guide for helping a user pick out the right codec)

- MPEG 1&2 video compression intro (pdf format) at the Wayback Machine (archived September 28, 2007)

- hydrogenaudio wiki comparison

- Introduction to Data Compression by Guy E Blelloch from CMU

- HD Greetings - 1080p Uncompressed source material for compression testing and research

- Explanation of lossless signal compression method used by most codecs

- Interactive blind listening tests of audio codecs over the internet

- TestVid - 2,000+ HD and other uncompressed source video clips for compression testing

- Videsignline - Intro to Video Compression

- Data Footprint Reduction Technology[permanent dead link]

- What is Run length Coding in video compression.