Discrete cosine transform


A discrete cosine transform (DCT) expresses a finite sequence of data points in terms of a sum of cosine functions oscillating at different frequencies.

A DCT is a Fourier-related transform similar to the discrete Fourier transform (DFT), but using only real numbers. DCTs are generally related to Fourier series coefficients of a periodically and symmetrically extended sequence, whereas DFTs are related to Fourier series coefficients of sequences that are only periodically extended. DCTs are equivalent to DFTs of roughly twice the length, operating on real data with even symmetry (since the Fourier transform of a real and even function is real and even); in some variants the input or output data are shifted by half a sample.

There are eight standard DCT variants, of which four are common. The most common variant is the type-II DCT, which is often called simply the DCT. This was the original DCT as first proposed by Ahmed. Its inverse, the type-III DCT, is correspondingly often called simply the inverse DCT or the IDCT. Two related transforms are the discrete sine transform (DST), which is equivalent to a DFT of real and odd functions, and the modified discrete cosine transform (MDCT), which is based on a DCT of overlapping data.

DCT compression, also known as block compression, compresses data in sets of discrete DCT blocks.

History

The DCT was first conceived by Nasir Ahmed, T. Natarajan and K. R. Rao while working at Kansas State University. The concept was proposed to the National Science Foundation in 1972. The DCT was originally intended for image compression.[9][1] Ahmed developed a practical DCT algorithm with his PhD students T. Raj Natarajan, Wills Dietrich, and Jeremy Fries, and his friend Dr. K. R. Rao at the University of Texas at Arlington in 1973.[9] They presented their results in a January 1974 paper, titled Discrete Cosine Transform.[5][6][10] It described what is now called the type-II DCT (DCT-II),[2]: 51 as well as the type-III inverse DCT (IDCT).[5]

Since its introduction in 1974, there has been significant research on the DCT.[10] In 1977, Wen-Hsiung Chen published a paper with C. Harrison Smith and Stanley C. Fralick presenting a fast DCT algorithm.[11][10] Further developments include a 1978 paper by M. J. Narasimha and A. M. Peterson, and a 1984 paper by B. G. Lee.[10] These research papers, along with the original 1974 Ahmed paper and the 1977 Chen paper, were cited by the Joint Photographic Experts Group as the basis for JPEG's lossy image compression algorithm in 1992.[10][12]

The

In 1975, John A. Roese and Guner S. Robinson adapted the DCT for inter-frame motion-compensated video coding.

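A DCT variant, the modified discrete cosine transform (MDCT), was developed by John P. Princen, A. W. Johnson and Alan B. Bradley at the University of Surrey in 1987.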

Nasir Ahmed also developed a lossless DCT algorithm with Giridhar Mandyam and Neeraj Magotra at the University of New Mexico in 1995. This allows the DCT technique to be used for lossless compression of images. It is a modification of the original DCT algorithm, and incorporates elements of inverse DCT and delta modulation. It is reported to be a more effective lossless compression algorithm than entropy coding.[27] Lossless DCT is also known as LDCT.[28]

Applications

The DCT is the most widely used transformation technique in

The DCT, and in particular the DCT-II, is often used in signal and image processing, especially for lossy compression, because it has a strong energy compaction property.
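The energy compaction property can be illustrated with a small numerical sketch. The code below assumes the orthonormal scaling of the DCT-II (so that the transform preserves total energy) and uses an arbitrary smooth ramp as test data; the function name `dct2_orthonormal` and the data are illustrative choices, not taken from any standard.

```python
import math

def dct2_orthonormal(x):
    """Orthonormal DCT-II: X_0 is scaled by sqrt(1/N), the other X_k by sqrt(2/N)."""
    n_pts = len(x)
    out = []
    for k in range(n_pts):
        s = sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * k)
                for n in range(n_pts))
        scale = math.sqrt(1.0 / n_pts) if k == 0 else math.sqrt(2.0 / n_pts)
        out.append(scale * s)
    return out

signal = [float(n) for n in range(8)]       # a smooth ramp (illustrative data)
coeffs = dct2_orthonormal(signal)

# With orthonormal scaling, the coefficient energy equals the signal energy.
total_energy = sum(c * c for c in coeffs)
first_two = sum(c * c for c in coeffs[:2])  # energy in the two lowest frequencies
print(f"fraction of energy in first 2 of 8 coefficients: {first_two / total_energy:.4f}")
```

For smooth inputs such as this ramp, most of the energy lands in the lowest-frequency coefficients, which is what lossy coders exploit when they discard or coarsely quantize the rest.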

DCTs are widely employed in solving

DCTs are closely related to

General applications

The DCT is widely used in many applications, which include the following.

- time-domain aliasing cancellation (TDAC)[37]

- Digital audio[1]

- Digital radio — Digital Audio Broadcasting (DAB+),[38] HD Radio[39]

- Speech processing — speech coding[40][41] speech recognition, voice activity detection (VAD)[37]

- videoconferencing[1]

- facial recognition systems, biometric watermarking, fingerprint-based biometric watermarking, palm print identification/recognition[37]

- Network bandwidth usage reduction[1]

- Cryptography — encryption, steganography, copyright protection[37]

- Data compression — transform coding, lossy compression, lossless compression[36]

- variable bitrate encoding[1]

- Digital media[34] — digital distribution[43]

- video-on-demand (VOD)[8]

- Forgery detection[37]

- Geophysical transient electromagnetics (transient EM)[37]

- feature extraction[37]

- RGB)[1]

- high-dynamic-range imaging (HDR imaging)[44]

- progressive image transmission[37]

- spatiotemporal masking effects, foveated imaging[37]

- Image quality assessment — DCT-based quality degradation metric (DCT QM)[37]

- Medical technology

- Electrocardiography (ECG) — vectorcardiography (VCG)[37]

- compression classification[37]

- Pattern recognition[37]

- Region of interest (ROI) extraction[37]

- Surveillance[37]

- Vehicular event data recorder camera[37]

- Video

- Digital cinema[45] — digital cinematography, digital movie cameras, video editing, film editing,[47][48] Dolby Digital audio[1][22]

- ultra HDTV (UHDTV)[1]

- motion vectors,[1] 3D video coding, local distortion detection probability (LDDP) model, moving object detection, Multiview Video Coding (MVC)[37]

- Video processing — motion analysis, 3D-DCT motion analysis, video content analysis, data extraction,[37] video browsing,[49] professional video production[50]

- 3D video watermarking, reversible data hiding, watermarking detection[37]

- Wireless technology

- Wireless sensor network (WSN) — wireless acoustic sensor networks[37]

Visual media standards

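The DCT-II is an important image compression technique. It is used in image compression standards such as JPEG. There, the two-dimensional DCT-II of $N \times N$ blocks is computed and the results are quantized and entropy coded. The integer DCT, an integer approximation of the DCT, is used in video coding standards such as Advanced Video Coding (AVC) and High Efficiency Video Coding (HEVC).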

Image formats

| Image compression standard | Year | Common applications |
|---|---|---|
| JPEG[1] | 1992 | The most widely used image compression standard and image format.[46] |
| JPEG XR | 2009 | Open XML Paper Specification |
| WebP | 2010 | A graphic format that supports the lossy compression of digital images. Developed by Google. |
| High Efficiency Image Format (HEIF) | 2013 | animated GIF format.[55] |
| BPG | 2014 | Based on HEVC compression |
| JPEG XL[56] | 2020 | A royalty-free raster-graphics file format that supports both lossy and lossless compression. |

Video formats

| Video coding standard | Year | Common applications |
|---|---|---|
| H.261[57][58] | 1988 | First of a family of video telephone products. |
| Motion JPEG (MJPEG)[59] | 1992 | digital cameras |
| MPEG-1 Video[60] | 1993 | CD or Internet video |
| MPEG-2 Video (H.262)[60] | 1995 | Storage and handling of digital images in broadcast applications, video distribution |
| DV | 1995 | Camcorders, digital cassettes |
| H.263 (MPEG-4 Part 2)[57] | 1996 | |
| Advanced Video Coding (AVC, H.264, MPEG-4)[1][52] | 2003 | Popular |
| Theora | 2004 | Internet video, web browsers |
| VC-1 | 2006 | Blu-ray Discs |
| Apple ProRes | 2007 | Professional video production.[50] |
| VP9 | 2010 | A video codec developed by Google used in the WebM container format with HTML5. |
| High Efficiency Video Coding (HEVC, H.265)[1][4] | 2013 | Successor to the H.264 standard, having substantially improved compression capability |
| Daala | 2013 | Research video format by Xiph.org |
| AV1[63] | 2018 | An open source format based on VP10 (VP9's internal successor), Daala and Thor; used by content providers such as YouTube[64][65] and Netflix.[66][67] |

MDCT audio standards

General audio

| Audio compression standard | Year | Common applications |
|---|---|---|
| Dolby Digital (AC-3)[22][23] | 1991 | video games |
| Adaptive Transform Acoustic Coding (ATRAC)[22] | 1992 | MiniDisc |
| MP3[24][1] | 1993 | portable media players, streaming media |
| Perceptual Audio Coder (PAC)[22] | 1996 | Digital audio radio service (DARS) |
| | 1997 | |
| High-Efficiency Advanced Audio Coding (AAC+)[68][38]: 478 | 1997 | digital audio broadcasting (DAB+),[38] Digital Radio Mondiale (DRM) |
| Cook Codec | 1998 | RealAudio |
| Windows Media Audio (WMA)[22] | 1999 | Windows Media |
| Vorbis[26][22] | 2000 | |
| High-Definition Coding (HDC)[39] | 2002 | Digital radio, HD Radio |
| Dynamic Resolution Adaptation (DRA)[22] | 2008 | China national audio standard, China Multimedia Mobile Broadcasting, DVB-H |
| Opus[69] | 2012 | VoIP,[70] mobile telephony, WhatsApp,[71][72][73] PlayStation 4[74] |
| Dolby AC-4[75] | 2015 | ATSC 3.0, ultra-high-definition television (UHD TV) |
| MPEG-H 3D Audio[76] | | |

Speech coding

| Speech coding standard | Year | Common applications |
|---|---|---|
| AAC-LD (LD-MDCT)[77] | 1999 | |
| Siren[40] | 1999 | VoIP, wideband audio, G.722.1 |
| G.722.1[78] | 1999 | VoIP, wideband audio, G.722 |
| G.729.1[79] | 2006 | G.729, VoIP, wideband audio,[79] mobile telephony |
| EVRC-WB[38]: 31, 478 | 2007 | Wideband audio |
| G.718[80] | 2008 | VoIP, wideband audio, mobile telephony |
| G.719[38] | 2008 | voice mail |
| CELT[81] | 2011 | VoIP,[82][83] mobile telephony |
| Enhanced Voice Services (EVS)[84] | 2014 | Mobile telephony, VoIP, wideband audio |

MD DCT

Multidimensional DCTs (MD DCTs) have several applications, mainly 3-D DCTs such as the 3-D DCT-II, which has several new applications like Hyperspectral Imaging coding systems,

Digital signal processing

The DCT plays an important role in digital signal processing, where it is used to compress signals. For example, the DCT can be used in electrocardiography to compress ECG signals. DCT2 provides a better compression ratio than DCT.

The DCT is widely implemented in

Compression artifacts

A common issue with DCT compression in

DCT blocks are often used in glitch art.[3] The artist Rosa Menkman makes use of DCT-based compression artifacts in her glitch art,[96] particularly the DCT blocks found in most digital media formats such as JPEG digital images and MP3 digital audio.[3] Another example is Jpegs by German photographer Thomas Ruff, which uses intentional JPEG artifacts as the basis of the picture's style.[97][98]

Informal overview

Like any Fourier-related transform, discrete cosine transforms (DCTs) express a function or a signal in terms of a sum of sinusoids with different frequencies and amplitudes.

The Fourier-related transforms that operate on a function over a finite domain, such as the DFT or DCT or a Fourier series, can be thought of as implicitly defining an extension of that function outside the domain. That is, once you write a function $f(x)$ as a sum of sinusoids, you can evaluate that sum at any $x$, even for $x$ where the original $f(x)$ was not specified. The DFT, like the Fourier series, implies a periodic extension of the original function. A DCT, like a cosine transform, implies an even extension of the original function.

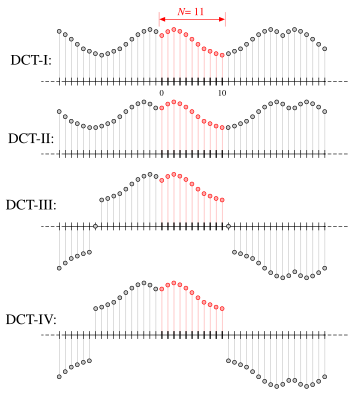

However, because DCTs operate on finite, discrete sequences, two issues arise that do not apply for the continuous cosine transform. First, one has to specify whether the function is even or odd at both the left and right boundaries of the domain (i.e. the min-n and max-n boundaries in the definitions below, respectively). Second, one has to specify around what point the function is even or odd. In particular, consider a sequence abcd of four equally spaced data points, and say that we specify an even left boundary. There are two sensible possibilities: either the data are even about the sample a, in which case the even extension is dcbabcd, or the data are even about the point halfway between a and the previous point, in which case the even extension is dcbaabcd (a is repeated).

These choices lead to all the standard variations of DCTs and also discrete sine transforms (DSTs). Each boundary can be either even or odd (2 choices per boundary) and can be symmetric about a data point or the point halfway between two data points (2 choices per boundary), for a total of 2 × 2 × 2 × 2 = 16 possibilities. Half of these possibilities, those where the left boundary is even, correspond to the 8 types of DCT; the other half are the 8 types of DST.
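The two even extensions of the sequence abcd, and the count of 16 boundary combinations, can be reproduced with a short sketch. The helper name `even_extension_left` and the string labels are illustrative only.

```python
from itertools import product

def even_extension_left(seq, about_sample):
    """Mirror seq to the left with an even boundary.

    about_sample=True  -> symmetric about the first sample (it is not repeated)
    about_sample=False -> symmetric about the point halfway before the first
                          sample (the first sample is repeated)
    """
    mirrored = seq[::-1]
    return (mirrored[:-1] if about_sample else mirrored) + seq

print(even_extension_left("abcd", about_sample=True))   # dcbabcd
print(even_extension_left("abcd", about_sample=False))  # dcbaabcd

# Each boundary has a parity (even/odd) and a symmetry point (sample/half-sample).
per_boundary = list(product(["even", "odd"], ["sample", "half-sample"]))
combos = list(product(per_boundary, per_boundary))       # (left, right) pairs
dct_like = [c for c in combos if c[0][0] == "even"]      # left boundary even
print(len(combos), len(dct_like))                        # 16 8
```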

These different boundary conditions strongly affect the applications of the transform and lead to uniquely useful properties for the various DCT types. Most directly, when using Fourier-related transforms to solve partial differential equations by spectral methods, the boundary conditions are directly specified as a part of the problem being solved. Or, for the MDCT (based on the type-IV DCT), the boundary conditions are intimately involved in the MDCT's critical property of time-domain aliasing cancellation. In a more subtle fashion, the boundary conditions are responsible for the "energy compactification" properties that make DCTs useful for image and audio compression, because the boundaries affect the rate of convergence of any Fourier-like series.

In particular, it is well known that any

Formal definition

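Formally, the discrete cosine transform is a linear, invertible function $f:\mathbb{R}^N \to \mathbb{R}^N$ (where $\mathbb{R}$ denotes the set of real numbers), or equivalently an invertible $N \times N$ square matrix. There are several variants of the DCT with slightly modified definitions. The $N$ real numbers $x_0,\ldots,x_{N-1}$ are transformed into the $N$ real numbers $X_0,\ldots,X_{N-1}$ according to one of the formulas below.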

DCT-I

$$X_k = \frac{1}{2}\left(x_0 + (-1)^k x_{N-1}\right) + \sum_{n=1}^{N-2} x_n \cos\left[\frac{\pi}{N-1}\,n\,k\right] \qquad \text{for } k = 0,\ldots,N-1.$$

Some authors further multiply the $x_0$ and $x_{N-1}$ terms by $\sqrt{2}$, and correspondingly multiply the $X_0$ and $X_{N-1}$ terms by $1/\sqrt{2}$, which makes the DCT-I matrix orthogonal if one further multiplies by an overall scale factor of $\sqrt{2/(N-1)}$, but breaks the direct correspondence with a real-even DFT.

The DCT-I is exactly equivalent (up to an overall scale factor of 2) to a DFT of $2(N-1)$ real numbers with even symmetry. For example, a DCT-I of $N = 5$ real numbers abcde is exactly equivalent to a DFT of eight real numbers abcdedcb (even symmetry), divided by two. (In contrast, DCT types II-IV involve a half-sample shift in the equivalent DFT.)

Note, however, that the DCT-I is not defined for $N$ less than 2, while all other DCT types are defined for any positive $N$.

Thus, the DCT-I corresponds to the boundary conditions: $x_n$ is even around $n = 0$ and even around $n = N-1$; similarly for $X_k$.
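The equivalence between the DCT-I and a real-even DFT can be checked numerically. The sketch below assumes the unnormalized convention $X_k = \tfrac12\left(x_0 + (-1)^k x_{N-1}\right) + \sum_{n=1}^{N-2} x_n \cos[\pi n k/(N-1)]$ and uses a naive $O(N^2)$ DFT; the sample values are arbitrary.

```python
import cmath
import math

def dct1(x):
    """Unnormalized DCT-I (assumed convention, see lead-in)."""
    n_pts = len(x)
    out = []
    for k in range(n_pts):
        acc = 0.5 * (x[0] + (-1) ** k * x[-1])
        for n in range(1, n_pts - 1):
            acc += x[n] * math.cos(math.pi * n * k / (n_pts - 1))
        out.append(acc)
    return out

def dft(y):
    """Naive discrete Fourier transform."""
    m = len(y)
    return [sum(y[n] * cmath.exp(-2j * math.pi * n * k / m) for n in range(m))
            for k in range(m)]

x = [1.0, 2.0, 3.0, 5.0, 8.0]     # a b c d e
y = x + x[-2:0:-1]                 # a b c d e d c b  (even symmetry, length 8)

dct_values = dct1(x)
half_dft = [v / 2 for v in dft(y)][:len(x)]

max_diff = max(abs(h.real - c) for h, c in zip(half_dft, dct_values))
max_imag = max(abs(h.imag) for h in half_dft)
print(max_diff < 1e-9, max_imag < 1e-9)
```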

DCT-II

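$$X_k = \sum_{n=0}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \frac{1}{2}\right)k\right] \qquad \text{for } k = 0,\ldots,N-1.$$

The DCT-II is probably the most commonly used form, and is often simply referred to as "the DCT".[5][6]

This transform is exactly equivalent (up to an overall scale factor of 2) to a DFT of $4N$ real inputs of even symmetry where the even-indexed elements are zero. That is, it is half of the DFT of the $4N$ inputs $y_n$, where $y_{2n} = 0$ and $y_{2n+1} = x_n$ for $0 \le n < N$, $y_{2N} = 0$, and $y_{4N-n} = y_n$ for $0 < n < 2N$. The DCT-II can also be computed from a signal of length $2N$ followed by a multiplication by a half-sample shift, as demonstrated by Makhoul.

Some authors further multiply the $X_0$ term by $1/\sqrt{N}$ and multiply the rest of the matrix by an overall scale factor of $\sqrt{2/N}$ (see below for the corresponding change in DCT-III). This makes the DCT-II matrix orthogonal, but breaks the direct correspondence with a real-even DFT of half-shifted input.

The DCT-II implies the boundary conditions: $x_n$ is even around $n = -1/2$ and even around $n = N - 1/2$; $X_k$ is even around $k = 0$ and odd around $k = N$.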
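The embedding of a DCT-II into a real-even DFT of length $4N$ with zero even-indexed elements can be verified directly. This sketch assumes the unnormalized convention $X_k = \sum_n x_n \cos[\pi (n + \tfrac12) k / N]$; the helper `embed_4n` is a hypothetical name for the construction of $y_n$.

```python
import cmath
import math

def dct2(x):
    """Unnormalized DCT-II (assumed convention, see lead-in)."""
    n_pts = len(x)
    return [sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * k)
                for n in range(n_pts)) for k in range(n_pts)]

def dft(y):
    """Naive discrete Fourier transform."""
    m = len(y)
    return [sum(y[n] * cmath.exp(-2j * math.pi * n * k / m) for n in range(m))
            for k in range(m)]

def embed_4n(x):
    """Build y of length 4N: y[2n+1] = x[n], even indices zero, y[4N-n] = y[n]."""
    n_pts = len(x)
    y = [0.0] * (4 * n_pts)
    for n, value in enumerate(x):
        y[2 * n + 1] = value
        y[4 * n_pts - (2 * n + 1)] = value   # mirror image (even symmetry)
    return y

x = [3.0, -1.0, 4.0, 1.0, -5.0]
y = embed_4n(x)
half_dft = [v / 2 for v in dft(y)][:len(x)]
direct = dct2(x)

max_diff = max(abs(h.real - d) for h, d in zip(half_dft, direct))
max_imag = max(abs(h.imag) for h in half_dft)
print(max_diff < 1e-9, max_imag < 1e-9)
```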

DCT-III

$$X_k = \frac{1}{2}x_0 + \sum_{n=1}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(k + \frac{1}{2}\right)n\right] \qquad \text{for } k = 0,\ldots,N-1.$$

Because it is the inverse of DCT-II up to a scale factor (see below), this form is sometimes simply referred to as "the inverse DCT" ("IDCT").[6]

Some authors divide the $x_0$ term by $\sqrt{2}$ instead of by 2 (resulting in an overall $x_0/\sqrt{2}$ term) and multiply the resulting matrix by an overall scale factor of $\sqrt{2/N}$ (see above for the corresponding change in DCT-II), so that the DCT-II and DCT-III are transposes of one another. This makes the DCT-III matrix orthogonal, but breaks the direct correspondence with a real-even DFT of half-shifted output.

The DCT-III implies the boundary conditions: $x_n$ is even around $n = 0$ and odd around $n = N$; $X_k$ is even around $k = -1/2$ and even around $k = N - 1/2$.

DCT-IV

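$$X_k = \sum_{n=0}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \frac{1}{2}\right)\left(k + \frac{1}{2}\right)\right] \qquad \text{for } k = 0,\ldots,N-1.$$

The DCT-IV matrix becomes orthogonal (and thus, being clearly symmetric, its own inverse) if one further multiplies by an overall scale factor of $\sqrt{2/N}$.

A variant of the DCT-IV, where data from different transforms are overlapped, is called the modified discrete cosine transform (MDCT).[103]

The DCT-IV implies the boundary conditions: $x_n$ is even around $n = -1/2$ and odd around $n = N - 1/2$; similarly for $X_k$.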
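The claim that the scaled DCT-IV matrix is symmetric and its own inverse can be checked with a few lines of plain Python. The sketch assumes entries $\sqrt{2/N}\cos[\pi (n + \tfrac12)(k + \tfrac12)/N]$; the size $N = 6$ is an arbitrary choice.

```python
import math

def dct4_matrix(n_pts):
    """DCT-IV matrix scaled by sqrt(2/N) (assumed scaling, see lead-in)."""
    scale = math.sqrt(2.0 / n_pts)
    return [[scale * math.cos(math.pi * ((n + 0.5) * (k + 0.5)) / n_pts)
             for n in range(n_pts)] for k in range(n_pts)]

def matmul(a, b):
    """Plain-Python matrix product of two square matrices."""
    size = len(a)
    return [[sum(a[i][m] * b[m][j] for m in range(size)) for j in range(size)]
            for i in range(size)]

size = 6
c = dct4_matrix(size)
c_squared = matmul(c, c)

# Symmetric: C equals its transpose. Involutory: C times C is the identity.
symmetry_err = max(abs(c[i][j] - c[j][i]) for i in range(size) for j in range(size))
identity_err = max(abs(c_squared[i][j] - (1.0 if i == j else 0.0))
                   for i in range(size) for j in range(size))
print(symmetry_err < 1e-12, identity_err < 1e-12)
```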

DCT V-VIII

DCTs of types I–IV treat both boundaries consistently regarding the point of symmetry: they are even/odd around either a data point for both boundaries or halfway between two data points for both boundaries. By contrast, DCTs of types V-VIII imply boundaries that are even/odd around a data point for one boundary and halfway between two data points for the other boundary.

In other words, DCT types I–IV are equivalent to real-even DFTs of even order (regardless of whether $N$ is even or odd), since the corresponding DFT is of length $2(N-1)$ (for DCT-I) or $4N$ (for DCT-II & III) or $8N$ (for DCT-IV). The four additional types of discrete cosine transform[104] correspond essentially to real-even DFTs of logically odd order, which have factors of $N \pm 1/2$ in the denominators of the cosine arguments.

However, these variants seem to be rarely used in practice. One reason, perhaps, is that FFT algorithms for odd-length DFTs are generally more complicated than FFT algorithms for even-length DFTs (e.g. the simplest radix-2 algorithms are only for even lengths), and this increased intricacy carries over to the DCTs as described below.

(The trivial real-even array, a length-one DFT (odd length) of a single number a, corresponds to a DCT-V of length $N = 1$.)

Inverse transforms

Using the normalization conventions above, the inverse of DCT-I is DCT-I multiplied by 2/(N − 1). The inverse of DCT-IV is DCT-IV multiplied by 2/N. The inverse of DCT-II is DCT-III multiplied by 2/N and vice versa.[6]
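These inverse relations can be confirmed numerically. The sketch below assumes the unnormalized definitions written out in the docstrings (DCT-I with half-weighted end points, DCT-III with a half-weighted $x_0$ term); the input vector is arbitrary.

```python
import math

def dct1(x):
    """X_k = (x_0 + (-1)^k x_{N-1})/2 + sum_{n=1}^{N-2} x_n cos(pi n k/(N-1))."""
    n_pts = len(x)
    return [0.5 * (x[0] + (-1) ** k * x[-1])
            + sum(x[n] * math.cos(math.pi * n * k / (n_pts - 1))
                  for n in range(1, n_pts - 1))
            for k in range(n_pts)]

def dct2(x):
    """X_k = sum_n x_n cos(pi (n + 1/2) k / N)."""
    n_pts = len(x)
    return [sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * k)
                for n in range(n_pts)) for k in range(n_pts)]

def dct3(x):
    """X_k = x_0/2 + sum_{n=1}^{N-1} x_n cos(pi (k + 1/2) n / N)."""
    n_pts = len(x)
    return [0.5 * x[0]
            + sum(x[n] * math.cos(math.pi / n_pts * (k + 0.5) * n)
                  for n in range(1, n_pts))
            for k in range(n_pts)]

def dct4(x):
    """X_k = sum_n x_n cos(pi (n + 1/2)(k + 1/2) / N)."""
    n_pts = len(x)
    return [sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * (k + 0.5))
                for n in range(n_pts)) for k in range(n_pts)]

def scaled(values, factor):
    return [factor * v for v in values]

def max_abs_diff(a, b):
    return max(abs(u - v) for u, v in zip(a, b))

x = [4.0, -1.0, 2.5, 0.0, 3.0, 7.0]
n_pts = len(x)

err_1 = max_abs_diff(scaled(dct1(dct1(x)), 2.0 / (n_pts - 1)), x)
err_23 = max_abs_diff(scaled(dct3(dct2(x)), 2.0 / n_pts), x)
err_32 = max_abs_diff(scaled(dct2(dct3(x)), 2.0 / n_pts), x)
err_4 = max_abs_diff(scaled(dct4(dct4(x)), 2.0 / n_pts), x)
print(err_1 < 1e-9, err_23 < 1e-9, err_32 < 1e-9, err_4 < 1e-9)
```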

As with the DFT, the normalization factor in front of these transform definitions is merely a convention and differs between treatments. For example, some authors multiply the transforms by $\sqrt{2/N}$ so that the inverse does not require any additional multiplicative factor. Combined with appropriate factors of √2 (see above), this can be used to make the transform matrix orthogonal.

Multidimensional DCTs

Multidimensional variants of the various DCT types follow straightforwardly from the one-dimensional definitions: they are simply a separable product (equivalently, a composition) of DCTs along each dimension.

M-D DCT-II

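For example, a two-dimensional DCT-II of an image or a matrix is simply the one-dimensional DCT-II, from above, performed along the rows and then along the columns (or vice versa). That is, the 2D DCT-II is given by the formula (omitting normalization and other scale factors, as above):

$$X_{k_1,k_2} = \sum_{n_1=0}^{N_1-1}\sum_{n_2=0}^{N_2-1} x_{n_1,n_2}\cos\left[\frac{\pi}{N_1}\left(n_1+\frac{1}{2}\right)k_1\right]\cos\left[\frac{\pi}{N_2}\left(n_2+\frac{1}{2}\right)k_2\right].$$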

- The inverse of a multi-dimensional DCT is just a separable product of the inverses of the corresponding one-dimensional DCTs (see above), e.g. the one-dimensional inverses applied along one dimension at a time in a row-column algorithm.
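As a minimal sketch of the separability described above, the following code computes an unnormalized 2D DCT-II twice: once with a row-column pass of one-dimensional transforms and once by evaluating the double sum directly. The function names and the 3 × 4 test block are illustrative.

```python
import math

def dct2_1d(x):
    """Unnormalized 1-D DCT-II: X_k = sum_n x_n cos(pi (n + 1/2) k / N)."""
    n_pts = len(x)
    return [sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * k)
                for n in range(n_pts)) for k in range(n_pts)]

def dct2_2d_rowcol(block):
    """Row-column algorithm: transform every row, then every column."""
    rows = [dct2_1d(row) for row in block]
    cols = [dct2_1d(list(col)) for col in zip(*rows)]
    return [list(r) for r in zip(*cols)]       # transpose back to [k1][k2]

def dct2_2d_direct(block):
    """Direct evaluation of the separable double sum (slow, for checking only)."""
    n1, n2 = len(block), len(block[0])
    return [[sum(block[a][b]
                 * math.cos(math.pi / n1 * (a + 0.5) * k1)
                 * math.cos(math.pi / n2 * (b + 0.5) * k2)
                 for a in range(n1) for b in range(n2))
             for k2 in range(n2)] for k1 in range(n1)]

block = [[1.0, 2.0, 0.0, -1.0],
         [3.0, 5.0, 2.0, 4.0],
         [0.0, -2.0, 7.0, 1.0]]

fast = dct2_2d_rowcol(block)
slow = dct2_2d_direct(block)
max_diff = max(abs(fast[i][j] - slow[i][j]) for i in range(3) for j in range(4))
print(max_diff < 1e-9)
```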

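The 3-D DCT-II is simply the extension of the 2-D DCT-II to three-dimensional space, and (omitting normalization, as above) can be calculated by the formula

$$X_{k_1,k_2,k_3} = \sum_{n_1=0}^{N_1-1}\sum_{n_2=0}^{N_2-1}\sum_{n_3=0}^{N_3-1} x_{n_1,n_2,n_3}\cos\left[\frac{\pi}{N_1}\left(n_1+\frac{1}{2}\right)k_1\right]\cos\left[\frac{\pi}{N_2}\left(n_2+\frac{1}{2}\right)k_2\right]\cos\left[\frac{\pi}{N_3}\left(n_3+\frac{1}{2}\right)k_3\right], \qquad k_i = 0,\ldots,N_i-1.$$

The inverse of the 3-D DCT-II is the 3-D DCT-III, which, up to normalization, is the separable product of one-dimensional DCT-IIIs applied along each of the three dimensions.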

Technically, computing a two-, three- or multi-dimensional DCT by sequences of one-dimensional DCTs along each dimension is known as a row-column algorithm. As with multidimensional FFT algorithms, however, there exist other methods to compute the same thing while performing the computations in a different order (i.e. interleaving/combining the algorithms for the different dimensions). Owing to the rapid growth in applications based on the 3-D DCT, several fast algorithms have been developed for the computation of the 3-D DCT-II. Vector-radix algorithms are applied to the M-D DCT to reduce the computational complexity and increase the computational speed. To compute the 3-D DCT-II efficiently, a fast algorithm, the Vector-Radix Decimation in Frequency (VR DIF) algorithm, was developed.

3-D DCT-II VR DIF

In order to apply the VR DIF algorithm, the input data is to be formulated and rearranged as follows.[105][106] The transform size is N × N × N, where N is assumed to be a power of 2.

- where

The figure to the adjacent shows the four stages that are involved in calculating 3-D DCT-II using VR DIF algorithm. The first stage is the 3-D reordering using the index mapping illustrated by the above equations. The second stage is the butterfly calculation. Each butterfly calculates eight points together as shown in the figure just below, where .

The original 3-D DCT-II now can be written as

where

If the even and the odd parts of and and are considered, the general formula for the calculation of the 3-D DCT-II can be expressed as

where

Arithmetic complexity

The whole 3-D DCT calculation needs $\log_2 N$ stages, and each stage involves $N^3/8$ butterflies, so the whole 3-D DCT requires $(N^3/8)\log_2 N$ butterflies to be computed. Each butterfly requires seven real multiplications (including trivial multiplications) and 24 real additions (including trivial additions). Therefore, the total number of real multiplications needed is $\tfrac{7}{8}N^3\log_2 N$, and the total number of real additions, including the post-additions (recursive additions), which can be calculated directly after the butterfly stage or after the bit-reverse stage, is given by[106]

$$\underbrace{3N^3\log_2 N}_{\text{butterflies}} + \underbrace{\tfrac{3}{2}N^3\log_2 N - 3N^3 + 3N^2}_{\text{recursive}} = \tfrac{9}{2}N^3\log_2 N - 3N^3 + 3N^2.$$

The conventional method to calculate the MD-DCT-II is a Row-Column-Frame (RCF) approach, which is computationally complex and less productive on most advanced recent hardware platforms. The VR DIF algorithm requires considerably fewer multiplications than the RCF algorithm. The numbers of multiplications and additions involved in the RCF approach are $\tfrac{3}{2}N^3\log_2 N$ and $\tfrac{9}{2}N^3\log_2 N - 3N^3 + 3N^2$, respectively. From Table 1, it can be seen that the total number of multiplications associated with the 3-D DCT VR algorithm is less than that associated with the RCF approach by more than 40%. In addition, the RCF approach involves matrix transpose and more indexing and data swapping than the new VR algorithm. This makes the 3-D DCT VR algorithm more efficient and better suited for 3-D applications that involve the 3-D DCT-II, such as video compression and other 3-D image processing applications.

Table 1: Operation counts per point (totals divided by $N^3$) for the VR DIF and RCF algorithms.

| Transform Size | 3D VR Mults | RCF Mults | 3D VR Adds | RCF Adds |
|---|---|---|---|---|
| 8 × 8 × 8 | 2.625 | 4.5 | 10.875 | 10.875 |
| 16 × 16 × 16 | 3.5 | 6 | 15.188 | 15.188 |
| 32 × 32 × 32 | 4.375 | 7.5 | 19.594 | 19.594 |
| 64 × 64 × 64 | 5.25 | 9 | 24.047 | 24.047 |
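The per-point figures in the table can be regenerated from closed-form expressions. The sketch below assumes the totals $\tfrac{7}{8}N^3\log_2 N$ (VR multiplications), $\tfrac{3}{2}N^3\log_2 N$ (RCF multiplications) and $\tfrac{9}{2}N^3\log_2 N - 3N^3 + 3N^2$ (additions for both methods), each divided by $N^3$; these expressions were chosen because they reproduce the tabulated values and should be read as an assumption rather than a quotation from the cited papers.

```python
import math

def per_point_counts(n):
    """Return (VR mults, RCF mults, adds) per point for an n x n x n transform."""
    lg = math.log2(n)
    vr_mults = 7.0 / 8.0 * lg             # (7/8) N^3 log2 N, divided by N^3
    rcf_mults = 1.5 * lg                  # (3/2) N^3 log2 N, divided by N^3
    adds = 4.5 * lg - 3.0 + 3.0 / n       # same expression for VR and RCF
    return vr_mults, rcf_mults, adds

rows = {}
for n in (8, 16, 32, 64):
    vr_m, rcf_m, adds = per_point_counts(n)
    rows[n] = (vr_m, rcf_m, adds)
    saving = 1.0 - vr_m / rcf_m           # relative reduction in multiplications
    print(f"{n:>2}^3  VR mults {vr_m:.3f}  RCF mults {rcf_m:.3f}  "
          f"adds {adds:.3f}  saving {saving:.1%}")
```

Under these assumed formulas the multiplication saving is the same ratio at every size, since both counts scale with $\log_2 N$.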

The main consideration in choosing a fast algorithm is to avoid computational and structural complexities. As the technology of computers and DSPs advances, the execution time of arithmetic operations (multiplications and additions) is becoming very fast, and regular computational structure becomes the most important factor.[107] Therefore, although the 3-D VR algorithm described above does not achieve the theoretical lower bound on the number of multiplications,[108] it has a simpler computational structure than other 3-D DCT algorithms. It can be implemented in place using a single butterfly and possesses the properties of the Cooley–Tukey FFT algorithm in 3-D. Hence, the 3-D VR algorithm presents a good choice for reducing arithmetic operations in the calculation of the 3-D DCT-II, while keeping the simple structure that characterizes butterfly-style Cooley–Tukey FFT algorithms.

The image to the right shows a combination of horizontal and vertical frequencies for an 8 × 8 two-dimensional DCT. Each step from left to right and top to bottom is an increase in frequency by 1/2 cycle. For example, moving right one from the top-left square yields a half-cycle increase in the horizontal frequency. Another move to the right yields two half-cycles. A move down yields two half-cycles horizontally and a half-cycle vertically. The source data ( 8×8 ) is transformed to a linear combination of these 64 frequency squares.

MD-DCT-IV

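The M-D DCT-IV is just an extension of the 1-D DCT-IV to an M-dimensional domain. The 2-D DCT-IV of a matrix or an image is given by

$$X_{k_1,k_2} = \sum_{n_1=0}^{N_1-1}\sum_{n_2=0}^{N_2-1} x_{n_1,n_2}\cos\left[\frac{\pi}{N_1}\left(n_1+\frac{1}{2}\right)\left(k_1+\frac{1}{2}\right)\right]\cos\left[\frac{\pi}{N_2}\left(n_2+\frac{1}{2}\right)\left(k_2+\frac{1}{2}\right)\right]$$

for $k_1 = 0,\ldots,N_1-1$ and $k_2 = 0,\ldots,N_2-1$.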

We can compute the MD DCT-IV using the regular row-column method or we can use the polynomial transform method[109] for the fast and efficient computation. The main idea of this algorithm is to use the Polynomial Transform to convert the multidimensional DCT into a series of 1-D DCTs directly. MD DCT-IV also has several applications in various fields.

Computation

Although the direct application of these formulas would require $O(N^2)$ operations, it is possible to compute the same thing with only $O(N \log N)$ complexity by factorizing the computation similarly to the fast Fourier transform (FFT). One can also compute DCTs via FFTs combined with $O(N)$ pre- and post-processing steps. In general, $O(N \log N)$ methods to compute DCTs are known as fast cosine transform (FCT) algorithms.

The most efficient algorithms, in principle, are usually those that are specialized directly for the DCT, as opposed to using an ordinary FFT plus extra operations (see below for an exception). However, even "specialized" DCT algorithms (including all of those that achieve the lowest known arithmetic counts, at least for power-of-two sizes) are typically closely related to FFT algorithms – since DCTs are essentially DFTs of real-even data, one can design a fast DCT algorithm by taking an FFT and eliminating the redundant operations due to this symmetry. This can even be done automatically (Frigo & Johnson 2005). Algorithms based on the Cooley–Tukey FFT algorithm are most common, but any other FFT algorithm is also applicable. For example, the Winograd FFT algorithm leads to minimal-multiplication algorithms for the DFT, albeit generally at the cost of more additions, and a similar algorithm was proposed by (Feig & Winograd 1992a) for the DCT. Because the algorithms for DFTs, DCTs, and similar transforms are all so closely related, any improvement in algorithms for one transform will theoretically lead to immediate gains for the other transforms as well (Duhamel & Vetterli 1990).

While DCT algorithms that employ an unmodified FFT often have some theoretical overhead compared to the best specialized DCT algorithms, the former also have a distinct advantage: Highly optimized FFT programs are widely available. Thus, in practice, it is often easier to obtain high performance for general lengths N with FFT-based algorithms.[a] Specialized DCT algorithms, on the other hand, see widespread use for transforms of small, fixed sizes such as the 8 × 8 DCT-II used in JPEG compression, or the small DCTs (or MDCTs) typically used in audio compression. (Reduced code size may also be a reason to use a specialized DCT for embedded-device applications.)

In fact, even the DCT algorithms using an ordinary FFT are sometimes equivalent to pruning the redundant operations from a larger FFT of real-symmetric data, and they can even be optimal from the perspective of arithmetic counts. For example, a type-II DCT is equivalent to a DFT of size $4N$ with real-even symmetry whose even-indexed elements are zero. One of the most common methods for computing this via an FFT (e.g. the method used in FFTPACK and FFTW) was described by Narasimha & Peterson (1978) and Makhoul (1980), and this method in hindsight can be seen as one step of a radix-4 decimation-in-time Cooley–Tukey algorithm applied to the "logical" real-even DFT corresponding to the DCT-II.[b] Because the even-indexed elements are zero, this radix-4 step is exactly the same as a split-radix step. If the subsequent size-$N$ real-data FFT is also performed by a real-data split-radix algorithm (as in Sorensen et al. (1987)), then the resulting algorithm actually matches what was long the lowest published arithmetic count for the power-of-two DCT-II ($2N\log_2 N - N + 2$ real-arithmetic operations[c]).

A recent reduction in the operation count to $\tfrac{17}{9}N\log_2 N + O(N)$ also uses a real-data FFT.[110] So, there is nothing intrinsically bad about computing the DCT via an FFT from an arithmetic perspective; it is sometimes merely a question of whether the corresponding FFT algorithm is optimal. (As a practical matter, the function-call overhead in invoking a separate FFT routine might be significant for small $N$, but this is an implementation rather than an algorithmic question since it can be solved by unrolling or inlining.)
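As a rough sketch of computing a DCT-II through a size-$N$ FFT with $O(N)$ pre- and post-processing, the code below reorders the input (even-indexed samples first, odd-indexed samples reversed), applies an FFT, and rotates each output by a half-sample phase. It is written in the spirit of the reordering approach attributed above to Narasimha & Peterson and Makhoul, but it is not taken from FFTPACK or FFTW; it uses a plain recursive radix-2 complex FFT rather than a real-data split-radix FFT, and it assumes a power-of-two length and the unnormalized convention $X_k = \sum_n x_n \cos[\pi (n + \tfrac12) k / N]$.

```python
import cmath
import math

def fft(a):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return [complex(a[0])]
    even = fft(a[0::2])
    odd = fft(a[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * math.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out

def dct2_via_fft(x):
    """Unnormalized DCT-II from one length-N FFT plus O(N) pre/post-processing."""
    n_pts = len(x)
    # Pre-processing: even-indexed samples, then odd-indexed samples reversed.
    v = x[0::2] + x[1::2][::-1]
    spectrum = fft(v)
    # Post-processing: half-sample phase rotation, keep the real part.
    return [(cmath.exp(-1j * math.pi * k / (2 * n_pts)) * spectrum[k]).real
            for k in range(n_pts)]

def dct2_direct(x):
    """O(N^2) reference implementation used only for checking."""
    n_pts = len(x)
    return [sum(x[n] * math.cos(math.pi / n_pts * (n + 0.5) * k)
                for n in range(n_pts)) for k in range(n_pts)]

x = [2.0, 7.0, 1.0, 8.0, 2.0, 8.0, 1.0, 8.0]
fast = dct2_via_fft(x)
reference = dct2_direct(x)
max_diff = max(abs(f - r) for f, r in zip(fast, reference))
print(max_diff < 1e-9)
```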

Example of IDCT

Consider this 8x8 grayscale image of capital letter A.

DCT of the image = .

Each basis function is multiplied by its coefficient and then this product is added to the final image.
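The accumulation described above can be sketched for a small block. The code assumes the unnormalized 2D DCT-II as the forward transform, so the inverse weights each basis function by $4/(N_1 N_2)$ and halves the contribution of any index that is zero; the 4 × 4 test pattern is arbitrary and is not the letter-A image from the example.

```python
import math

def basis(k1, k2, n1, n2):
    """Sampled 2-D cosine basis function for the frequency pair (k1, k2)."""
    return [[math.cos(math.pi / n1 * (a + 0.5) * k1)
             * math.cos(math.pi / n2 * (b + 0.5) * k2)
             for b in range(n2)] for a in range(n1)]

def dct2_2d(img):
    """Unnormalized 2-D DCT-II: project the image onto every basis function."""
    n1, n2 = len(img), len(img[0])
    out = []
    for k1 in range(n1):
        row = []
        for k2 in range(n2):
            bf = basis(k1, k2, n1, n2)
            row.append(sum(img[a][b] * bf[a][b]
                           for a in range(n1) for b in range(n2)))
        out.append(row)
    return out

def idct_by_accumulation(coeffs):
    """Rebuild the image by adding weighted basis functions one at a time."""
    n1, n2 = len(coeffs), len(coeffs[0])
    image = [[0.0] * n2 for _ in range(n1)]
    for k1 in range(n1):
        for k2 in range(n2):
            # Zero-frequency indices carry half weight in the inverse (DCT-III).
            weight = (0.5 if k1 == 0 else 1.0) * (0.5 if k2 == 0 else 1.0)
            gain = 4.0 / (n1 * n2) * weight * coeffs[k1][k2]
            bf = basis(k1, k2, n1, n2)
            for a in range(n1):
                for b in range(n2):
                    image[a][b] += gain * bf[a][b]
    return image

img = [[0.0, 255.0, 255.0, 0.0],
       [255.0, 0.0, 0.0, 255.0],
       [255.0, 255.0, 255.0, 255.0],
       [255.0, 0.0, 0.0, 255.0]]

rebuilt = idct_by_accumulation(dct2_2d(img))
max_diff = max(abs(rebuilt[a][b] - img[a][b]) for a in range(4) for b in range(4))
print(max_diff < 1e-9)
```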

See also

- Discrete wavelet transform

- JPEG - Discrete cosine transform - Contains a potentially easier to understand example of DCT transformation

- List of Fourier-related transforms

- Modified discrete cosine transform

Notes

- ^ Algorithmic performance on modern hardware is typically not principally determined by simple arithmetic counts, and optimization requires substantial engineering effort to make best use, within its intrinsic limits, of available built-in hardware optimization.

- ^ The radix-4 step reduces the size $4N$ DFT to four size $N$ DFTs of real data, two of which are zero, and two of which are equal to one another by the even symmetry. This gives a single size $N$ FFT of real data plus $O(N)$ butterflies, once the trivial and/or duplicate parts are eliminated and/or merged.

- ^ The precise count of real arithmetic operations, and in particular the count of real multiplications, depends somewhat on the scaling of the transform definition. The $2N\log_2 N - N + 2$ count is for the DCT-II definition shown here; two multiplications can be saved if the transform is scaled by an overall $\sqrt{2}$ factor. Additional multiplications can be saved if one permits the outputs of the transform to be rescaled individually, as was shown by Arai, Agui & Nakajima (1988) for the size-8 case used in JPEG.

References

- ^ .

- ^ S2CID 118873224.

- ^ a b c d Alikhani, Darya (April 1, 2015). "Beyond resolution: Rosa Menkman's glitch art". POSTmatter. Archived from the original on 19 October 2019. Retrieved 19 October 2019.

- ^ a b c d e f Thomson, Gavin; Shah, Athar (2017). "Introducing HEIF and HEVC" (PDF). Apple Inc. Retrieved 5 August 2019.

- ^ S2CID 206619973.

- ^ S2CID 12270940.

- ^ a b c d e f g Barbero, M.; Hofmann, H.; Wells, N. D. (14 November 1991). "DCT source coding and current implementations for HDTV". EBU Technical Review (251). European Broadcasting Union: 22–33. Retrieved 4 November 2019.

- ^ a b c d e Lea, William (1994). "Video on demand: Research Paper 94/68". House of Commons Library. Retrieved 20 September 2019.

- ^ .

- ^ CCITT. September 1992. Retrieved 12 July 2019.

- .

- ISSN 0090-6778.

- ^ Dhamija, Swati; Jain, Priyanka (September 2011). "Comparative Analysis for Discrete Sine Transform as a suitable method for noise estimation". IJCSI International Journal of Computer Science. 8 (5, No. 3): 162–164 (162). Retrieved 4 November 2019.

- ISBN 9783642870378.

- S2CID 62725808.

- ISBN 9780786487974.

- ^ a b c "History of Video Compression". ITU-T. Joint Video Team (JVT) of ISO/IEC MPEG & ITU-T VCEG (ISO/IEC JTC1/SC29/WG11 and ITU-T SG16 Q.6). July 2002. pp. 11, 24–9, 33, 40–1, 53–6. Retrieved 3 November 2019.

- ^ ISBN 9780852967102.

- ISBN 9789812709998.

- S2CID 58446992.

- .

- ^ ISBN 9780387782638.

- ^ S2CID 897622.

- ^ a b Guckert, John (Spring 2012). "The Use of FFT and MDCT in MP3 Audio Compression" (PDF). University of Utah. Retrieved 14 July 2019.

- ^ a b Brandenburg, Karlheinz (1999). "MP3 and AAC Explained" (PDF). Archived (PDF) from the original on 2017-02-13.

- ^ a b Xiph.Org Foundation (2009-06-02). "Vorbis I specification - 1.1.2 Classification". Xiph.Org Foundation. Retrieved 2009-09-22.

- S2CID 13894279.

- S2CID 17045923.

- S2CID 16411333.

- ISBN 9780080477480.

- ^ a b c "What Is a JPEG? The Invisible Object You See Every Day". The Atlantic. 24 September 2013. Retrieved 13 September 2019.

- ^ a b c Pessina, Laure-Anne (12 December 2014). "JPEG changed our world". EPFL News. École Polytechnique Fédérale de Lausanne. Retrieved 13 September 2019.

- ^ ISSN 0018-1153.

- ^ ISBN 9780470857649.

- ^ ISBN 9781483298511.

- ^ ISBN 9781351396486.

- ^ ISBN 9781351396486.

- ^ ISBN 9783319610801.

- ^ ISBN 978-1-136-03410-7.

- ^ ISBN 9780470023631.

- ^ a b c d e Daniel Eran Dilger (June 8, 2010). "Inside iPhone 4: FaceTime video calling". AppleInsider. Retrieved June 9, 2010.

- ^ Medium.com. Netflix. Retrieved 20 October 2019.

- ^ a b "Video Developer Report 2019" (PDF). Bitmovin. 2019. Retrieved 5 November 2019.

- ISBN 9781351396486.

- ^ ISBN 978-0-07-142963-4.

DCT is used in most of the compression systems standardized by the Moving Picture Experts Group (MPEG), is the dominant technology for image compression. In particular, it is the core technology of MPEG-2, the system used for DVDs, digital television broadcasting, that has been used for many of the trials of digital cinema.

- ^ a b Baraniuk, Chris (15 October 2015). "Copy protections could come to JPegs". BBC News. BBC. Retrieved 13 September 2019.

- ISBN 978-1-101-61380-1.

- ISBN 978-1-4398-9928-1.

- ISBN 9780849318580.

- ^ a b "Apple ProRes 422 Codec Family". Library of Congress. 17 November 2014. Retrieved 13 October 2019.

- S2CID 119888170.

- ^ S2CID 2060937.

- BT.com. BT Group. 31 May 2018. Retrieved 5 August 2019.

- Nokia Technologies. Retrieved 5 August 2019.

- ^ Alakuijala, Jyrki; Sneyers, Jon; Versari, Luca; Wassenberg, Jan (22 January 2021). "JPEG XL White Paper" (PDF). JPEG Org. Archived (PDF) from the original on 2 May 2021. Retrieved 14 Jan 2022.

Variable-sized DCT (square or rectangular from 2x2 to 256x256) serves as a fast approximation of the optimal decorrelating transform.

- ^ a b Wang, Yao (2006). "Video Coding Standards: Part I" (PDF). Archived from the original (PDF) on 2013-01-23.

- ^ Wang, Yao (2006). "Video Coding Standards: Part II" (PDF). Archived from the original (PDF) on 2013-01-23.

- ISBN 9781461560319.

- ^ S2CID 56983045.

- ^ Davis, Andrew (13 June 1997). "The H.320 Recommendation Overview". EE Times. Retrieved 7 November 2019.

- ISBN 9780780341470.

H.263 is similar to, but more complex than H.261. It is currently the most widely used international video compression standard for video telephony on ISDN (Integrated Services Digital Network) telephone lines.

- ^ Peter de Rivaz; Jack Haughton (2018). "AV1 Bitstream & Decoding Process Specification" (PDF). Alliance for Open Media. Retrieved 2022-01-14.

- ^ YouTube Developers (15 September 2018). "AV1 Beta Launch Playlist". YouTube. Retrieved 14 January 2022.

The first videos to receive YouTube's AV1 transcodes.

- ^ Brinkmann, Martin (13 September 2018). "How to enable AV1 support on YouTube". Retrieved 14 January 2022.

- ^ Netflix Technology Blog (5 February 2020). "Netflix Now Streaming AV1 on Android". Retrieved 14 January 2022.

- ^ Netflix Technology Blog (9 November 2021). "Bringing AV1 Streaming to Netflix Members' TVs". Retrieved 14 January 2022.

- arXiv:1602.04845.

- ^ "Opus Codec". Opus (Home page). Xiph.org Foundation. Retrieved July 31, 2012.

- ^ Leyden, John (27 October 2015). "WhatsApp laid bare: Info-sucking app's innards probed". The Register. Retrieved 19 October 2019.

- ISBN 9789811068980.

- S2CID 214034702.

- ^ "Open Source Software used in PlayStation 4". Sony Interactive Entertainment Inc. Retrieved 2017-12-11.

- Dolby Laboratories. June 2015. Archived from the original (PDF) on 30 May 2019. Retrieved 11 November 2019.

- S2CID 30821673.

- Fraunhofer IIS. Audio Engineering Society. Retrieved 20 October 2019.

- Fraunhofer IIS. Audio Engineering Society. Retrieved 24 October 2019.

- ^ ISBN 9780470377864.

- ^ "ITU-T Work Programme". ITU.

- ^ Terriberry, Timothy B. Presentation of the CELT codec. Event occurs at 65 minutes. Archived from the original on 2011-08-07. Retrieved 2019-10-19., also "CELT codec presentation slides" (PDF).

- ^ "Ekiga 3.1.0 available". Archived from the original on 2011-09-30. Retrieved 2019-10-19.

- ^ "☏ FreeSWITCH". SignalWire.

- Fraunhofer IIS. March 2017. Retrieved 19 October 2019.

- PMID 18282969

- ^ Song, J.; Xiong, Z.; Liu, X.; Liu, Y., "An algorithm for layered video coding and transmission", Proc. Fourth Int. Conf./Exh. High Performance Comput. Asia-Pacific Region, 2: 700–703

- S2CID 18016215

- S2CID 1757438.

- ^ Queiroz, R. L.; Nguyen, T. Q. (1996). "Lapped transforms for efficient transform/subband coding". IEEE Trans. Signal Process. 44 (5): 497–507.

- ^ Malvar 1992.

- hdl:10722/42775.

- ^ ISBN 9780123744579.

- PC Magazine. Retrieved 19 October 2019.

- ISBN 978-90-816021-6-7. Retrieved 19 October 2019.

- ISBN 9781597110938.

- ^ Colberg, Jörg (April 17, 2009). "Review: jpegs by Thomas Ruff".

- ^ "Discrete cosine transform - MATLAB dct". www.mathworks.com. Retrieved 2019-07-11.

- ISBN 9780442012724.

- ^ Arai, Y.; Agui, T.; Nakajima, M. (1988). "A fast DCT-SQ scheme for images". IEICE Transactions. 71 (11): 1095–1097.

- S2CID 986733.

- ^ Malvar 1992

- ^ Martucci 1994

- ^ a b Alshibami, O.; Boussakta, S. (July 2001). "Three-dimensional algorithm for the 3-D DCT-III". Proc. Sixth Int. Symp. Commun., Theory Applications: 104–107.

- ^ Nussbaumer, H.J. (1981). Fast Fourier transform and convolution algorithms (1st ed.). New York: Springer-Verlag.

- S2CID 986733.

Further reading

- Narasimha, M.; Peterson, A. (June 1978). "On the Computation of the Discrete Cosine Transform". IEEE Transactions on Communications. 26 (6): 934–936.

- Makhoul, J. (February 1980). "A fast cosine transform in one and two dimensions". IEEE Transactions on Acoustics, Speech, and Signal Processing. 28 (1): 27–34.

- Sorensen, H.; Jones, D.; Heideman, M.; Burrus, C. (June 1987). "Real-valued fast Fourier transform algorithms". IEEE Transactions on Acoustics, Speech, and Signal Processing. 35 (6): 849–863.

- Duhamel, P.; Vetterli, M. (April 1990). "Fast Fourier transforms: A tutorial review and a state of the art". Signal Processing (Submitted manuscript). 19 (4): 259–299.

- Feig, E.; Winograd, S. (September 1992b). "Fast algorithms for the discrete cosine transform". IEEE Transactions on Signal Processing. 40 (9): 2174–2193.

- Malvar, Henrique (1992), Signal Processing with Lapped Transforms, Boston: Artech House, ISBN 978-0-89006-467-2

- Martucci, S. A. (May 1994). "Symmetric convolution and the discrete sine and cosine transforms". IEEE Transactions on Signal Processing. 42 (5): 1038–1051.

- Oppenheim, Alan; Schafer, Ronald; Buck, John (1999), Discrete-Time Signal Processing (2nd ed.), Upper Saddle River, N.J: Prentice Hall, ISBN 978-0-13-754920-7

- Frigo, M.; Johnson, S. G. (February 2005). "The Design and Implementation of FFTW3" (PDF). Proceedings of the IEEE. 93 (2): 216–231. S2CID 6644892.

- Boussakta, Said; Alshibami, Hamoud O. (April 2004). "Fast Algorithm for the 3-D DCT-II" (PDF). IEEE Transactions on Signal Processing. 52 (4): 992–1000. S2CID 3385296.

- Cheng, L. Z.; Zeng, Y. H. (2003). "New fast algorithm for multidimensional type-IV DCT". IEEE Transactions on Signal Processing. 51 (1): 213–220.

- Wen-Hsiung Chen; Smith, C.; Fralick, S. (September 1977). "A Fast Computational Algorithm for the Discrete Cosine Transform". IEEE Transactions on Communications. 25 (9): 1004–1009.

- Press, WH; Teukolsky, SA; Vetterling, WT; Flannery, BP (2007), "Section 12.4.2. Cosine Transform", Numerical Recipes: The Art of Scientific Computing (3rd ed.), New York: Cambridge University Press, ISBN 978-0-521-88068-8, archived from the original on 2011-08-11, retrieved 2011-08-13

External links

- Syed Ali Khayam: The Discrete Cosine Transform (DCT): Theory and Application

- Implementation of MPEG integer approximation of 8x8 IDCT (ISO/IEC 23002-2)

- Matteo Frigo and Steven G. Johnson: FFTW, FFTW Home Page. A free (GPL) C library that can compute fast DCTs (types I-IV) in one or more dimensions, of arbitrary size.

- Takuya Ooura: General Purpose FFT Package, FFT Package 1-dim / 2-dim. Free C & FORTRAN libraries for computing fast DCTs (types II–III) in one, two or three dimensions, power of 2 sizes.

- Tim Kientzle: Fast algorithms for computing the 8-point DCT and IDCT, Algorithm Alley.

- LTFAT is a free Matlab/Octave toolbox with interfaces to the FFTW implementation of the DCTs and DSTs of type I-IV.

DCT-I:

$$X_k = \frac{1}{2}\left(x_0 + (-1)^k x_{N-1}\right) + \sum_{n=1}^{N-2} x_n \cos\left[\frac{\pi}{N-1}\, n\, k\right] \qquad \text{for } k = 0, \ldots, N-1.$$

DCT-II:

$$X_k = \sum_{n=0}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \tfrac{1}{2}\right) k\right] \qquad \text{for } k = 0, \ldots, N-1.$$

DCT-III:

$$X_k = \tfrac{1}{2} x_0 + \sum_{n=1}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(k + \tfrac{1}{2}\right) n\right] \qquad \text{for } k = 0, \ldots, N-1.$$

DCT-IV:

$$X_k = \sum_{n=0}^{N-1} x_n \cos\left[\frac{\pi}{N}\left(n + \tfrac{1}{2}\right)\left(k + \tfrac{1}{2}\right)\right] \qquad \text{for } k = 0, \ldots, N-1.$$
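The DCT-II and DCT-III formulas above can be evaluated directly from their definitions. The following is a minimal sketch in plain Python, not a fast algorithm: it costs O(N²) operations and uses the unnormalized forms exactly as written. The function names `dct2`, `dct3`, and `idct2` are illustrative choices, not part of any library. With these conventions, applying the DCT-III to the output of the DCT-II returns the input scaled by N/2, so the inverse multiplies by 2/N.

```python
import math


def dct2(x):
    """Unnormalized DCT-II: X_k = sum_n x_n * cos[pi/N * (n + 1/2) * k]."""
    N = len(x)
    return [
        sum(x[n] * math.cos(math.pi / N * (n + 0.5) * k) for n in range(N))
        for k in range(N)
    ]


def dct3(X):
    """Unnormalized DCT-III: x_k = X_0/2 + sum_{n>=1} X_n * cos[pi/N * (k + 1/2) * n]."""
    N = len(X)
    return [
        0.5 * X[0]
        + sum(X[n] * math.cos(math.pi / N * (k + 0.5) * n) for n in range(1, N))
        for k in range(N)
    ]


def idct2(X):
    """Inverse of the unnormalized DCT-II: the DCT-III scaled by 2/N."""
    N = len(X)
    return [2.0 / N * v for v in dct3(X)]


if __name__ == "__main__":
    signal = [1.0, 2.0, 3.0, 4.0]
    coeffs = dct2(signal)
    # The k = 0 coefficient is the plain sum of the samples.
    print(coeffs[0])
    # Round trip recovers the samples up to floating-point error.
    print(idct2(coeffs))
```

Library implementations often apply a different scaling (for example an orthonormal one), so coefficients from this sketch may differ from theirs by constant factors.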

Two-dimensional DCT-II:

$$\begin{aligned}
X_{k_1,k_2} &= \sum_{n_1=0}^{N_1-1}\left(\sum_{n_2=0}^{N_2-1} x_{n_1,n_2} \cos\left[\frac{\pi}{N_2}\left(n_2 + \frac{1}{2}\right) k_2\right]\right) \cos\left[\frac{\pi}{N_1}\left(n_1 + \frac{1}{2}\right) k_1\right] \\
&= \sum_{n_1=0}^{N_1-1} \sum_{n_2=0}^{N_2-1} x_{n_1,n_2} \cos\left[\frac{\pi}{N_1}\left(n_1 + \frac{1}{2}\right) k_1\right] \cos\left[\frac{\pi}{N_2}\left(n_2 + \frac{1}{2}\right) k_2\right].
\end{aligned}$$

Three-dimensional DCT-II:

$$X_{k_1,k_2,k_3} = \sum_{n_1=0}^{N_1-1} \sum_{n_2=0}^{N_2-1} \sum_{n_3=0}^{N_3-1} x_{n_1,n_2,n_3} \cos\left[\frac{\pi}{N_1}\left(n_1 + \frac{1}{2}\right) k_1\right] \cos\left[\frac{\pi}{N_2}\left(n_2 + \frac{1}{2}\right) k_2\right] \cos\left[\frac{\pi}{N_3}\left(n_3 + \frac{1}{2}\right) k_3\right], \quad \text{for } k_i = 0, 1, 2, \dots, N_i - 1.$$

Inverse of the three-dimensional DCT-II:

$$x_{n_1,n_2,n_3} = \sum_{k_1=0}^{N_1-1} \sum_{k_2=0}^{N_2-1} \sum_{k_3=0}^{N_3-1} X_{k_1,k_2,k_3} \cos\left[\frac{\pi}{N_1}\left(n_1 + \frac{1}{2}\right) k_1\right] \cos\left[\frac{\pi}{N_2}\left(n_2 + \frac{1}{2}\right) k_2\right] \cos\left[\frac{\pi}{N_3}\left(n_3 + \frac{1}{2}\right) k_3\right], \quad \text{for } n_i = 0, 1, 2, \dots, N_i - 1.$$
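The two lines of the two-dimensional DCT-II formula above express separability: the double sum equals a one-dimensional DCT-II along each row followed by a one-dimensional DCT-II along each column. The sketch below checks this numerically in plain Python, assuming the unnormalized definition and a rectangular list-of-lists input. The helper names `dct2_1d`, `dct2_2d_separable`, and `dct2_2d_direct` are hypothetical, chosen only for this illustration.

```python
import math


def dct2_1d(x):
    """Unnormalized 1-D DCT-II of a sequence."""
    N = len(x)
    return [
        sum(x[n] * math.cos(math.pi / N * (n + 0.5) * k) for n in range(N))
        for k in range(N)
    ]


def dct2_2d_separable(a):
    """2-D DCT-II computed as row transforms followed by column transforms."""
    # Transform along n2 (each row): result is indexed [n1][k2].
    rows = [dct2_1d(row) for row in a]
    # Transform along n1 (each column of the row result): indexed [k2][k1].
    cols = [dct2_1d(list(col)) for col in zip(*rows)]
    # Transpose back so the output is indexed [k1][k2].
    return [list(r) for r in zip(*cols)]


def dct2_2d_direct(a):
    """2-D DCT-II evaluated directly from the double sum."""
    N1, N2 = len(a), len(a[0])
    return [
        [
            sum(
                a[n1][n2]
                * math.cos(math.pi / N1 * (n1 + 0.5) * k1)
                * math.cos(math.pi / N2 * (n2 + 0.5) * k2)
                for n1 in range(N1)
                for n2 in range(N2)
            )
            for k2 in range(N2)
        ]
        for k1 in range(N1)
    ]


if __name__ == "__main__":
    block = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
    sep = dct2_2d_separable(block)
    direct = dct2_2d_direct(block)
    # Largest absolute difference between the two results (rounding error only).
    print(max(abs(s - d) for rs, rd in zip(sep, direct) for s, d in zip(rs, rd)))
```

The row-column form needs far fewer operations than the direct double sum for large blocks, and the same idea extends to three dimensions by adding a third pass along the remaining axis.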

Operation-count expressions for the 3-D DCT:

$$\left[\log_2 N\right]$$

$$\left[\tfrac{1}{8}\, N^3 \log_2 N\right]$$

$$\left[\tfrac{7}{8}\, N^3 \log_2 N\right],$$

$$\underbrace{\left[3 N^3 \log_2 N\right]}_{\text{Real}} + \underbrace{\left[\frac{3}{2} N^3 \log_2 N - 3N^3 + 3N^2\right]}_{\text{Recursive}} = \left[\frac{9}{2} N^3 \log_2 N - 3N^3 + 3N^2\right].$$

$$\left[\frac{3}{2} N^3 \log_2 N\right]$$

$$\left[\frac{9}{2} N^3 \log_2 N - 3N^3 + 3N^2\right],$$