Cognitive bias


A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment.

While cognitive biases may initially appear to be negative, some are adaptive. They may lead to more effective actions in a given context.[5] Furthermore, allowing cognitive biases enables faster decisions, which can be desirable when timeliness is more valuable than accuracy, as illustrated in heuristics.[6] Other cognitive biases are a "by-product" of human processing limitations,[1] resulting from a lack of appropriate mental mechanisms (bounded rationality), the impact of an individual's constitution and biological state (see embodied cognition), or simply from a limited capacity for information processing.[7][8] Research suggests that cognitive biases can make individuals more inclined to endorse pseudoscientific beliefs by leading them to require less evidence for claims that confirm their preconceptions. This can distort their perceptions and lead to inaccurate judgments.[9]

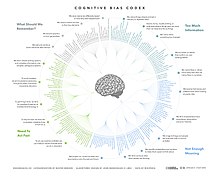

A continually evolving list of cognitive biases has been identified over the last six decades of research on human judgment and decision-making in cognitive science, social psychology, and behavioral economics. The study of cognitive biases has practical implications for areas including clinical judgment, entrepreneurship, finance, and management.[10][11]

Overview

The notion of cognitive biases was introduced by Amos Tversky and Daniel Kahneman in 1972.

The "Linda Problem" illustrates the representativeness heuristic (Tversky & Kahneman, 1983[14]). Participants were given a description of "Linda" that suggests Linda might well be a feminist (e.g., she is said to be concerned about discrimination and social justice issues). They were then asked whether they thought Linda was more likely to be (a) a "bank teller" or (b) a "bank teller and active in the feminist movement." A majority chose answer (b). Regardless of the information given about Linda, however, the more restrictive answer (b) can never be more probable than answer (a), because every bank teller who is active in the feminist movement is also a bank teller. This is an example of the "conjunction fallacy". Tversky and Kahneman argued that respondents chose (b) because it seemed more "representative" or typical of persons who might fit the description of Linda. The representativeness heuristic may lead to errors such as activating stereotypes and making inaccurate judgments of others (Haselton et al., 2005, p. 726).
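The statistical point behind the Linda problem is the conjunction rule: for any two events A and B, P(A and B) ≤ P(A), because every case in which both hold is also a case in which A holds. The minimal sketch below checks this by counting over a small population; the population and its split are invented for illustration and are not data from Tversky and Kahneman's study.

```python
from dataclasses import dataclass


@dataclass
class Person:
    # Hypothetical attributes, invented for illustration only.
    bank_teller: bool
    feminist: bool


def probability(population, predicate):
    """Fraction of the population for which predicate(person) is True."""
    return sum(1 for p in population if predicate(p)) / len(population)


# A made-up population of 100 people; the split is arbitrary.
population = (
    [Person(bank_teller=True, feminist=True)] * 3
    + [Person(bank_teller=True, feminist=False)] * 2
    + [Person(bank_teller=False, feminist=True)] * 60
    + [Person(bank_teller=False, feminist=False)] * 35
)

p_teller = probability(population, lambda p: p.bank_teller)
p_teller_and_feminist = probability(population, lambda p: p.bank_teller and p.feminist)

print(f"P(bank teller)              = {p_teller:.2f}")
print(f"P(bank teller and feminist) = {p_teller_and_feminist:.2f}")

# The conjunction can never be more probable than either of its parts,
# whatever counts are chosen for the population above.
assert p_teller_and_feminist <= p_teller
```

Changing the counts changes both probabilities, but the final assertion holds for any population, which is why answer (b) cannot be the more likely one.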

Critics of Kahneman and Tversky, such as

Definitions

| Definition | Source |
|---|---|
| "bias ... that occurs when humans are processing and interpreting information" | ISO/IEC TR 24027:2021(en), 3.2.4,[16] ISO/IEC TR 24368:2022(en), 3.8[17] |

Types

Biases can be distinguished on a number of dimensions. Examples of cognitive biases include:

- Biases specific to groups (such as the risky shift) versus biases at the individual level.

- Biases that affect decision-making, such as the sunk cost fallacy.

- Biases, such as illusory correlation, that affect judgment of how likely something is or whether one thing is the cause of another.

- Biases that affect memory,[18] such as consistency bias (remembering one's past attitudes and behavior as more similar to one's present attitudes).

- Biases that reflect a subject's motivation,[19] for example, the desire for a positive self-image leading to egocentric bias and the avoidance of unpleasant cognitive dissonance.[20]
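A decision that avoids the sunk cost fallacy compares options only by their future costs and benefits: money already spent cannot be recovered whichever option is chosen, so it should not change the ranking. The sketch below contrasts such a rule with a hypothetical "biased" rule that credits options for resources already invested in them. The option names, the numbers, and the form of the bias (`weight * sunk_cost`) are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    future_benefit: float   # value still to be gained from this option
    future_cost: float      # cost still to be paid for this option
    sunk_cost: float = 0.0  # already spent and unrecoverable


def rational_choice(options):
    """Pick the option with the best future net value; sunk costs are ignored."""
    return max(options, key=lambda o: o.future_benefit - o.future_cost)


def sunk_cost_choice(options, weight=1.0):
    """Hypothetical biased rule: treats past spending as if it added value."""
    return max(
        options,
        key=lambda o: o.future_benefit - o.future_cost + weight * o.sunk_cost,
    )


options = [
    Option("finish the old project", future_benefit=50, future_cost=40, sunk_cost=100),
    Option("start a new project", future_benefit=80, future_cost=40),
]

print(rational_choice(options).name)   # start a new project
print(sunk_cost_choice(options).name)  # finish the old project
```

The only difference between the two rules is whether `sunk_cost` enters the comparison; including it flips the choice toward the option with the lower future payoff.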

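Other biases are due to the particular way the brain perceives, forms memories and makes judgments. This distinction is sometimes described as "hot cognition" versus "cold cognition", as motivated reasoning can involve a state of arousal. Among the "cold" biases: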

- some are due to ignoring relevant information (e.g., neglect of probability),

- some involve a decision or judgment being affected by irrelevant information (for example the framing effect where the same problem receives different responses depending on how it is described; or the distinction bias where choices presented together have different outcomes than those presented separately), and

- others give excessive weight to an unimportant but salient feature of the problem (e.g., anchoring).
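The framing effect listed above concerns problems that are identical in outcome but described differently. The sketch below, loosely following the structure of the well-known "disease problem" from framing research, describes the same two options once in terms of lives saved and once in terms of deaths, and checks that all four descriptions have the same expected number of survivors. The group size and probabilities are illustrative; exact fractions are used so the comparison is not affected by floating-point rounding.

```python
from fractions import Fraction

TOTAL = 600  # size of the hypothetical at-risk group


def expected_survivors(outcomes):
    """outcomes: list of (probability, survivors) pairs."""
    return sum(p * survivors for p, survivors in outcomes)


def from_deaths(outcomes):
    """Convert (probability, deaths) pairs to (probability, survivors) pairs."""
    return [(p, TOTAL - deaths) for p, deaths in outcomes]


# "Gain" frame: options described in terms of lives saved.
saved_sure = [(Fraction(1), 200)]
saved_risky = [(Fraction(1, 3), 600), (Fraction(2, 3), 0)]

# "Loss" frame: the same options described in terms of deaths.
die_sure = from_deaths([(Fraction(1), 400)])
die_risky = from_deaths([(Fraction(1, 3), 0), (Fraction(2, 3), 600)])

for label, option in [("saved, sure", saved_sure), ("saved, risky", saved_risky),
                      ("deaths, sure", die_sure), ("deaths, risky", die_risky)]:
    print(f"{label:14} expected survivors = {expected_survivors(option)}")
```

Because the expected outcomes are identical, any systematic difference in which option people prefer under the two descriptions is attributable to the framing itself.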

Some biases reflect motivation, specifically the motivation to have positive attitudes toward oneself.

Some cognitive biases belong to the subgroup of attentional biases, which involve paying increased attention to certain stimuli. It has been shown, for example, that people addicted to alcohol and other drugs pay more attention to drug-related stimuli. Common psychological tests to measure these biases are the Stroop task[21][22] and the dot probe task.
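In a typical dot probe trial, two stimuli (for example, one drug-related and one neutral) appear briefly, and a probe then appears in the location of one of them; participants respond to the probe as quickly as possible. A common summary score is the difference in mean reaction time between trials where the probe replaces the neutral stimulus and trials where it replaces the drug-related one. The sketch below computes that score; the reaction times are invented, and real analyses usually also exclude error trials and outliers, which this sketch omits.

```python
from statistics import mean

# Each trial: (probe_replaced_drug_cue, reaction_time_ms).
# These reaction times are invented for illustration.
trials = [
    (True, 512), (True, 498), (True, 505), (True, 520),
    (False, 541), (False, 530), (False, 552), (False, 537),
]


def attentional_bias_score(trials):
    """Mean RT when the probe replaces the neutral cue minus mean RT when it
    replaces the drug-related cue. Positive values suggest attention was
    already resting on the drug-related cue."""
    congruent = [rt for at_cue, rt in trials if at_cue]
    incongruent = [rt for at_cue, rt in trials if not at_cue]
    return mean(incongruent) - mean(congruent)


print(attentional_bias_score(trials))
```

A score near zero would indicate no systematic difference between the two trial types; with the toy numbers above the score is positive.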

Individuals' susceptibility to some types of cognitive biases can be measured by the Cognitive Reflection Test (CRT) developed by Shane Frederick (2005).[23][24]
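CRT items are short problems with an intuitive but wrong answer. The best-known is the "bat and ball" problem: a bat and a ball cost $1.10 in total, and the bat costs $1.00 more than the ball; how much does the ball cost? The sketch below works the item in integer cents (to avoid floating-point rounding) and checks both the quick answer and the reflective one against the problem's constraints.

```python
TOTAL_CENTS = 110       # bat + ball
DIFFERENCE_CENTS = 100  # bat - ball

# From ball + (ball + DIFFERENCE) = TOTAL, ball = (TOTAL - DIFFERENCE) / 2.
# The numerator here is even, so integer division is exact.
ball = (TOTAL_CENTS - DIFFERENCE_CENTS) // 2
bat = ball + DIFFERENCE_CENTS

intuitive_ball = TOTAL_CENTS - DIFFERENCE_CENTS  # the quick "10 cents" answer


def satisfies_problem(ball_cents):
    """True if this ball price meets both conditions of the problem."""
    bat_cents = ball_cents + DIFFERENCE_CENTS
    return ball_cents + bat_cents == TOTAL_CENTS


print(f"ball = {ball} cents, bat = {bat} cents")
print("intuitive answer checks out:", satisfies_problem(intuitive_ball))
print("reflective answer checks out:", satisfies_problem(ball))
```

The intuitive answer of 10 cents would make the bat cost $1.10 and the total $1.20, which is the kind of check the test is designed to see whether people perform.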

List of biases

The following is a list of the more commonly studied cognitive biases:

| Name | Description |
|---|---|
| Fundamental attribution error (FAE, aka correspondence bias[25]) | Tendency to overemphasize personality-based explanations for behaviors observed in others, while under-emphasizing the role and power of situational influences on the same behavior. Edward E. Jones and Victor A. Harris' (1967)[26] classic study illustrates the FAE. Despite being told that the position taken in a speech (pro-Castro or anti-Castro) had been assigned to its writer, participants ignored these situational pressures and attributed pro-Castro attitudes to the writer when the speech expressed such attitudes. |
| Implicit bias (aka implicit stereotype, unconscious bias) | Tendency to attribute positive or negative qualities to a group of individuals. It may have no factual basis at all, or it may be an unwarranted generalization of a trait that is frequent in a group to every member of that group. |
| Priming bias | Tendency to let the first presentation of an issue shape one's preconceived idea of it, which may then be adjusted in light of later information. |
| Confirmation bias | Tendency to search for or interpret information in a way that confirms one's preconceptions, and to discredit information that does not support the initial opinion.[27] Related to the concept of cognitive dissonance, in that individuals may reduce inconsistency by searching for information that reconfirms their views (Jermias, 2001, p. 146).[28] |
| Affinity bias | Tendency to be favorably biased toward people most like ourselves.[29] |
| Self-serving bias | Tendency to claim more responsibility for successes than for failures. It may also manifest itself as a tendency for people to evaluate ambiguous information in a way beneficial to their interests. |
| Belief bias | Tendency to evaluate the logical strength of an argument based on one's current beliefs and the perceived plausibility of the argument's conclusion. |
| Framing | Tendency to narrow the description of a situation in order to guide others toward a selected conclusion. The same information can be framed differently and therefore lead to different conclusions. |
| Hindsight bias | Tendency to view past events as having been predictable. Also called the "I-knew-it-all-along" effect. |
| Embodied cognition | Tendency to have selectivity in perception, attention, decision making, and motivation based on the biological state of the body. |
| Anchoring bias | Tendency to adjust insufficiently from an initial starting point (the anchor) when arriving at a final answer, which can lead people to make sub-optimal decisions. Anchoring affects decision making in negotiations, medical diagnoses, and judicial sentencing.[30] |
| Status quo bias | Tendency to hold to the current situation rather than an alternative situation, to avoid risk and loss (loss aversion).[31] In status quo bias, a decision-maker has an increased propensity to choose an option because it is the default option or status quo. It has been shown to affect various important economic decisions, for example, the choice of car insurance or electrical service.[32] |
| Overconfidence effect | Tendency to place too much trust in one's own ability to make correct decisions. People tend to overrate their abilities and skills as decision makers.[33] See also the Dunning–Kruger effect. |
| Physical attractiveness stereotype | The tendency to assume people who are physically attractive also possess other desirable personality traits.[34] |
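The status quo bias entry above ties the bias to loss aversion. One standard way to express loss aversion is the value function of prospect theory, in which a loss reduces subjective value more than an equal-sized gain increases it. The sketch below uses the parameter estimates often quoted from Tversky and Kahneman's 1992 work on cumulative prospect theory (curvature 0.88, loss-aversion coefficient 2.25); treat them as illustrative values, not as figures from the studies cited in this article.

```python
ALPHA = 0.88   # curvature for gains (illustrative estimate)
BETA = 0.88    # curvature for losses (illustrative estimate)
LAMBDA = 2.25  # loss-aversion coefficient (illustrative estimate)


def value(x):
    """Prospect-theory-style subjective value of a change x from the reference point."""
    if x >= 0:
        return x ** ALPHA
    return -LAMBDA * (-x) ** BETA


gain, loss = value(100), value(-100)
print(f"value(+100) = {gain:.1f}, value(-100) = {loss:.1f}")

# A 50/50 gamble to win or lose 100 has an expected monetary value of 0,
# but a negative subjective value, so keeping the status quo looks better.
print(f"50/50 gamble: {0.5 * gain + 0.5 * loss:.1f}")
```

With `LAMBDA` above 1, any fair gamble around the current position is valued below zero, which is one way loss aversion can favor sticking with the default.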

Practical significance

Many social institutions rely on individuals to make rational judgments.

The securities regulation regime largely assumes that all investors act as perfectly rational persons. In truth, actual investors face cognitive limitations from biases, heuristics, and framing effects.

A fair

In some academic disciplines, the study of bias is very popular. In entrepreneurship research, for instance, bias is a widespread and well-studied phenomenon, because most decisions that concern the minds and hearts of entrepreneurs are computationally intractable.[11]

Cognitive biases can also cause problems in everyday life. One study examined how approach bias, a specific cognitive bias, and inhibitory control relate to how much unhealthy snack food a person eats.[36] Participants who ate more of the unhealthy snack food tended to have weaker inhibitory control and to rely more on approach bias. Others have also hypothesized that cognitive biases could be linked to various eating disorders and to how people view their bodies and body image.[37][38]

It has also been argued that cognitive biases can be used in destructive ways.[39] Some believe that people in authority use cognitive biases and heuristics to manipulate others in pursuit of their own goals. Some medications and other health care treatments are promoted in ways that rely on cognitive biases to persuade susceptible people to use these products. Many see this as taking advantage of people's natural difficulties with judgment and decision-making, and believe that it is the government's responsibility to regulate such misleading advertisements.

Cognitive biases also seem to play a role in property sale prices and valuations. In one experiment, participants were shown a residential property.[40] They were then shown a second, completely unrelated property and asked to estimate its value and sale price. Seeing the first, unrelated property affected how participants valued the second one.
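When an experiment gives one group a low anchor and another group a high anchor, one common way to quantify anchoring is an "anchoring index": the difference between the groups' median estimates divided by the distance between the two anchors. This is an assumption about how such data could be summarized, not necessarily the measure used in the property study cited above, and the estimates below are invented.

```python
from statistics import median


def anchoring_index(low_group, high_group, low_anchor, high_anchor):
    """(median high-anchor estimate - median low-anchor estimate) divided by
    the distance between the anchors. 0 means no anchoring; 1 means the
    estimates moved as far as the anchors did."""
    if high_anchor == low_anchor:
        raise ValueError("anchors must differ")
    return (median(high_group) - median(low_group)) / (high_anchor - low_anchor)


# Hypothetical property-value estimates (in thousands) from two groups that
# first saw an unrelated property listed at 200k (low) or 400k (high).
low_group = [240, 255, 250, 262, 245]
high_group = [290, 305, 300, 310, 295]

print(f"anchoring index = {anchoring_index(low_group, high_group, 200, 400):.2f}")
```

Medians are used rather than means so that a few extreme estimates do not dominate the comparison.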

Cognitive biases can be used in non-destructive ways. In team science and collective problem-solving, the

Reducing

Because they cause

Similar to Gigerenzer (1996),[43] Haselton et al. (2005) state that the content and direction of cognitive biases are not "arbitrary" (p. 730).[1] Moreover, cognitive biases can be controlled. One debiasing technique aims to decrease biases by encouraging individuals to use controlled rather than automatic processing.[25] In relation to reducing the FAE, monetary incentives[44] and informing participants that they will be held accountable for their attributions[45] have been linked to an increase in accurate attributions. Training has also been shown to reduce cognitive bias. Carey K. Morewedge and colleagues (2015) found that research participants exposed to one-shot training interventions, such as educational videos and debiasing games that taught mitigating strategies, exhibited significant reductions in their commission of six cognitive biases, both immediately and up to 3 months later.[46]

Cognitive bias modification refers to the process of modifying cognitive biases in healthy people, and also to a growing area of psychological (non-pharmaceutical) therapies for anxiety, depression and addiction called cognitive bias modification therapy (CBMT). CBMT is a subgroup of therapies within a growing area of psychological therapies based on modifying cognitive processes, with or without accompanying medication and talk therapy, sometimes referred to as applied cognitive processing therapies (ACPT). Although cognitive bias modification can refer to modifying cognitive processes in healthy individuals, CBMT is a growing area of evidence-based psychological therapy in which cognitive processes are modified to relieve suffering[47][48] from serious depression,[49] anxiety,[50] and addiction.[51] CBMT techniques are technology-assisted therapies delivered via a computer, with or without clinician support. CBM combines evidence and theory from the cognitive model of anxiety,[52] cognitive neuroscience,[53] and attentional models.[54]
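One well-known computer-delivered procedure in this area is attention bias modification, which reuses the dot probe task with a changed contingency: during assessment the probe is equally likely to follow either stimulus, while during training it always appears where the neutral stimulus was, so that attention is repeatedly drawn away from threat-related cues. The sketch below generates trials for both modes; the function names and the left/right layout are hypothetical, and it is not presented as the protocol used in the studies cited above.

```python
import random


def make_trials(n, training, rng):
    """Generate dot probe trials as (threat_side, probe_side) pairs.

    In assessment mode the probe follows the threat cue half the time on
    average. In training mode it always appears where the neutral cue was."""
    trials = []
    for _ in range(n):
        threat_side = rng.choice(["left", "right"])
        neutral_side = "right" if threat_side == "left" else "left"
        if training:
            probe_side = neutral_side
        else:
            probe_side = rng.choice([threat_side, neutral_side])
        trials.append((threat_side, probe_side))
    return trials


def share_at_threat(trials):
    """Fraction of trials on which the probe appeared at the threat cue."""
    return sum(threat == probe for threat, probe in trials) / len(trials)


rng = random.Random(0)  # fixed seed so the sequence is reproducible
training = make_trials(100, training=True, rng=rng)
assessment = make_trials(100, training=False, rng=rng)

print("training:", share_at_threat(training))      # always 0.0
print("assessment:", share_at_threat(assessment))  # roughly 0.5
```

The only difference between the two modes is the probe contingency, which is the property such training relies on.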

Cognitive bias modification has also been used to help people with obsessive-compulsive beliefs and obsessive-compulsive disorder.[55][56] This therapy has been shown to decrease obsessive-compulsive beliefs and behaviors.

Common theoretical causes of some cognitive biases

Bias arises from various processes that are sometimes difficult to distinguish. These include:

- Bounded rationality — limits on optimization and rationality

- Evolutionary psychology — Remnants from evolutionary adaptive mental functions.[57]

- Mental accounting

- Adaptive bias — basing decisions on limited information and biasing them based on the costs of being wrong

- Attribute substitution — making a complex, difficult judgment by unconsciously replacing it with an easier judgment[58]

- Attribution theory

- Cognitive dissonance, and related:

- Information-processing shortcuts (heuristics),[59] including:

  - Availability heuristic — estimating what is more likely by what is more available in memory, which is biased toward vivid, unusual, or emotionally charged examples[6]

  - Representativeness heuristic — judging probabilities based on resemblance[6]

  - Affect heuristic — basing a decision on an emotional reaction rather than a calculation of risks and benefits[60]

- Emotional and moral motivations[61] deriving, for example, from:

  - The two-factor theory of emotion

  - The somatic markers hypothesis

- Introspection illusion

- Misinterpretations or innumeracy.

- Social influence[62]

- The brain's limited information processing capacity[63]

- Noisy information processing (distortions during storage in and retrieval from memory), which has been linked to biases including overconfidence and the hard–easy effect.
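The availability heuristic listed above estimates frequency from the examples that come to mind. If vivid events are more likely to be recalled, the recalled sample over-represents them even when memory is otherwise accurate. The toy model below makes that arithmetic explicit; the event counts and recall probabilities are hypothetical numbers chosen only to illustrate the mechanism.

```python
def estimated_share(true_counts, recall_prob):
    """Share of each event type among the examples a person can recall.

    true_counts: how often each type actually occurred.
    recall_prob: assumed probability that any single occurrence is recalled.
    """
    recalled = {k: true_counts[k] * recall_prob[k] for k in true_counts}
    total = sum(recalled.values())
    return {k: v / total for k, v in recalled.items()}


# Hypothetical numbers: vivid events are rare but easy to recall.
true_counts = {"vivid": 10, "mundane": 90}
recall_prob = {"vivid": 0.9, "mundane": 0.1}

true_share = true_counts["vivid"] / sum(true_counts.values())
est = estimated_share(true_counts, recall_prob)

print(f"true share of vivid events:    {true_share:.2f}")
print(f"share among recalled examples: {est['vivid']:.2f}")
```

Setting both recall probabilities equal makes the two shares coincide, which shows that the distortion in this model comes entirely from unequal ease of recall.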

Individual differences in cognitive biases

People do appear to have stable individual differences in their susceptibility to decision biases such as

Individual differences in cognitive bias have also been linked to varying levels of cognitive abilities and functions.[67] The Cognitive Reflection Test (CRT) has been used to help understand the connection between cognitive biases and cognitive ability. Results from using the CRT to understand ability have been inconclusive. However, there does seem to be a correlation: those who score higher on the CRT tend to have higher cognitive ability and rational-thinking skills, which in turn helps predict performance on cognitive bias and heuristic tests. Those with higher CRT scores tend to answer more items correctly on different heuristic and cognitive bias tests and tasks.[68]

Age is another individual difference that affects susceptibility to cognitive bias. Older individuals tend to be more susceptible to cognitive biases and to have less cognitive flexibility. However, in experiments in which both young and older adults completed a framing task, older individuals were able to decrease their susceptibility to cognitive biases over repeated trials.[69] Younger adults showed more cognitive flexibility than older adults, and cognitive flexibility is linked to overcoming pre-existing biases.

Criticism

Cognitive bias theory loses sight of any distinction between reason and bias. If every bias can be seen as a reason, and every reason can be seen as a bias, then the distinction is lost.[70]

Criticism of theories of cognitive biases is usually founded on the fact that both sides of a debate often claim that the other's thoughts are subject to human nature and the result of cognitive bias, while claiming that their own point of view is above cognitive bias and the correct way to "overcome" the issue. This rift ties into a more fundamental issue stemming from a lack of consensus in the field, which creates arguments that can be used, non-falsifiably, to validate any contradicting viewpoint.[citation needed]

See also

- Baconian method § Idols of the mind (idola mentis) – Investigative process

- Cognitive bias in animals – Influence of decision making by emotions or irrelevant stimulus in animals

- Cognitive bias mitigation – Reduction of the negative effects of cognitive biases

- Cognitive bias modification – Process of modifying cognitive biases in healthy people, or a growing area of psychological therapies (cognitive bias modification therapy)

- Cognitive dissonance – Stress from contradictory beliefs

- Cognitive distortion – Exaggerated or irrational thought pattern

- Cognitive inertia – Lack of motivation to mentally tackle a problem or issue

- Cognitive psychology – Subdiscipline of psychology

- Cognitive vulnerability – Concept in cognitive psychology

- Critical thinking – Analysis of facts to form a judgment

- Cultural cognition

- Emotional bias – distortion in cognition, judgement and decision making due to emotional factors

- Evolutionary psychology – Branch of psychology

- Expectation bias – Cognitive bias of experimental subject

- Fallacy – Argument that uses faulty reasoning

- False consensus effect – Attributional type of cognitive bias

- Halo effect – Tendency for positive impressions to contaminate other evaluations

- Implicit stereotype – Unreflected, mistaken attributions to and descriptions of social groups

- Jumping to conclusions – Psychological term

- List of cognitive biases – Systematic patterns of deviation from norm or rationality in judgment

- Magical thinking – Belief in the connection of unrelated events

- Prejudice – Attitudes based on preconceived categories

- Presumption of guilt – Presumption that a person is guilty of a crime

- Rationality – Quality of being agreeable to reason

- Systemic bias – Inherent tendency of a process to support particular outcomes

- Theory-ladenness – Degree to which an observation is affected by one's presuppositions

References

- ^ a b c Haselton MG, Nettle D, Andrews PW (2005). "The evolution of cognitive bias". In Buss DM (ed.). The Handbook of Evolutionary Psychology. Hoboken, NJ, US: John Wiley & Sons Inc. pp. 724–746.

- doi:10.1016/0010-0285(72)90016-3. Archived from the original (PDF) on 2019-12-14. Retrieved 2017-04-01.

- ^ Baron J (2007). Thinking and Deciding (4th ed.). New York, NY: Cambridge University Press.

- ^ ISBN 978-0-06-135323-9.

- PMID 8888650.

- ^ S2CID 143452957.

- ^ Bless H, Fiedler K, Strack F (2004). Social cognition: How individuals construct social reality. Hove and New York: Psychology Press.

- PMID 20696611.

- PMID 34934119.

- PMID 8759048.

- ^ S2CID 146617323.

- ISBN 978-0-521-79679-8.

- ^ a b Baumeister RF, Bushman BJ (2010). Social psychology and human nature: International Edition. Belmont, US: Wadsworth. p. 141.

- (PDF) from the original on 2007-09-28.

- ISBN 978-1-4051-1304-5.

- ^ "3.2.4". ISO/IEC TR 24027:2021 Information technology — Artificial intelligence (AI) — Bias in AI systems and AI aided decision making. ISO. 2021. Retrieved 21 June 2023.

- ^ "3.8". ISO/IEC TR 24368:2022 Information technology — Artificial intelligence — Overview of ethical and societal concerns. ISO. 2022. Retrieved 21 June 2023.

- S2CID 14882268.

- S2CID 9703661. Archived from the original (PDF) on 2017-07-06. Retrieved 2017-10-27.

- ^ a b Hoorens V (1993). "Self-enhancement and Superiority Biases in Social Comparison". In Stroebe, W., Hewstone, Miles (eds.). European Review of Social Psychology 4. Wiley.

- PMID 5328883.

- PMID 2034749.

- ISSN 0895-3309.

- (PDF) from the original on 2016-08-03.

- ^ a b Baumeister RF, Bushman BJ (2010). Social psychology and human nature: International Edition. Belmont, USA: Wadsworth.

- .

- S2CID 7350256.

- .

- ^ Thakrar, Monica. "Council Post: Unconscious Bias And Three Ways To Overcome It". Forbes.

- .

- ^ Kahneman, D., Knetsch, J. L. and Thaler, R. H. (1991) Anomalies The Endowment Effect, Loss Aversion, and Status Quo Bias, Journal of Economic Perspectives.

- ^ Dean, M. (2008) 'Status quo bias in large and small choice sets', New York, p. 52. Available at: http://www.yorkshire-exile.co.uk/Dean_SQ.pdf Archived 2010-12-25 at the Wayback Machine.

- ISBN 978-3-540-77553-9, retrieved 2020-11-25

- ^ Lorenz, Kate. (2005). "Do Pretty People Earn More?" http://www.CNN.com.

- ISBN 978-1-905177-07-3.

- S2CID 31561602.

- S2CID 36189809.

- ISSN 1064-0266.

- S2CID 143783638.

- S2CID 154866472.

- .

- ^ Buckingham M, Goodall A. "The Feedback Fallacy". Harvard Business Review. No. March–April 2019.

- .

- S2CID 145752877.

- JSTOR 3033683.

- S2CID 4848978.

- PMID 3700842.

- S2CID 2861872.

- PMID 19222316.

- PMID 20887977.

- PMID 23218805.

- ^ Clark DA, Beck AT (2009). Cognitive Therapy of Anxiety Disorders: Science and Practice. London: Guildford.

- PMID 20034617.

- S2CID 33462708.

- S2CID 32259433.

- PMID 24106918.

- PMID 35967732.

- OCLC 47364085.

- ^ Kahneman, D., Slovic, P., & Tversky, A. (1982). Judgment under uncertainty: Heuristics and biases (1st ed.). Cambridge University Press.

- ISBN 978-0-521-79679-8.

- .

- .

- JSTOR 1884852.

- ^ PMID 22122235.

- .

- S2CID 4848978.

- S2CID 4933030.

- PMID 21541821.

- S2CID 13372385.

- ^ "Kahneman's Fallacies, "Thinking, Fast & Slow"". Wenglinsky Review. 2017-01-23. Retrieved 2023-11-19.

- S2CID 8097667.

- OCLC 352897263.

- OCLC 47009468.

Further reading

- Soprano, Michael; Roitero, Kevin (May 2024). "Cognitive Biases in Fact-Checking and Their Countermeasures: A Review". Information Processing & Management. 61 (3): 103672.

- Eiser JR, van der Pligt J (1988). Attitudes and Decisions. London: Routledge. ISBN 978-0-415-01112-9.

- Fine C (2006). A Mind of its Own: How your brain distorts and deceives. Cambridge, UK: Icon Books. ISBN 1-84046-678-2.

- Gilovich T (1993). How We Know What Isn't So: The Fallibility of Human Reason in Everyday Life. New York: ISBN 0-02-911706-2.

- Haselton MG, Nettle D, Andrews PW (2005). "The evolution of cognitive bias" (PDF). In Buss DM (ed.). Handbook of Evolutionary Psychology. Hoboken: Wiley. pp. 724–746.

- Heuer RJ Jr (1999). "Psychology of Intelligence Analysis. Central Intelligence Agency".

- ISBN 978-0-374-27563-1.

- ISBN 978-0316451390.

- Kida T (2006). Don't Believe Everything You Think: The 6 Basic Mistakes We Make in Thinking. New York: Prometheus. ISBN 978-1-59102-408-8.

- Krueger JI, Funder DC (June 2004). "Towards a balanced social psychology: causes, consequences, and cures for the problem-seeking approach to social behavior and cognition". The Behavioral and Brain Sciences. 27 (3): 313–27, discussion 328–76. S2CID 6260477.

- Nisbett R, Ross L (1980). Human Inference: Strategies and shortcomings of human judgement. Englewood Cliffs, NJ: Prentice-Hall. ISBN 978-0-13-445130-5.

- Piatelli-Palmarini M (1994). Inevitable Illusions: How Mistakes of Reason Rule Our Minds. New York: John Wiley & Sons. ISBN 0-471-15962-X.

- Stanovich K (2009). What Intelligence Tests Miss: The Psychology of Rational Thought. New Haven (CT): Yale University Press. ISBN 978-0-300-12385-2.

- Tavris C, Aronson E (2007). Mistakes Were Made (But Not by Me): Why We Justify Foolish Beliefs, Bad Decisions and Hurtful Acts. Orlando, Florida: ISBN 978-0-15-101098-1.

- Young S (2007). Micromessaging - Why Great Leadership Is Beyond Words. New York: McGraw-Hill. ISBN 978-0-07-146757-5.

External links

Media related to Cognitive biases at Wikimedia Commons

Quotations related to Cognitive bias at Wikiquote

- The Roots of Consciousness: To Err Is human

- Cognitive bias in the financial arena (archived 20 June 2006)

- A Visual Study Guide To Cognitive Biases