Artificial intelligence

Artificial intelligence (AI), in its broadest sense, is

The growing use of artificial intelligence in the 21st century is influencing

The various sub-fields of AI research are centered around particular goals and the use of particular tools. The traditional goals of AI research include

To reach these goals, AI researchers have adapted and integrated a wide range of techniques, including

Goals

The general problem of simulating (or creating) intelligence has been broken into sub-problems. These consist of particular traits or capabilities that researchers expect an intelligent system to display. The traits described below have received the most attention and cover the scope of AI research.[a]

Reasoning and problem solving

Early researchers developed algorithms that imitated step-by-step reasoning that humans use when they solve puzzles or make logical deductions.[16] By the late 1980s and 1990s, methods were developed for dealing with uncertain or incomplete information, employing concepts from probability and economics.[17]

Many of these algorithms are insufficient for solving large reasoning problems because they experience a "combinatorial explosion": they become exponentially slower as the problems grow larger.[18] Even humans rarely use the step-by-step deduction that early AI research could model. They solve most of their problems using fast, intuitive judgments.[19] Accurate and efficient reasoning is an unsolved problem.
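
The following is a minimal sketch of the combinatorial explosion. Exhaustive Boolean search is chosen only as an illustration, and the function names are hypothetical. A naive solver that checks a logical formula by trying every truth assignment must examine up to 2^n candidates for n variables, so each added variable doubles the worst-case work.

```python
from itertools import product
from typing import Callable, Optional, Sequence, Tuple


def brute_force_solve(
    n_vars: int, formula: Callable[[Sequence[bool]], bool]
) -> Tuple[Optional[Tuple[bool, ...]], int]:
    """Try every truth assignment; return (satisfying assignment or None, candidates examined)."""
    examined = 0
    for assignment in product([False, True], repeat=n_vars):
        examined += 1
        if formula(assignment):
            return assignment, examined
    return None, examined


def contradiction(values: Sequence[bool]) -> bool:
    """An unsatisfiable formula, which forces the solver to visit every assignment."""
    return values[0] and not values[0]


for n in (4, 8, 16):
    _, work = brute_force_solve(n, contradiction)
    print(n, work)
```

For the unsatisfiable formula, the number of assignments examined goes 16, 256, 65,536 as n goes 4, 8, 16.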

Knowledge representation

A

Among the most difficult problems in knowledge representation are: the breadth of commonsense knowledge (the set of atomic facts that the average person knows is enormous);[32] and the sub-symbolic form of most commonsense knowledge (much of what people know is not represented as "facts" or "statements" that they could express verbally).[19] There is also the difficulty of knowledge acquisition, the problem of obtaining knowledge for AI applications.[c]

Planning and decision making

An "agent" is anything that perceives and takes actions in the world. A

In classical planning, the agent knows exactly what the effect of any action will be.[38] In most real-world problems, however, the agent may not be certain about the situation it is in (it is "unknown" or "unobservable") and it may not know for certain what will happen after each possible action (it is not "deterministic"). It must choose an action by making a probabilistic guess and then reassess the situation to see if the action worked.[39]
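
One common way to make that probabilistic guess is to pick the action with the highest expected utility. The sketch below assumes the agent already has a model of outcome probabilities and utilities. The actions, numbers and function names are invented for illustration.

```python
from typing import Dict, List, Tuple

# Each action maps to a list of (probability, utility) outcomes (made-up numbers).
Outcomes = List[Tuple[float, float]]


def expected_utility(outcomes: Outcomes) -> float:
    """Probability-weighted average of the utilities of an action's outcomes."""
    return sum(p * u for p, u in outcomes)


def choose_action(model: Dict[str, Outcomes]) -> str:
    """Pick the action whose outcome distribution has the highest expected utility."""
    return max(model, key=lambda action: expected_utility(model[action]))


model = {
    "take_highway": [(0.7, 10.0), (0.3, -20.0)],  # fast unless there is a jam
    "take_backroad": [(1.0, 4.0)],                # slower but predictable
}
print({a: expected_utility(o) for a, o in model.items()})
print(choose_action(model))
```

After acting, the agent would observe what actually happened, update its probabilities and choose again.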

In some problems, the agent's preferences may be uncertain, especially if there are other agents or humans involved. These can be learned (e.g., with

A

Game theory describes rational behavior of multiple interacting agents, and is used in AI programs that make decisions that involve other agents.[43]
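
A standard solution concept from game theory is the Nash equilibrium: a combination of actions in which no agent can do better by changing only its own action. The sketch below finds pure-strategy equilibria of a two-player game by checking every cell of the payoff table. The prisoner's dilemma payoffs are illustrative, and the function name is hypothetical.

```python
from itertools import product
from typing import Dict, List, Tuple

# payoffs[(row_action, col_action)] = (row_player_payoff, col_player_payoff)
Payoffs = Dict[Tuple[str, str], Tuple[float, float]]


def pure_nash_equilibria(payoffs: Payoffs) -> List[Tuple[str, str]]:
    """Return every action pair where neither player gains by deviating alone."""
    rows = sorted({r for r, _ in payoffs})
    cols = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(rows, cols):
        row_pay, col_pay = payoffs[(r, c)]
        row_best = all(payoffs[(r2, c)][0] <= row_pay for r2 in rows)
        col_best = all(payoffs[(r, c2)][1] <= col_pay for c2 in cols)
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria


dilemma = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}
print(pure_nash_equilibria(dilemma))
```

With these payoffs, the only equilibrium is mutual defection, even though mutual cooperation would leave both players better off.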

Learning

Machine learning is the study of programs that can improve their performance on a given task automatically.[44] It has been a part of AI from the beginning.[e]

There are several kinds of machine learning. Unsupervised learning analyzes a stream of data and finds patterns and makes predictions without any other guidance.[47] Supervised learning requires a human to label the input data first, and comes in two main varieties: classification (where the program must learn to predict what category the input belongs in) and regression (where the program must deduce a numeric function based on numeric input).[48]
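
The two supervised varieties can be illustrated with two of the simplest methods, chosen here only for brevity. Classification uses a one-nearest-neighbor classifier, which predicts the category of the closest labelled example. Regression uses an ordinary least-squares line fit. The data and function names are hypothetical.

```python
from typing import Sequence, Tuple


def nearest_neighbor_classify(
    examples: Sequence[Tuple[Tuple[float, ...], str]], query: Tuple[float, ...]
) -> str:
    """Classification: return the label of the closest labelled example."""
    def sq_dist(a: Tuple[float, ...], b: Tuple[float, ...]) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, label = min(examples, key=lambda ex: sq_dist(ex[0], query))
    return label


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Regression: least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


labelled = [((1.0, 1.0), "cat"), ((1.2, 0.8), "cat"), ((5.0, 5.0), "dog")]
print(nearest_neighbor_classify(labelled, (4.5, 4.8)))
print(fit_line([0, 1, 2, 3], [1, 3, 5, 7]))
```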

In

Natural language processing

Early work, based on

Modern deep learning techniques for NLP include

Perception

Machine perception is the ability to use input from sensors (such as cameras, microphones, wireless signals, active lidar, sonar, radar, and tactile sensors) to deduce aspects of the world. Computer vision is the ability to analyze visual input.[61]

The field includes

Social intelligence

Affective computing is an interdisciplinary umbrella that comprises systems that recognize, interpret, process or simulate human feeling, emotion and mood.[67] For example, some virtual assistants are programmed to speak conversationally or even to banter humorously; this makes them appear more sensitive to the emotional dynamics of human interaction, or otherwise facilitates human–computer interaction.

However, this tends to give naïve users an unrealistic conception of the intelligence of existing computer agents.[68] Moderate successes related to affective computing include textual sentiment analysis and, more recently, multimodal sentiment analysis, wherein AI classifies the affects displayed by a videotaped subject.[69]

General intelligence

A machine with artificial general intelligence should be able to solve a wide variety of problems with breadth and versatility similar to human intelligence.[14]

Techniques

AI research uses a wide variety of techniques to accomplish the goals above.[b]

Search and optimization

AI can solve many problems by intelligently searching through many possible solutions.[70] There are two very different kinds of search used in AI: state space search and local search.
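
State space search explores a tree or graph of states. It moves from one state to the next by applying actions until it reaches a goal. The following is a minimal sketch using breadth-first search, one uninformed search strategy. It is applied to a hypothetical water-jug puzzle: measure 4 litres using jugs that hold 3 and 5 litres.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[int, int]  # (litres in jug A, litres in jug B)
CAP_A, CAP_B = 3, 5      # illustrative jug capacities


def successors(state: State) -> List[State]:
    """All states reachable in one move: fill, empty, or pour one jug into the other."""
    a, b = state
    pour_ab = min(a, CAP_B - b)  # how much A can pour into B
    pour_ba = min(b, CAP_A - a)  # how much B can pour into A
    return [
        (CAP_A, b), (a, CAP_B),
        (0, b), (a, 0),
        (a - pour_ab, b + pour_ab),
        (a + pour_ba, b - pour_ba),
    ]


def breadth_first_search(start: State, goal_amount: int) -> Optional[List[State]]:
    """Return a shortest sequence of states ending with goal_amount in a jug, or None."""
    parent: Dict[State, Optional[State]] = {start: None}
    frontier = deque([start])
    while frontier:
        state = frontier.popleft()
        if goal_amount in state:
            path: List[State] = []
            node: Optional[State] = state
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:  # skip states already discovered
                parent[nxt] = state
                frontier.append(nxt)
    return None


print(breadth_first_search((0, 0), 4))
```

Breadth-first search expands states in order of their distance from the start. The first goal state it takes from the queue is therefore reached by a shortest sequence of moves, which is six moves in this puzzle.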

State space search

Local search

Local search uses mathematical optimization to find a solution to a problem. It begins with some form of guess and refines it incrementally.[76]

Gradient descent is a type of local search that optimizes a set of numerical parameters by incrementally adjusting them to minimize a loss function. Variants of gradient descent are commonly used to train neural networks.[77]
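
The following is a minimal sketch of gradient descent on a toy problem: fitting a line y = w * x + b to four points by minimizing mean squared error. The learning rate and step count are illustrative choices, not recommendations, and the function names are hypothetical.

```python
from typing import Callable, List, Sequence


def gradient_descent(
    grad: Callable[[List[float]], List[float]],
    start: Sequence[float],
    learning_rate: float = 0.1,
    steps: int = 200,
) -> List[float]:
    """Repeatedly move each parameter against its partial derivative of the loss."""
    params = list(start)
    for _ in range(steps):
        g = grad(params)
        params = [p - learning_rate * gi for p, gi in zip(params, g)]
    return params


# Points lying exactly on y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 3.0, 5.0, 7.0]


def mse_grad(params: List[float]) -> List[float]:
    """Gradient of the mean squared error of y = w * x + b with respect to (w, b)."""
    w, b = params
    n = len(xs)
    errors = [w * x + b - y for x, y in zip(xs, ys)]
    dw = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
    db = 2.0 / n * sum(errors)
    return [dw, db]


w, b = gradient_descent(mse_grad, [0.0, 0.0], learning_rate=0.05, steps=2000)
print(round(w, 3), round(b, 3))
```

Each step moves the parameters a small distance in the direction that decreases the loss most steeply. With these settings the estimates approach w = 2 and b = 1, the line the points were drawn from.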

Another type of local search is

Distributed search processes can coordinate via

Logic

Formal

Given a problem and a set of premises, problem-solving reduces to searching for a proof tree whose root node is labelled by a solution of the problem and whose leaf nodes are labelled by premises or axioms. In the case of Horn clauses, problem-solving search can be performed by reasoning forwards from the premises or backwards from the problem.[84] In the more general case of the clausal form of first-order logic, resolution is a single, axiom-free rule of inference, in which a problem is solved by proving a contradiction from premises that include the negation of the problem to be solved.[85]
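
Reasoning forwards from the premises over Horn clauses can be sketched as forward chaining. The procedure repeatedly fires any rule whose premises are all known, until nothing new can be derived. The rule base below is hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

# A Horn clause "body_1 and ... and body_n -> head" is stored as (frozenset(body), head).
Rule = Tuple[FrozenSet[str], str]


def forward_chain(rules: List[Rule], facts: Set[str]) -> Set[str]:
    """Derive every atom entailed by the rules, starting from the given premises."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for body, head in rules:
            if head not in known and body <= known:  # all premises already derived
                known.add(head)
                changed = True
    return known


rules = [
    (frozenset({"has_feathers"}), "bird"),
    (frozenset({"bird", "can_swim"}), "water_bird"),
    (frozenset({"water_bird", "quacks"}), "duck"),
]
derived = forward_chain(rules, {"has_feathers", "can_swim", "quacks"})
print("duck" in derived)
```

Reasoning backwards would instead start from the goal ("duck") and search for rules whose conclusion matches it, recursing on their premises.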

Inference in both Horn clause logic and first-order logic is

Fuzzy logic assigns a "degree of truth" between 0 and 1. It can therefore handle propositions that are vague and partially true.[87]
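
The following is a minimal sketch of fuzzy truth values. A membership function maps a measurement to a degree of truth in [0, 1], and the standard (Zadeh) operators combine degrees with minimum, maximum and complement. The 160 cm and 190 cm thresholds are arbitrary assumptions.

```python
def tall(height_cm: float) -> float:
    """Degree to which a height counts as 'tall': 0 below 160 cm, 1 above 190 cm,
    rising linearly in between (an illustrative membership function)."""
    return min(1.0, max(0.0, (height_cm - 160.0) / 30.0))


def fuzzy_and(a: float, b: float) -> float:
    return min(a, b)


def fuzzy_or(a: float, b: float) -> float:
    return max(a, b)


def fuzzy_not(a: float) -> float:
    return 1.0 - a


t = tall(175.0)  # 0.5: "somewhat tall"
print(t, fuzzy_and(t, 0.8), fuzzy_or(t, 0.8), fuzzy_not(t))
```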

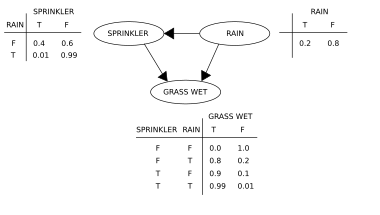

Probabilistic methods for uncertain reasoning

Many problems in AI (including in reasoning, planning, learning, perception, and robotics) require the agent to operate with incomplete or uncertain information. AI researchers have devised a number of tools to solve these problems using methods from

Probabilistic algorithms can also be used for filtering, prediction, smoothing and finding explanations for streams of data, helping

Classifiers and statistical learning methods

The simplest AI applications can be divided into two types: classifiers (e.g., "if shiny then diamond"), on one hand, and controllers (e.g., "if diamond then pick up"), on the other hand.

There are many kinds of classifiers in use. The

Artificial neural networks

An artificial neural network is based on a collection of nodes also known as

Learning algorithms for neural networks use local search to choose the weights that will get the right output for each input during training. The most common training technique is the backpropagation algorithm.[106] Neural networks learn to model complex relationships between inputs and outputs and find patterns in data. In theory, a neural network can learn any function.[107]
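
The following is a minimal sketch of training with backpropagation. It is written in plain Python for readability and is not how production libraries implement it. The XOR dataset, the three hidden neurons, the learning rate and the epoch count are all illustrative assumptions. Backpropagation computes how much each weight contributes to the error, and a gradient-descent step then nudges every weight downhill.

```python
import math
import random
from typing import List, Tuple

# XOR: a classic task that no single-layer network can learn.
DATA: List[Tuple[List[float], float]] = [
    ([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0),
]
HIDDEN = 3  # number of hidden neurons (an arbitrary choice)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed: int = 0):
    """Small random weights for a 2-input, HIDDEN-unit, 1-output network."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(HIDDEN)]
    b1 = [0.0] * HIDDEN
    w2 = [rng.uniform(-1.0, 1.0) for _ in range(HIDDEN)]
    return w1, b1, w2, 0.0


def forward(params, x):
    """Return the hidden activations and the network's output for input x."""
    w1, b1, w2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(w1[j], x)) + b1[j]) for j in range(HIDDEN)]
    y = sigmoid(sum(w * hj for w, hj in zip(w2, h)) + b2)
    return h, y


def loss(params) -> float:
    """Mean squared error over the dataset."""
    return sum((forward(params, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)


def train(params, lr: float = 0.5, epochs: int = 5000):
    """Full-batch gradient descent; gradients come from backpropagation."""
    w1 = [row[:] for row in params[0]]
    b1, w2, b2 = list(params[1]), list(params[2]), params[3]
    n = len(DATA)
    for _ in range(epochs):
        gw1 = [[0.0, 0.0] for _ in range(HIDDEN)]
        gb1, gw2, gb2 = [0.0] * HIDDEN, [0.0] * HIDDEN, 0.0
        for x, t in DATA:
            h, y = forward((w1, b1, w2, b2), x)
            # Error signal at the output neuron's pre-activation.
            d_out = 2.0 * (y - t) / n * y * (1.0 - y)
            gb2 += d_out
            for j in range(HIDDEN):
                gw2[j] += d_out * h[j]
                # Propagate the error back through hidden neuron j.
                d_hid = d_out * w2[j] * h[j] * (1.0 - h[j])
                gb1[j] += d_hid
                for i in range(2):
                    gw1[j][i] += d_hid * x[i]
        # Step every weight a little way downhill.
        b2 -= lr * gb2
        for j in range(HIDDEN):
            w2[j] -= lr * gw2[j]
            b1[j] -= lr * gb1[j]
            for i in range(2):
                w1[j][i] -= lr * gw1[j][i]
    return w1, b1, w2, b2


params = init_params(seed=0)
before = loss(params)
after = loss(train(params))
print(f"mean squared error before: {before:.4f}, after: {after:.4f}")
```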

In

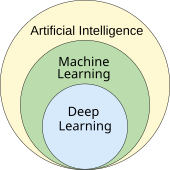

Deep learning

Deep learning[111] uses several layers of neurons between the network's inputs and outputs. The multiple layers can progressively extract higher-level features from the raw input. For example, in

Deep learning has profoundly improved the performance of programs in many important subfields of artificial intelligence, including

GPT

Generative pre-trained transformers (GPT) are large language models that are based on the semantic relationships between words in sentences (natural language processing). Text-based GPT models are pre-trained on a large corpus of text, which can come from the internet. The pre-training consists of predicting the next token (a token is usually a word, subword, or punctuation mark). Throughout this pre-training, GPT models accumulate knowledge about the world, and can then generate human-like text by repeatedly predicting the next token. Typically, a subsequent training phase makes the model more truthful, useful and harmless, usually with a technique called reinforcement learning from human feedback (RLHF). Current GPT models are still prone to generating falsehoods called "hallucinations", although this can be reduced with RLHF and quality data. They are used in chatbots, which allow users to ask a question or request a task in simple text.[124][125]
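
The following is a drastically simplified stand-in for next-token prediction. Instead of a transformer, it counts which word follows which in a tiny corpus (a bigram model). It then generates text by repeatedly appending the most likely next token. Real GPT models use learned neural networks over subword tokens; only the generation loop is analogous. The corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def train_bigram(tokens: List[str]) -> Dict[str, Counter]:
    """Count, for each token, which tokens follow it (a crude stand-in for pre-training)."""
    following: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(tokens, tokens[1:]):
        following[current][nxt] += 1
    return following


def generate(model: Dict[str, Counter], start: str, max_tokens: int) -> List[str]:
    """Greedy decoding: repeatedly append the most frequent next token."""
    output = [start]
    for _ in range(max_tokens):
        candidates = model.get(output[-1])
        if not candidates:  # no known continuation
            break
        output.append(candidates.most_common(1)[0][0])
    return output


corpus = "the cat sat on the mat . the cat sat on the rug .".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", 4)))
```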

Current models and services include: Gemini (formerly Bard), ChatGPT, Grok, Claude, Copilot and LLaMA.[126] Multimodal GPT models can process different types of data (modalities) such as images, videos, sound and text.[127]

Specialized hardware and software

In the late 2010s, graphics processing units (GPUs), increasingly designed with AI-specific enhancements and used with specialized software such as TensorFlow, replaced central processing units (CPUs) as the dominant means of training large-scale (commercial and academic) machine learning models.[128] Historically, specialized languages such as Lisp, Prolog, Python and others had been used.

Applications

Health and medicine

The application of AI in medicine and medical research has the potential to improve patient care and quality of life.[129] Through the lens of the Hippocratic Oath, medical professionals are ethically compelled to use AI if applications can diagnose and treat patients more accurately.

For medical research, AI is an important tool for processing and integrating

Games

Military

Various countries are deploying AI military applications.[143] The main applications enhance command and control, communications, sensors, integration and interoperability.[144] Research is targeting intelligence collection and analysis, logistics, cyber operations, information operations, and semiautonomous and autonomous vehicles.[143] AI technologies enable coordination of sensors and effectors, threat detection and identification, marking of enemy positions, target acquisition, coordination and deconfliction of distributed Joint Fires between networked combat vehicles involving manned and unmanned teams.[144] AI was incorporated into military operations in Iraq and Syria.[143]

In November 2023, US Vice President Kamala Harris disclosed a declaration signed by 31 nations to set guardrails for the military use of AI. The commitments include using legal reviews to ensure the compliance of military AI with international laws, and being cautious and transparent in the development of this technology.[145]

Generative AI

In the early 2020s,

Industry-specific tasks

There are also thousands of successful AI applications used to solve specific problems for specific industries or institutions. In a 2017 survey, one in five companies reported they had incorporated "AI" in some offerings or processes.[149] A few examples are energy storage, medical diagnosis, military logistics, applications that predict the result of judicial decisions, foreign policy, or supply chain management.

In agriculture, AI has helped farmers identify areas that need irrigation, fertilization or pesticide treatment, or where yield could be increased. Agronomists use AI to conduct research and development. AI has been used to predict the ripening time for crops such as tomatoes, monitor soil moisture, operate agricultural robots, conduct predictive analytics, classify the emotions expressed in pig calls, automate greenhouses, detect diseases and pests, and save water.

Artificial intelligence is used in astronomy to analyze the increasing amounts of available data, with applications mainly in "classification, regression, clustering, forecasting, generation, discovery, and the development of new scientific insights". Examples include discovering exoplanets, forecasting solar activity, and distinguishing between signals and instrumental effects in gravitational wave astronomy. AI could also be used for activities in space such as space exploration, including analysis of data from space missions, real-time science decisions by spacecraft, space debris avoidance, and more autonomous operation.

Ethics

AI has potential benefits and potential risks. AI may be able to advance science and find solutions for serious problems:

Risks and harm

Privacy and copyright

Machine-learning algorithms require large amounts of data. The techniques used to acquire this data have raised concerns about privacy, surveillance and copyright.

Technology companies collect a wide range of data from their users, including online activity, geolocation data, video and audio.[153] For example, in order to build

AI developers argue that this is the only way to deliver valuable applications, and have developed several techniques that attempt to preserve privacy while still obtaining the data, such as data aggregation, de-identification and differential privacy.[156] Since 2016, some privacy experts, such as Cynthia Dwork, have begun to view privacy in terms of fairness. Brian Christian wrote that experts have pivoted "from the question of 'what they know' to the question of 'what they're doing with it'."[157]
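
One standard way to achieve differential privacy for a simple statistic is the Laplace mechanism. It adds random noise calibrated to how much any single person can change the result. The sketch below assumes a counting query and hypothetical function names. It samples Laplace noise as the difference of two exponential draws, because Python's `random` module has no Laplace sampler.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person changes a count by at most 1 (its sensitivity),
    so the noise scale is sensitivity / epsilon: smaller epsilon means more noise.
    """
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(42)
print(private_count(130, epsilon=0.5, rng=rng))  # prints 130 plus random noise
```

Any single released value is noisy, but the noise has mean zero. This is why aggregate statistics remain useful while no individual's presence can be confidently inferred.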

Generative AI is often trained on unlicensed copyrighted works, including in domains such as images or computer code; the output is then used under the rationale of "fair use". Experts disagree about how well and under what circumstances this rationale will hold up in courts of law; relevant factors may include "the purpose and character of the use of the copyrighted work" and "the effect upon the potential market for the copyrighted work".[158][159] Website owners who do not wish to have their content scraped can indicate it in a "robots.txt" file.[160] In 2023, leading authors (including John Grisham and Jonathan Franzen) sued AI companies for using their work to train generative AI.[161][162] Another discussed approach is to envision a separate sui generis system of protection for creations generated by AI to ensure fair attribution and compensation for human authors.[163]
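
Python's standard library includes `urllib.robotparser` for reading such files. The sketch below assumes a hypothetical crawler name, `ExampleAIBot`, and a hypothetical site. Note that robots.txt is advisory: it only has an effect if crawlers choose to check and honor it.

```python
from urllib.robotparser import RobotFileParser

# A hypothetical robots.txt: block one AI crawler from the whole site, allow everyone else.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/articles/1"
for agent in ("ExampleAIBot", "SomeBrowser"):
    print(agent, parser.can_fetch(agent, url))
```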

Misinformation

In 2022,

Algorithmic bias and fairness

Machine learning applications will be biased if they learn from biased data.[168] The developers may not be aware that the bias exists.[169] Bias can be introduced by the way

On June 28, 2015, Google Photos's new image labeling feature mistakenly identified Jacky Alcine and a friend as "gorillas" because they were black. The system was trained on a dataset that contained very few images of black people,[173] a problem called "sample size disparity".[174] Google "fixed" this problem by preventing the system from labelling anything as a "gorilla". Eight years later, in 2023, Google Photos still could not identify a gorilla, and neither could similar products from Apple, Facebook, Microsoft and Amazon.[175]

A program can make biased decisions even if the data does not explicitly mention a problematic feature (such as "race" or "gender"). The feature will correlate with other features (like "address", "shopping history" or "first name"), and the program will make the same decisions based on these features as it would on "race" or "gender".[179] Moritz Hardt said "the most robust fact in this research area is that fairness through blindness doesn't work."[180]
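
The following toy example shows why removing the sensitive feature is not enough. The applicants and the decision rule are hypothetical. The rule never reads the "group" field, but in this made-up data zip code is correlated with group, so approval rates still differ by group. A learned model could pick up the same correlation without anyone writing it explicitly.

```python
from typing import Dict, List

applicants: List[Dict[str, str]] = [
    {"group": "A", "zip_code": "11111"},
    {"group": "A", "zip_code": "11111"},
    {"group": "A", "zip_code": "22222"},
    {"group": "B", "zip_code": "22222"},
    {"group": "B", "zip_code": "22222"},
    {"group": "B", "zip_code": "11111"},
]


def blind_decision(applicant: Dict[str, str]) -> bool:
    """A 'group-blind' rule: approve only applicants from zip code 11111."""
    return applicant["zip_code"] == "11111"


def approval_rate(group: str) -> float:
    """Fraction of a group's members approved by the blind rule."""
    members = [a for a in applicants if a["group"] == group]
    return sum(blind_decision(a) for a in members) / len(members)


print(approval_rate("A"), approval_rate("B"))
```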

Criticism of COMPAS highlighted that machine learning models are designed to make "predictions" that are only valid if we assume that the future will resemble the past. If they are trained on data that includes the results of racist decisions in the past, machine learning models must predict that racist decisions will be made in the future. If an application then uses these predictions as recommendations, some of these "recommendations" will likely be racist.[181] Thus, machine learning is not well suited to help make decisions in areas where there is hope that the future will be better than the past. It is necessarily descriptive rather than prescriptive.[l]

Bias and unfairness may go undetected because the developers are overwhelmingly white and male: among AI engineers, about 4% are black and 20% are women.[174]

At its 2022 Conference on Fairness, Accountability, and Transparency (ACM FAccT 2022), the Association for Computing Machinery, in Seoul, South Korea, presented and published findings that recommend that until AI and robotics systems are demonstrated to be free of bias mistakes, they are unsafe, and the use of self-learning neural networks trained on vast, unregulated sources of flawed internet data should be curtailed.[183]

Lack of transparency

Many AI systems are so complex that their designers cannot explain how they reach their decisions.

It is impossible to be certain that a program is operating correctly if no one knows how exactly it works. There have been many cases where a machine learning program passed rigorous tests, but nevertheless learned something different than what the programmers intended. For example, a system that could identify skin diseases better than medical professionals was found to actually have a strong tendency to classify images with a ruler as "cancerous", because pictures of malignancies typically include a ruler to show the scale.[186] Another machine learning system designed to help effectively allocate medical resources was found to classify patients with asthma as being at "low risk" of dying from pneumonia. Having asthma is actually a severe risk factor, but since the patients having asthma would usually get much more medical care, they were relatively unlikely to die according to the training data. The correlation between asthma and low risk of dying from pneumonia was real, but misleading.[187]

People who have been harmed by an algorithm's decision have a right to an explanation.[188] Doctors, for example, are expected to clearly and completely explain to their colleagues the reasoning behind any decision they make. Early drafts of the European Union's General Data Protection Regulation in 2016 included an explicit statement that this right exists.[m] Industry experts noted that this is an unsolved problem with no solution in sight. Regulators argued that nevertheless the harm is real: if the problem has no solution, the tools should not be used.[189]

There are several possible solutions to the transparency problem. SHAP attempts to address it by visualising the contribution of each feature to the output.
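
SHAP is based on Shapley values from cooperative game theory. SHAP itself relies on approximations to stay fast. The sketch below instead computes exact Shapley values by brute force over all feature subsets, which is only feasible for a handful of features. The scoring model is hypothetical. Treating a feature that is "absent" from a coalition as taking a fixed baseline value is one convention among several.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence, Set


def shapley_values(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> List[float]:
    """Exact Shapley value of each feature of x, relative to a baseline input."""
    n = len(x)

    def value(coalition: Set[int]) -> float:
        # Features in the coalition take their real value, the rest their baseline value.
        point = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return model(point)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))  # marginal contribution of i
        phis.append(phi)
    return phis


def model(f: Sequence[float]) -> float:
    """A hypothetical scoring model with one interaction term."""
    return 3.0 * f[0] + 2.0 * f[1] + f[0] * f[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phis = shapley_values(model, x, baseline)
print(phis, sum(phis), model(x) - model(baseline))
```

Feature 1 receives its full additive effect (4.0). The interaction term f[0] * f[2] = 3 is split evenly between features 0 and 2. The contributions sum to the difference between the prediction and the baseline prediction (10.0).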

Bad actors and weaponized AI

Artificial intelligence provides a number of tools that are useful to

A lethal autonomous weapon is a machine that locates, selects and engages human targets without human supervision.

AI tools make it easier for

There are many other ways that AI is expected to help bad actors, some of which cannot be foreseen. For example, machine-learning AI is able to design tens of thousands of toxic molecules in a matter of hours.[202]

Reliance on industry giants

Training AI systems requires an enormous amount of computing power. Usually only

Technological unemployment

Economists have frequently highlighted the risks of redundancies from AI, and speculated about unemployment if there is no adequate social policy for full employment.[204]

In the past, technology has tended to increase rather than reduce total employment, but economists acknowledge that "we're in uncharted territory" with AI.[205] A survey of economists showed disagreement about whether the increasing use of robots and AI will cause a substantial increase in long-term unemployment, but they generally agree that it could be a net benefit if productivity gains are redistributed.[206] Risk estimates vary; for example, in the 2010s, Michael Osborne and Carl Benedikt Frey estimated 47% of U.S. jobs are at "high risk" of potential automation, while an OECD report classified only 9% of U.S. jobs as "high risk".[o][208] The methodology of speculating about future employment levels has been criticised as lacking evidential foundation, and for implying that technology, rather than social policy, creates unemployment, as opposed to redundancies.[204] In April 2023, it was reported that 70% of the jobs for Chinese video game illustrators had been eliminated by generative artificial intelligence.[209][210]

Unlike previous waves of automation, many middle-class jobs may be eliminated by artificial intelligence; The Economist stated in 2015 that "the worry that AI could do to white-collar jobs what steam power did to blue-collar ones during the Industrial Revolution" is "worth taking seriously".[211] Jobs at extreme risk range from paralegals to fast food cooks, while job demand is likely to increase for care-related professions ranging from personal healthcare to the clergy.[212]

From the early days of the development of artificial intelligence, there have been arguments, for example, those put forward by Joseph Weizenbaum, about whether tasks that can be done by computers actually should be done by them, given the difference between computers and humans, and between quantitative calculation and qualitative, value-based judgement.[213]

Existential risk

It has been argued AI will become so powerful that humanity may irreversibly lose control of it. This could, as physicist Stephen Hawking stated, "spell the end of the human race".[214] This scenario has been common in science fiction, when a computer or robot suddenly develops a human-like "self-awareness" (or "sentience" or "consciousness") and becomes a malevolent character.[p] These sci-fi scenarios are misleading in several ways.

First, AI does not require human-like "sentience" to be an existential risk. Modern AI programs are given specific goals and use learning and intelligence to achieve them. Philosopher Nick Bostrom argued that if one gives almost any goal to a sufficiently powerful AI, it may choose to destroy humanity to achieve it (he used the example of a paperclip factory manager).[216] Stuart Russell gives the example of a household robot that tries to find a way to kill its owner to prevent it from being unplugged, reasoning that "you can't fetch the coffee if you're dead."[217] In order to be safe for humanity, a superintelligence would have to be genuinely aligned with humanity's morality and values so that it is "fundamentally on our side".[218]

Second, Yuval Noah Harari argues that AI does not require a robot body or physical control to pose an existential risk. The essential parts of civilization are not physical. Things like ideologies, law, government, money and the economy are made of language; they exist because there are stories that billions of people believe. The current prevalence of misinformation suggests that an AI could use language to convince people to believe anything, even to take actions that are destructive.[219]

The opinions amongst experts and industry insiders are mixed, with sizable fractions both concerned and unconcerned by risk from eventual superintelligent AI.[220] Personalities such as Stephen Hawking, Bill Gates, and Elon Musk have expressed concern about existential risk from AI.[221] AI pioneers including Fei-Fei Li, Geoffrey Hinton, Yoshua Bengio, Cynthia Breazeal, Rana el Kaliouby, Demis Hassabis, Joy Buolamwini, and Sam Altman have expressed concerns about the risks of AI. In 2023, many leading AI experts issued the joint statement that "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war".[222]

Other researchers, however, spoke in favor of a less dystopian view. AI pioneer

Ethical machines and alignment

Friendly AI are machines that have been designed from the beginning to minimize risks and to make choices that benefit humans. Eliezer Yudkowsky, who coined the term, argues that developing friendly AI should be a higher research priority: it may require a large investment and it must be completed before AI becomes an existential risk.[230]

Machines with intelligence have the potential to use their intelligence to make ethical decisions. The field of machine ethics provides machines with ethical principles and procedures for resolving ethical dilemmas.[231] The field of machine ethics is also called computational morality,[231] and was founded at an

Other approaches include Wendell Wallach's "artificial moral agents"[233] and Stuart J. Russell's three principles for developing provably beneficial machines.[234]

Open Source

Active organizations in the AI open-source community include Hugging Face,[235] Google,[236] EleutherAI and Meta.[237] Various AI models, such as Llama 2, Mistral or Stable Diffusion, have been made open-weight,[238][239] meaning that their architecture and trained parameters (the "weights") are publicly available. Open-weight models can be freely fine-tuned, which allows companies to specialize them with their own data and for their own use-case.[240] Open-weight models are useful for research and innovation, but can also be misused. Since they can be fine-tuned, any built-in security measure, such as objecting to harmful requests, can be trained away until it becomes ineffective. Some researchers warn that future AI models may develop dangerous capabilities (such as the potential to drastically facilitate bioterrorism), and that once released on the Internet, they can't be deleted everywhere if needed. They recommend pre-release audits and cost-benefit analyses.[241]

Frameworks

Artificial intelligence projects can have their ethical permissibility tested while designing, developing, and implementing an AI system. Frameworks such as the Care and Act Framework, which contains the SUM values and was developed by the Alan Turing Institute, test projects in four main areas:[242][243]

- RESPECT the dignity of individual people

- CONNECT with other people sincerely, openly and inclusively

- CARE for the wellbeing of everyone

- PROTECT social values, justice and the public interest

Other developments in ethical frameworks include those decided upon during the Asilomar Conference, the Montreal Declaration for Responsible AI, and the IEEE's Ethics of Autonomous Systems initiative, among others;[244] however, these principles are not without criticism, especially with regard to the people chosen to contribute to these frameworks.[245]

Promotion of the wellbeing of the people and communities that these technologies affect requires consideration of the social and ethical implications at all stages of AI system design, development and implementation, and collaboration between job roles such as data scientists, product managers, data engineers, domain experts, and delivery managers.[246]

Regulation

The regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI); it is therefore related to the broader regulation of algorithms.

In a 2022 Ipsos survey, attitudes towards AI varied greatly by country; 78% of Chinese citizens, but only 35% of Americans, agreed that "products and services using AI have more benefits than drawbacks".[249] A 2023 Reuters/Ipsos poll found that 61% of Americans agree, and 22% disagree, that AI poses risks to humanity.[255] In a 2023 Fox News poll, 35% of Americans thought it "very important", and an additional 41% thought it "somewhat important", for the federal government to regulate AI, versus 13% responding "not very important" and 8% responding "not at all important".[256][257]

In November 2023, the first global

History

The study of mechanical or "formal" reasoning began with philosophers and mathematicians in antiquity. The study of logic led directly to

The field of AI research was founded at

Researchers in the 1960s and the 1970s were convinced that their methods would eventually succeed in creating a machine with

In the early 1980s, AI research was revived by the commercial success of

Up to this point, most of AI's funding had gone to projects which used high level

AI gradually restored its reputation in the late 1990s and early 21st century by exploiting formal mathematical methods and by finding specific solutions to specific problems. This "

Deep learning began to dominate industry benchmarks in 2012 and was adopted throughout the field.[11] For many specific tasks, other methods were abandoned.[x] Deep learning's success was based on both hardware improvements (faster computers,[289] graphics processing units, cloud computing[290]) and access to large amounts of data[291] (including curated datasets,[290] such as ImageNet). Deep learning's success led to an enormous increase in interest and funding in AI.[y] The amount of machine learning research (measured by total publications) increased by 50% in the years 2015–2019.[251]

In 2016, issues of

In the late teens and early 2020s,

Philosophy

Defining artificial intelligence

Alan Turing wrote in 1950 "I propose to consider the question 'can machines think'?"[295] He advised changing the question from whether a machine "thinks", to "whether or not it is possible for machinery to show intelligent behaviour".[295] He devised the Turing test, which measures the ability of a machine to simulate human conversation.[265] Since we can only observe the behavior of the machine, it does not matter if it is "actually" thinking or literally has a "mind". Turing notes that we cannot determine these things about other people but "it is usual to have a polite convention that everyone thinks".[296]

McCarthy defines intelligence as "the computational part of the ability to achieve goals in the world."[299] Another AI founder, Marvin Minsky similarly describes it as "the ability to solve hard problems".[300] The leading AI textbook defines it as the study of agents that perceive their environment and take actions that maximize their chances of achieving defined goals.[301] These definitions view intelligence in terms of well-defined problems with well-defined solutions, where both the difficulty of the problem and the performance of the program are direct measures of the "intelligence" of the machine—and no other philosophical discussion is required, or may not even be possible.

Another definition has been adopted by Google,[302] a major practitioner in the field of AI. This definition stipulates the ability of systems to synthesize information as the manifestation of intelligence, similar to the way it is defined in biological intelligence.

Evaluating approaches to AI

No established unifying theory or

Critics argue that these questions may have to be revisited by future generations of AI researchers.

Symbolic AI and its limits

However, the symbolic approach failed on many tasks that humans solve easily, such as learning, recognizing an object or commonsense reasoning.

The issue is not resolved:

Neat vs. scruffy

"Neats" hope that intelligent behavior is described using simple, elegant principles (such as

Soft vs. hard computing

Finding a provably correct or optimal solution is

Narrow vs. general AI

AI researchers are divided as to whether to pursue the goals of artificial general intelligence and superintelligence directly or to solve as many specific problems as possible (narrow AI) in hopes these solutions will lead indirectly to the field's long-term goals.[312][313] General intelligence is difficult to define and difficult to measure, and modern AI has had more verifiable successes by focusing on specific problems with specific solutions. The experimental sub-field of artificial general intelligence studies this area exclusively.

Machine consciousness, sentience and mind

The philosophy of mind does not know whether a machine can have a mind, consciousness and mental states, in the same sense that human beings do. This issue considers the internal experiences of the machine, rather than its external behavior. Mainstream AI research considers this issue irrelevant because it does not affect the goals of the field: to build machines that can solve problems using intelligence. Russell and Norvig add that "[t]he additional project of making a machine conscious in exactly the way humans are is not one that we are equipped to take on."[314] However, the question has become central to the philosophy of mind. It is also typically the central question at issue in artificial intelligence in fiction.

Consciousness

Computationalism and functionalism

Computationalism is the position in the philosophy of mind that the human mind is an information processing system and that thinking is a form of computing. Computationalism argues that the relationship between mind and body is similar or identical to the relationship between software and hardware and thus may be a solution to the mind–body problem. This philosophical position was inspired by the work of AI researchers and cognitive scientists in the 1960s and was originally proposed by philosophers Jerry Fodor and Hilary Putnam.[317]

Philosopher

AI welfare and rights

It is difficult or impossible to reliably evaluate whether an advanced

In 2017, the European Union considered granting "electronic personhood" to some of the most capable AI systems. Like the legal status of companies, it would have conferred rights but also responsibilities.[326] Critics argued in 2018 that granting rights to AI systems would downplay the importance of human rights, and that legislation should focus on user needs rather than speculative futuristic scenarios. They also noted that robots lacked the autonomy to take part in society on their own.[327][328]

Progress in AI increased interest in the topic. Proponents of AI welfare and rights often argue that AI sentience, if it emerges, would be particularly easy to deny. They warn that this may be a

Future

Superintelligence and the singularity

A superintelligence is a hypothetical agent that would possess intelligence far surpassing that of the brightest and most gifted human mind.[313]

If research into

However, technologies cannot improve exponentially indefinitely, and typically follow an

Transhumanism

Robot designer Hans Moravec, cyberneticist Kevin Warwick, and inventor Ray Kurzweil have predicted that humans and machines will merge in the future into cyborgs that are more capable and powerful than either. This idea, called transhumanism, has roots in the writings of Aldous Huxley and Robert Ettinger.[331]

Edward Fredkin argues that "artificial intelligence is the next stage in evolution", an idea first proposed by Samuel Butler's "Darwin among the Machines" as far back as 1863, and expanded upon by George Dyson in his book of the same name in 1998.[332]

In fiction

Thought-capable artificial beings have appeared as storytelling devices since antiquity,[333] and have been a persistent theme in science fiction.[334]

A common

Isaac Asimov introduced the Three Laws of Robotics in many books and stories, most notably the "Multivac" series about a super-intelligent computer of the same name. Asimov's laws are often brought up during lay discussions of machine ethics;[336] while almost all artificial intelligence researchers are familiar with Asimov's laws through popular culture, they generally consider the laws useless for many reasons, one of which is their ambiguity.[337]

Several works use AI to force us to confront the fundamental question of what makes us human, showing us artificial beings that have the ability to feel, and thus to suffer. This appears in Karel Čapek's R.U.R., the films A.I. Artificial Intelligence and Ex Machina, as well as the novel Do Androids Dream of Electric Sheep?, by Philip K. Dick. Dick considers the idea that our understanding of human subjectivity is altered by technology created with artificial intelligence.[338]

See also

- Artificial intelligence detection software – Software to detect AI-generated content

- Behavior selection algorithm – Algorithm that selects actions for intelligent agents

- Business process automation – Technology-enabled automation of complex business processes

- Case-based reasoning – Process of solving new problems based on the solutions of similar past problems

- Computational intelligence – Ability of a computer to learn a specific task from data or experimental observation

- Digital immortality – Hypothetical concept of storing a personality in digital form

- Emergent algorithm – Algorithm exhibiting emergent behavior

- Female gendering of AI technologies – Gender biases in digital technology

- Glossary of artificial intelligence – List of definitions of terms and concepts commonly used in the study of artificial intelligence

- Intelligence amplification – Use of information technology to augment human intelligence

- Mind uploading – Hypothetical process of digitally emulating a brain

- Robotic process automation – Form of business process automation technology

- Weak artificial intelligence – Form of artificial intelligence

- Wetware computer – Computer composed of organic material

Explanatory notes

- ^ a b This list of intelligent traits is based on the topics covered by the major AI textbooks, including: Russell & Norvig (2021), Luger & Stubblefield (2004), Poole, Mackworth & Goebel (1998) and Nilsson (1998)

- ^ a b This list of tools is based on the topics covered by the major AI textbooks, including: Russell & Norvig (2021), Luger & Stubblefield (2004), Poole, Mackworth & Goebel (1998) and Nilsson (1998)

- ^ It is among the reasons that expert systems proved to be inefficient for capturing knowledge.[33][34]

- ^ "Rational agent" is a general term used in economics, philosophy and theoretical artificial intelligence. It can refer to anything that directs its behavior to accomplish goals, such as a person, an animal, a corporation, a nation, or, in the case of AI, a computer program.

- ^ Alan Turing discussed the centrality of learning as early as 1950, in his classic paper "Computing Machinery and Intelligence".[45] In 1956, at the original Dartmouth AI summer conference, Ray Solomonoff wrote a report on unsupervised probabilistic machine learning: "An Inductive Inference Machine".[46]

- ^ See AI winter § Machine translation and the ALPAC report of 1966

- ^ Compared with symbolic logic, formal Bayesian inference is computationally expensive. For inference to be tractable, most observations must be

- latent variables.[97]

- ^

Some forms of deep neural networks (without a specific learning algorithm) were described by:

Alan Turing (1948);[116]

Frank Rosenblatt (1957);[116]

Karl Steinbuch and Roger David Joseph (1961).[117]

Deep or recurrent networks that learned (or used gradient descent) were developed by:

Ernst Ising and Wilhelm Lenz (1925);[118]

Oliver Selfridge (1959);[117]

Alexey Ivakhnenko and Valentin Lapa (1965);[118]

Kaoru Nakano (1977);[119]

Shun-Ichi Amari (1972);[119]

John Joseph Hopfield (1982).[119]

Backpropagation was independently discovered by: Henry J. Kelley (1960);[116] Arthur E. Bryson (1962);[116] Stuart Dreyfus (1962);[116] Arthur E. Bryson and Yu-Chi Ho (1969);[116] Seppo Linnainmaa (1970);[120] Paul Werbos (1974).[116] In fact, backpropagation and gradient descent are straightforward applications of Johann Carl Friedrich Gauss (1795) and Adrien-Marie Legendre (1805).[122]

There are probably many others, yet to be discovered by historians of science.

- ^ Geoffrey Hinton said, of his work on neural networks in the 1990s, "our labeled datasets were thousands of times too small. [And] our computers were millions of times too slow"[123]

- Stanford)[177]

- ^ Moritz Hardt (a director at the Max Planck Institute for Intelligent Systems) argues that machine learning "is fundamentally the wrong tool for a lot of domains, where you're trying to design interventions and mechanisms that change the world."[182]

- ^ When the law was passed in 2018, it still contained a form of this provision.

- land mines as well.[195]

- ^ See table 4; 9% is both the OECD average and the U.S. average.[207]

- ^ Sometimes called a "robopocalypse".[215]

- ^ "Electronic brain" was the term used by the press around this time.[261][262]

- ^ Daniel Crevier wrote, "the conference is generally recognized as the official birthdate of the new science."[266] Russell and Norvig called the conference "the inception of artificial intelligence."[264]

- ^ Russell and Norvig wrote "for the next 20 years the field would be dominated by these people and their students."[267]

- ^ Russell and Norvig wrote "it was astonishing whenever a computer did anything kind of smartish".[268]

- ^ The programs described are .

- ^ Russell and Norvig write: "in almost all cases, these early systems failed on more difficult problems"[272]

- ^

Embodied approaches to AI[279] were championed by Hans Moravec[280] and Rodney Brooks[281] and went by many names: Nouvelle AI.[281] Developmental robotics,[282]

- ^ Matteo Wong wrote in The Atlantic: "Whereas for decades, computer-science fields such as natural-language processing, computer vision, and robotics used extremely different methods, now they all use a programming method called "deep learning." As a result, their code and approaches have become more similar, and their models are easier to integrate into one another."[288]

- ^ Jack Clark wrote in Bloomberg: "After a half-decade of quiet breakthroughs in artificial intelligence, 2015 has been a landmark year. Computers are smarter and learning faster than ever", and noted that the number of software projects that use machine learning at Google increased from a "sporadic usage" in 2012 to more than 2,700 projects in 2015.[290]

- ^ Nils Nilsson wrote in 1983: "Simply put, there is wide disagreement in the field about what AI is all about."[303]

- ^ Daniel Crevier wrote that "time has proven the accuracy and perceptiveness of some of Dreyfus's comments. Had he formulated them less aggressively, constructive actions they suggested might have been taken much earlier."[308]

- ^ Searle presented this definition of "Strong AI" in 1999.[318] Searle's original formulation was "The appropriately programmed computer really is a mind, in the sense that computers given the right programs can be literally said to understand and have other cognitive states."[319] Strong AI is defined similarly by Russell and Norvig: "Strong AI – the assertion that machines that do so are actually thinking (as opposed to simulating thinking)."[320]

References

- ^ a b Russell & Norvig (2021), pp. 1–4.

- ^ Google (2016).

- ^ AI set to exceed human brain power Archived 2008-02-19 at the Wayback Machine CNN.com (July 26, 2006)

- S2CID 158433736.

- ^ ISBN 0-19-825079-7.

- ^ a b

Dartmouth workshop:

- Russell & Norvig (2021, p. 18)

- McCorduck (2004, pp. 111–136)

- NRC (1999, pp. 200–201)

- ^ a b

Successful programs of the 1960s:

- McCorduck (2004, pp. 243–252)

- Crevier (1993, pp. 52–107)

- Moravec (1988, p. 9)

- Russell & Norvig (2021, pp. 19–21)

- ^ a b

Funding initiatives in the early 1980s: Fifth Generation Project (Japan), Alvey (UK), Microelectronics and Computer Technology Corporation (US), Strategic Computing Initiative (US):

- McCorduck (2004, pp. 426–441)

- Crevier (1993, pp. 161–162, 197–203, 211, 240)

- Russell & Norvig (2021, p. 23)

- NRC (1999, pp. 210–211)

- Newquist (1994, pp. 235–248)

- ^ a b

First Mansfield Amendment

- Crevier (1993, pp. 115–117)

- Russell & Norvig (2021, pp. 21–22)

- NRC (1999, pp. 212–213)

- Howe (1994)

- Newquist (1994, pp. 189–201)

- ^ a b

Second AI Winter:

- Russell & Norvig (2021, p. 24)

- McCorduck (2004, pp. 430–435)

- Crevier (1993, pp. 209–210)

- NRC (1999, pp. 214–216)

- Newquist (1994, pp. 301–318)

- ^ a b Deep learning revolution, AlexNet:

- ^ Toews (2023).

- ^ Frank (2023).

- ^ a b c

Artificial general intelligence:

- Russell & Norvig (2021, pp. 32–33, 1020–1021)

- ^ Russell & Norvig (2021, §1.2).

- ^

Problem solving, puzzle solving, game playing and deduction:

- Russell & Norvig (2021, chpt. 3–5)

- Russell & Norvig (2021, chpt. 6) (constraint satisfaction)

- Poole, Mackworth & Goebel (1998, chpt. 2, 3, 7, 9)

- Luger & Stubblefield (2004, chpt. 3, 4, 6, 8)

- Nilsson (1998, chpt. 7–12)

- ^

Uncertain reasoning:

- Russell & Norvig (2021, chpt. 12–18)

- Poole, Mackworth & Goebel (1998, pp. 345–395)

- Luger & Stubblefield (2004, pp. 333–381)

- Nilsson (1998, chpt. 7–12)

- ^ a b c

Intractability and efficiency and the combinatorial explosion:

- Russell & Norvig (2021, p. 21)

- ^ a b c Psychological evidence of the prevalence of sub-symbolic reasoning and knowledge:

- ^

Knowledge representation and knowledge engineering:

- Russell & Norvig (2021, chpt. 10)

- Poole, Mackworth & Goebel (1998, pp. 23–46, 69–81, 169–233, 235–277, 281–298, 319–345)

- Luger & Stubblefield (2004, pp. 227–243)

- Nilsson (1998, chpt. 17.1–17.4, 18)

- ^ Smoliar & Zhang (1994).

- ^ Neumann & Möller (2008).

- ^ Kuperman, Reichley & Bailey (2006).

- ^ McGarry (2005).

- ^ Bertini, Del Bimbo & Torniai (2006).

- ^ Russell & Norvig (2021), p. 272.

- ^

Representing categories and relations: scripts):

- Russell & Norvig (2021, §10.2 & 10.5)

- Poole, Mackworth & Goebel (1998, pp. 174–177)

- Luger & Stubblefield (2004, pp. 248–258)

- Nilsson (1998, chpt. 18.3)

- ^ Representing events and time: Situation calculus, event calculus, fluent calculus (including solving the frame problem):

- Russell & Norvig (2021, §10.3)

- Poole, Mackworth & Goebel (1998, pp. 281–298)

- Nilsson (1998, chpt. 18.2)

- ^

Causal calculus:

- Poole, Mackworth & Goebel (1998, pp. 335–337)

- ^

Representing knowledge about knowledge: Belief calculus, modal logics:

- Russell & Norvig (2021, §10.4)

- Poole, Mackworth & Goebel (1998, pp. 275–277)

- ^ a b

closed world assumption, abduction:

- Russell & Norvig (2021, §10.6)

- Poole, Mackworth & Goebel (1998, pp. 248–256, 323–335)

- Luger & Stubblefield (2004, pp. 335–363)

- Nilsson (1998, ~18.3.3)

- ^ a b

Breadth of commonsense knowledge:

- Lenat & Guha (1989, Introduction)

- Crevier (1993, pp. 113–114)

- Moravec (1988, p. 13)

- Russell & Norvig (2021, pp. 241, 385, 982) (qualification problem)

- ^ Newquist (1994), p. 296.

- ^ Crevier (1993), pp. 204–208.

- ^ Russell & Norvig (2021), p. 528.

- ^

Automated planning:

- Russell & Norvig (2021, chpt. 11).

- ^

Automated decision making, Decision theory:

- Russell & Norvig (2021, chpt. 16–18).

- ^

Classical planning:

- Russell & Norvig (2021, Section 11.2).

- ^

Sensorless or "conformant" planning, contingent planning, replanning (a.k.a. online planning):

- Russell & Norvig (2021, Section 11.5).

- ^

Uncertain preferences:

- Russell & Norvig (2021, Section 16.7)

Inverse reinforcement learning:

- Russell & Norvig (2021, Section 22.6)

- ^

Information value theory:

- Russell & Norvig (2021, Section 16.6).

- ^

Markov decision process:

- Russell & Norvig (2021, chpt. 17).

- ^

Game theory and multi-agent decision theory:

- Russell & Norvig (2021, chpt. 18).

- ^

Learning:

- Russell & Norvig (2021, chpt. 19–22)

- Poole, Mackworth & Goebel (1998, pp. 397–438)

- Luger & Stubblefield (2004, pp. 385–542)

- Nilsson (1998, chpt. 3.3, 10.3, 17.5, 20)

- ^ Turing (1950).

- ^ Solomonoff (1956).

- ^

Unsupervised learning:

- Russell & Norvig (2021, p. 653) (definition)

- Russell & Norvig (2021, pp. 738–740) (cluster analysis)

- Russell & Norvig (2021, pp. 846–860) (word embedding)

- ^ a b

Supervised learning:

- Russell & Norvig (2021, §19.2) (Definition)

- Russell & Norvig (2021, Chpt. 19–20) (Techniques)

- ^

Reinforcement learning:

- Russell & Norvig (2021, chpt. 22)

- Luger & Stubblefield (2004, pp. 442–449)

- ^

Transfer learning:

- Russell & Norvig (2021, p. 281)

- The Economist (2016)

- ^ "Artificial Intelligence (AI): What Is AI and How Does It Work? | Built In". builtin.com. Retrieved 30 October 2023.

- ^

Computational learning theory:

- Russell & Norvig (2021, pp. 672–674)

- Jordan & Mitchell (2015)

- ^

Natural language processing (NLP):

- Russell & Norvig (2021, chpt. 23–24)

- Poole, Mackworth & Goebel (1998, pp. 91–104)

- Luger & Stubblefield (2004, pp. 591–632)

- ^

Subproblems of NLP:

- Russell & Norvig (2021, pp. 849–850)

- ^ Russell & Norvig (2021), pp. 856–858.

- ^ Dickson (2022).

- ^ Modern statistical and deep learning approaches to NLP:

- Russell & Norvig (2021, chpt. 24)

- Cambria & White (2014)

- ^ Vincent (2019).

- ^ Russell & Norvig (2021), pp. 875–878.

- ^ Bushwick (2023).

- ^

Computer vision:

- Russell & Norvig (2021, chpt. 25)

- Nilsson (1998, chpt. 6)

- ^ Russell & Norvig (2021), pp. 849–850.

- ^ Russell & Norvig (2021), pp. 895–899.

- ^ Russell & Norvig (2021), pp. 899–901.

- ^ Russell & Norvig (2021), pp. 931–938.

- ^ MIT AIL (2014).

- ^ Affective computing:

- ^ Waddell (2018).

- ^ Poria et al. (2017).

- ^

Search algorithms:

- Russell & Norvig (2021, Chpt. 3–5)

- Poole, Mackworth & Goebel (1998, pp. 113–163)

- Luger & Stubblefield (2004, pp. 79–164, 193–219)

- Nilsson (1998, chpt. 7–12)

- ^

State space search:

- Russell & Norvig (2021, chpt. 3)

- ^ Russell & Norvig (2021), §11.2.

- breadth first search, depth-first search and general state space search):

- Russell & Norvig (2021, §3.4)

- Poole, Mackworth & Goebel (1998, pp. 113–132)

- Luger & Stubblefield (2004, pp. 79–121)

- Nilsson (1998, chpt. 8)

- ^

Heuristic or informed searches (e.g., greedy best first and A*):

- Russell & Norvig (2021, §3.5)

- Poole, Mackworth & Goebel (1998, pp. 132–147)

- Poole & Mackworth (2017, §3.6)

- Luger & Stubblefield (2004, pp. 133–150)

- ^

Adversarial search:

- Russell & Norvig (2021, chpt. 5)

- ^ Local or "optimization" search:

- Russell & Norvig (2021, chpt. 4)

- ^ Singh Chauhan, Nagesh (18 December 2020). "Optimization Algorithms in Neural Networks". KDnuggets. Retrieved 13 January 2024.

- ^

Evolutionary computation:

- Russell & Norvig (2021, §4.1.2)

- ^ Merkle & Middendorf (2013).

- ^

Logic:

- Russell & Norvig (2021, chpt. 6–9)

- Luger & Stubblefield (2004, pp. 35–77)

- Nilsson (1998, chpt. 13–16)

- ^

Propositional logic:

- Russell & Norvig (2021, chpt. 6)

- Luger & Stubblefield (2004, pp. 45–50)

- Nilsson (1998, chpt. 13)

- ^

First-order logic and features such as equality:

- Russell & Norvig (2021, chpt. 7)

- Poole, Mackworth & Goebel (1998, pp. 268–275)

- Luger & Stubblefield (2004, pp. 50–62)

- Nilsson (1998, chpt. 15)

- ^

Logical inference:

- Russell & Norvig (2021, chpt. 10)

- ^ logical deduction as search:

- Russell & Norvig (2021, §9.3, §9.4)

- Poole, Mackworth & Goebel (1998, pp. ~46–52)

- Luger & Stubblefield (2004, pp. 62–73)

- Nilsson (1998, chpt. 4.2, 7.2)

- ^

Resolution and unification:

- Russell & Norvig (2021, §7.5.2, §9.2, §9.5)

- ^

Fuzzy logic:

- Russell & Norvig (2021, pp. 214, 255, 459)

- Scientific American (1999)

- ^ a b

Stochastic methods for uncertain reasoning:

- Russell & Norvig (2021, Chpt. 12–18 and 20)

- Poole, Mackworth & Goebel (1998, pp. 345–395)

- Luger & Stubblefield (2004, pp. 165–191, 333–381)

- Nilsson (1998, chpt. 19)

- ^

decision theory and decision analysis:

- Russell & Norvig (2021, Chpt. 16–18)

- Poole, Mackworth & Goebel (1998, pp. 381–394)

- ^

Information value theory:

- Russell & Norvig (2021, §16.6)

- decision networks:

- Russell & Norvig (2021, chpt. 17)

- ^ a b c

Stochastic temporal models:

- Russell & Norvig (2021, Chpt. 14)

- Russell & Norvig (2021, §14.3)

- Russell & Norvig (2021, §14.4)

- Russell & Norvig (2021, §14.5)

- ^ Game theory and mechanism design:

- Russell & Norvig (2021, chpt. 18)

- ^

Bayesian networks:

- Russell & Norvig (2021, §12.5–12.6, §13.4–13.5, §14.3–14.5, §16.5, §20.2–20.3)

- Poole, Mackworth & Goebel (1998, pp. 361–381)

- Luger & Stubblefield (2004, pp. ~182–190, ≈363–379)

- Nilsson (1998, chpt. 19.3–4)

- ^ Domingos (2015), chapter 6.

- ^

Bayesian inference algorithm:

- Russell & Norvig (2021, §13.3–13.5)

- Poole, Mackworth & Goebel (1998, pp. 361–381)

- Luger & Stubblefield (2004, pp. ~363–379)

- Nilsson (1998, chpt. 19.4 & 7)

- ^ Domingos (2015), p. 210.

- ^

expectation-maximization algorithm:

- Russell & Norvig (2021, Chpt. 20)

- Poole, Mackworth & Goebel (1998, pp. 424–433)

- Nilsson (1998, chpt. 20)

- Domingos (2015, p. 210)

- decision networks:

- Russell & Norvig (2021, §16.5)

- ^

Statistical learning methods and classifiers:

- Russell & Norvig (2021, chpt. 20)

- ^

Decision trees:

- Russell & Norvig (2021, §19.3)

- Domingos (2015, p. 88)

- ^

support vector machines:

- Russell & Norvig (2021, §19.7)

- Domingos (2015, p. 187) (k-nearest neighbor)

- Domingos (2015, p. 88) (kernel methods)

- ^ Domingos (2015), p. 152.

- ^

Naive Bayes classifier:

- Russell & Norvig (2021, §12.6)

- Domingos (2015, p. 152)

- ^ a b

Neural networks:

- Russell & Norvig (2021, Chpt. 21)

- Domingos (2015, Chapter 4)

- ^

Gradient calculation in computational graphs, backpropagation, automatic differentiation:

- Russell & Norvig (2021, §21.2)

- Luger & Stubblefield (2004, pp. 467–474)

- Nilsson (1998, chpt. 3.3)

- ^

Universal approximation theorem:

- Russell & Norvig (2021, p. 752)

- ^

Feedforward neural networks:

- Russell & Norvig (2021, §21.1)

- ^

Recurrent neural networks:

- Russell & Norvig (2021, §21.6)

- ^

Perceptrons:

- Russell & Norvig (2021, pp. 21, 22, 683)

- ^ a b Deep learning:

- ^

Convolutional neural networks:

- Russell & Norvig (2021, §21.3)

- ^ Deng & Yu (2014), pp. 199–200.

- ^ Ciresan, Meier & Schmidhuber (2012).

- ^ Russell & Norvig (2021), p. 751.

- ^ a b c d e f g Russell & Norvig (2021), p. 785.

- ^ a b Schmidhuber (2022), §5.

- ^ a b Schmidhuber (2022), §6.

- ^ a b c Schmidhuber (2022), §7.

- ^ Schmidhuber (2022), §8.

- ^ Schmidhuber (2022), §2.

- ^ Schmidhuber (2022), §3.

- ^ Quoted in Christian (2020, p. 22)

- ^ Smith (2023).

- ^ "Explained: Generative AI". 9 November 2023.

- ^ "AI Writing and Content Creation Tools". MIT Sloan Teaching & Learning Technologies. Retrieved 25 December 2023.

- ^ Marmouyet (2023).

- ^ Kobielus (2019).

- PMID 31363513.

- ^ ISSN 2673-5067.

- PMID 34265844.

- ^ "AI discovers new class of antibiotics to kill drug-resistant bacteria". 20 December 2023.

- ^ "AI speeds up drug design for Parkinson's ten-fold". Cambridge University. 17 April 2024.

- ^ "Discovery of potent inhibitors of α-synuclein aggregation using structure-based iterative learning". Nature. 17 April 2024.

- ISSN 0028-792X. Retrieved 28 January 2024.

- ^ Anderson, Mark Robert (11 May 2017). "Twenty years on from Deep Blue vs Kasparov: how a chess match started the big data revolution". The Conversation. Retrieved 28 January 2024.

- ISSN 0362-4331. Retrieved 28 January 2024.

- ^ Byford, Sam (27 May 2017). "AlphaGo retires from competitive Go after defeating world number one 3-0". The Verge. Retrieved 28 January 2024.

- PMID 31296650.

- ^ "MuZero: Mastering Go, chess, shogi and Atari without rules". Google DeepMind. 23 December 2020. Retrieved 28 January 2024.

- ISSN 0261-3077. Retrieved 28 January 2024.

- PMID 35140384.

- ^ PD-notice

- ^ .

- ISSN 1059-1028. Retrieved 24 January 2024.

- ^ Marcelline, Marco (27 May 2023). "ChatGPT: Most Americans Know About It, But Few Actually Use the AI Chatbot". PCMag. Retrieved 28 January 2024.

- ISSN 0261-3077. Retrieved 28 January 2024.

- ^ Hurst, Luke (23 May 2023). "How a fake image of a Pentagon explosion shared on Twitter caused a real dip on Wall Street". euronews. Retrieved 28 January 2024.

- ^ Ransbotham, Sam; Kiron, David; Gerbert, Philipp; Reeves, Martin (6 September 2017). "Reshaping Business With Artificial Intelligence". MIT Sloan Management Review. Archived from the original on 13 February 2024.

- ^ Simonite (2016).

- ^ Russell & Norvig (2021), p. 987.

- ^ Laskowski (2023).

- ^ GAO (2022).

- ^ Valinsky (2019).

- ^ Russell & Norvig (2021), p. 991.

- ^ Russell & Norvig (2021), pp. 991–992.

- ^ Christian (2020), p. 63.

- ^ Vincent (2022).

- ^ Kopel, Matthew. "Copyright Services: Fair Use". Cornell University Library. Retrieved 26 April 2024.

- ISSN 1059-1028. Retrieved 26 April 2024.

- ^ Reisner (2023).

- ^ Alter & Harris (2023).

- WIPO.

- ^ Nicas (2018).

- ^ Rainie, Lee; Keeter, Scott; Perrin, Andrew (22 July 2019). "Trust and Distrust in America". Pew Research Center. Archived from the original on 22 February 2024.

- ^ Williams (2023).

- ^ Taylor & Hern (2023).

- ^ a b Rose (2023).

- ^ CNA (2019).

- ^ Goffrey (2008), p. 17.

- ^ Berdahl et al. (2023); Goffrey (2008, p. 17); Rose (2023); Russell & Norvig (2021, p. 995)

- ^

Algorithmic bias and Fairness (machine learning):

- Russell & Norvig (2021, section 27.3.3)

- Christian (2020, Fairness)

- ^ Christian (2020), p. 25.

- ^ a b Russell & Norvig (2021), p. 995.

- ^ Grant & Hill (2023).

- ^ Larson & Angwin (2016).

- ^ Christian (2020), pp. 67–70.

- ^ Christian (2020, pp. 67–70); Russell & Norvig (2021, pp. 993–994)

- ^ Russell & Norvig (2021, p. 995); Lipartito (2011, p. 36); Goodman & Flaxman (2017, p. 6); Christian (2020, pp. 39–40, 65)

- ^ Quoted in Christian (2020, p. 65).

- ^ Russell & Norvig (2021, p. 994); Christian (2020, pp. 40, 80–81)

- ^ Quoted in Christian (2020, p. 80)

- ^ Dockrill (2022).

- ^ Sample (2017).

- ^ "Black Box AI". 16 June 2023.

- ^ Christian (2020), p. 110.

- ^ Christian (2020), pp. 88–91.

- ^ Christian (2020, p. 83); Russell & Norvig (2021, p. 997)

- ^ Christian (2020), p. 91.

- ^ Christian (2020), p. 83.

- ^ Verma (2021).

- ^ Rothman (2020).

- ^ Christian (2020), pp. 105–108.

- ^ Christian (2020), pp. 108–112.

- ^ Russell & Norvig (2021), p. 989.

- ^ a b Russell & Norvig (2021), pp. 987–990.

- ^ Russell & Norvig (2021), p. 988.

- ^ Robitzski (2018); Sainato (2015)

- ^ Harari (2018).

- ^ Buckley, Chris; Mozur, Paul (22 May 2019). "How China Uses High-Tech Surveillance to Subdue Minorities". The New York Times.

- ^ "Security lapse exposed a Chinese smart city surveillance system". 3 May 2019. Archived from the original on 7 March 2021. Retrieved 14 September 2020.

- ^ Urbina et al. (2022).

- ^ Metz, Cade (10 July 2023). "In the Age of A.I., Tech's Little Guys Need Big Friends". New York Times.

- ^ a b E McGaughey, 'Will Robots Automate Your Job Away? Full Employment, Basic Income, and Economic Democracy' (2022) 51(3) Industrial Law Journal 511–559 Archived 27 May 2023 at the Wayback Machine

- ^ Ford & Colvin (2015); McGaughey (2022)

- ^ IGM Chicago (2017).

- ^ Arntz, Gregory & Zierahn (2016), p. 33.

- ^ Lohr (2017); Frey & Osborne (2017); Arntz, Gregory & Zierahn (2016, p. 33)

- ^ Zhou, Viola (11 April 2023). "AI is already taking video game illustrators' jobs in China". Rest of World. Retrieved 17 August 2023.

- ^ Carter, Justin (11 April 2023). "China's game art industry reportedly decimated by growing AI use". Game Developer. Retrieved 17 August 2023.

- ^ Morgenstern (2015).

- ^ Mahdawi (2017); Thompson (2014)

- ^ Tarnoff, Ben (4 August 2023). "Lessons from Eliza". The Guardian Weekly. pp. 34–9.

- ^ Cellan-Jones (2014).

- ^ Russell & Norvig (2021), p. 1001.

- ^ Bostrom (2014).

- ^ Russell (2019).

- ^ Bostrom (2014); Müller & Bostrom (2014); Bostrom (2015).

- ^ Harari (2023).

- ^ Müller & Bostrom (2014).

- ^ Leaders' concerns about the existential risks of AI around 2015:

- ^ Valance (2023).

- ^ Taylor, Josh (7 May 2023). "Rise of artificial intelligence is inevitable but should not be feared, 'father of AI' says". The Guardian. Retrieved 26 May 2023.

- ^ Colton, Emma (7 May 2023). "'Father of AI' says tech fears misplaced: 'You cannot stop it'". Fox News. Retrieved 26 May 2023.

- ^ Jones, Hessie (23 May 2023). "Juergen Schmidhuber, Renowned 'Father Of Modern AI,' Says His Life's Work Won't Lead To Dystopia". Forbes. Retrieved 26 May 2023.

- ^ McMorrow, Ryan (19 December 2023). "Andrew Ng: 'Do we think the world is better off with more or less intelligence?'". Financial Times. Retrieved 30 December 2023.

- ^ Levy, Steven (22 December 2023). "How Not to Be Stupid About AI, With Yann LeCun". Wired. Retrieved 30 December 2023.

- ^ Arguments that AI is not an imminent risk:

- ^ a b Christian (2020), pp. 67, 73.

- ^ Yudkowsky (2008).

- ^ a b Anderson & Anderson (2011).

- ^ AAAI (2014).

- ^ Wallach (2010).

- ^ Russell (2019), p. 173.

- ^ Melton, Monica; Stewart, Ashley. "Hugging Face CEO says he's focused on building a 'sustainable model' for the $4.5 billion open-source-AI startup". Business Insider. Retrieved 14 April 2024.

- ^ Wiggers, Kyle (9 April 2024). "Google open sources tools to support AI model development". TechCrunch. Retrieved 14 April 2024.

- ^ Heaven, Will Douglas (12 May 2023). "The open-source AI boom is built on Big Tech's handouts. How long will it last?". MIT Technology Review. Retrieved 14 April 2024.

- ^ Brodsky, Sascha (19 December 2023). "Mistral AI's New Language Model Aims for Open Source Supremacy". AI Business.

- ^ Edwards, Benj (22 February 2024). "Stability announces Stable Diffusion 3, a next-gen AI image generator". Ars Technica. Retrieved 14 April 2024.

- ^ Marshall, Matt (29 January 2024). "How enterprises are using open source LLMs: 16 examples". VentureBeat.

- ^ Piper, Kelsey (2 February 2024). "Should we make our most powerful AI models open source to all?". Vox. Retrieved 14 April 2024.

- ^ Alan Turing Institute (2019). "Understanding artificial intelligence ethics and safety" (PDF).

- ^ Alan Turing Institute (2023). "AI Ethics and Governance in Practice" (PDF).

- S2CID 198775713.

- S2CID 214766800.

- S2CID 259614124.

- ^ Regulation of AI to mitigate risks:

- ^ Law Library of Congress (U.S.). Global Legal Research Directorate (2019).

- ^ a b Vincent (2023).

- ^ Stanford University (2023).

- ^ a b c d UNESCO (2021).

- ^ Kissinger (2021).

- ^ Altman, Brockman & Sutskever (2023).

- ^ VOA News (25 October 2023). "UN Announces Advisory Body on Artificial Intelligence".

- ^ Edwards (2023).

- ^ Kasperowicz (2023).

- ^ Fox News (2023).

- ^ Milmo, Dan (3 November 2023). "Hope or Horror? The great AI debate dividing its pioneers". The Guardian Weekly. pp. 10–12.

- ^ "The Bletchley Declaration by Countries Attending the AI Safety Summit, 1-2 November 2023". GOV.UK. 1 November 2023. Archived from the original on 1 November 2023. Retrieved 2 November 2023.

- ^ "Countries agree to safe and responsible development of frontier AI in landmark Bletchley Declaration". GOV.UK (Press release). Archived from the original on 1 November 2023. Retrieved 1 November 2023.

- ^ a b Russell & Norvig (2021), p. 9.

- ^ "Google books ngram".

- ^

AI's immediate precursors:

- McCorduck (2004, pp. 51–107)

- Crevier (1993, pp. 27–32)

- Russell & Norvig (2021, pp. 8–17)

- Moravec (1988, p. 3)

- ^ a b Russell & Norvig (2021), p. 17.

- ^ a b

Turing's original publication of "Computing machinery and intelligence"; historical influence and philosophical implications:

- Haugeland (1985, pp. 6–9)

- Crevier (1993, p. 24)

- McCorduck (2004, pp. 70–71)

- Russell & Norvig (2021, pp. 2 and 984)

- ^ Crevier (1993), pp. 47–49.

- ^ Russell & Norvig (2003), p. 17.

- ^ Russell & Norvig (2003), p. 18.

- ^ Newquist (1994), p. 86.

- ^ Simon (1965, p. 96) quoted in Crevier (1993, p. 109)

- ^ Minsky (1967, p. 2) quoted in Crevier (1993, p. 109)

- ^ Russell & Norvig (2021), p. 21.

- ^ Lighthill (1973).

- ^ NRC (1999), pp. 212–213.

- ^ Russell & Norvig (2021), p. 22.

- ^

Expert systems:

- Russell & Norvig (2021, pp. 23, 292)

- Luger & Stubblefield (2004, pp. 227–331)

- Nilsson (1998, chpt. 17.4)

- McCorduck (2004, pp. 327–335, 434–435)

- Crevier (1993, pp. 145–162, 197–203)

- Newquist (1994, pp. 155–183)

- ^ Russell & Norvig (2021), p. 24.

- ^ Nilsson (1998), p. 7.

- ^ McCorduck (2004), pp. 454–462.

- ^ Moravec (1988).

- ^ a b Brooks (1990).

- ^ Developmental robotics:

- ^ Russell & Norvig (2021), p. 25.

- ^

- Crevier (1993, pp. 214–215)

- Russell & Norvig (2021, pp. 24, 26)

- ^ Russell & Norvig (2021), p. 26.

- ^

Formal and narrow methods adopted in the 1990s:

- Russell & Norvig (2021, pp. 24–26)

- McCorduck (2004, pp. 486–487)

- ^

AI widely used in the late 1990s:

- Kurzweil (2005, p. 265)

- NRC (1999, pp. 216–222)

- Newquist (1994, pp. 189–201)

- ^ Wong (2023).

- ^

Moore's Law and AI:

- Russell & Norvig (2021, pp. 14, 27)

- ^ a b c Clark (2015b).

- ^

Big data:

- Russell & Norvig (2021, p. 26)

- ^ Sagar, Ram (3 June 2020). "OpenAI Releases GPT-3, The Largest Model So Far". Analytics India Magazine. Archived from the original on 4 August 2020. Retrieved 15 March 2023.

- ^ DiFeliciantonio (2023).

- ^ Goswami (2023).

- ^ a b Turing (1950), p. 1.

- ^ Turing (1950), under "The Argument from Consciousness".

- ^ Russell & Norvig (2021), p. 3.

- ^ Maker (2006).

- ^ McCarthy (1999).

- ^ Minsky (1986).

- ^ Russell & Norvig (2021), pp. 1–4.

- ^ "What Is Artificial Intelligence (AI)?". Google Cloud Platform. Archived from the original on 31 July 2023. Retrieved 16 October 2023.

- ^ Nilsson (1983), p. 10.

- ^ Haugeland (1985), pp. 112–117.

- ^

Physical symbol system hypothesis:

- Newell & Simon (1976, p. 116)

- McCorduck (2004, p. 153)

- Russell & Norvig (2021, p. 19)

- ^

Moravec's paradox:

- Moravec (1988, pp. 15–16)

- Minsky (1986, p. 29)

- Pinker (2007, pp. 190–191)

- ^

Dreyfus' critique of AI: Historical significance and philosophical implications:

- Crevier (1993, pp. 120–132)

- McCorduck (2004, pp. 211–239)

- Russell & Norvig (2021, pp. 981–982)

- Fearn (2007, Chpt. 3)

- ^ Crevier (1993), p. 125.

- ^ Langley (2011).

- ^ Katz (2012).

- ^

Neats vs. scruffies, the historic debate:

- McCorduck (2004, pp. 421–424, 486–489)

- Crevier (1993, p. 168)

- Nilsson (1983, pp. 10–11)

- Russell & Norvig (2021, p. 24)

- ^ Pennachin & Goertzel (2007).

- ^ a b Roberts (2016).

- ^ Russell & Norvig (2021), p. 986.

- ^ Chalmers (1995).

- ^ Dennett (1991).

- ^ Horst (2005).

- ^ Searle (1999).

- ^ Searle (1980), p. 1.

- ^ Russell & Norvig (2021), p. 9817.

- ^

Searle's Chinese room argument:

- Searle (1980). Searle's original presentation of the thought experiment.

- Searle (1999).

- Russell & Norvig (2021, p. 985)

- McCorduck (2004, pp. 443–445)

- Crevier (1993, pp. 269–271)

- ^ Leith, Sam (7 July 2022). "Nick Bostrom: How can we be certain a machine isn't conscious?". The Spectator. Retrieved 23 February 2024.

- ^ a b c Thomson, Jonny (31 October 2022). "Why don't robots have rights?". Big Think. Retrieved 23 February 2024.

- ^ a b Kateman, Brian (24 July 2023). "AI Should Be Terrified of Humans". TIME. Retrieved 23 February 2024.

- ^ Wong, Jeff (10 July 2023). "What leaders need to know about robot rights". Fast Company.

- ISSN 0261-3077. Retrieved 23 February 2024.

- ^ Dovey, Dana (14 April 2018). "Experts Don't Think Robots Should Have Rights". Newsweek. Retrieved 23 February 2024.

- ^ Cuddy, Alice (13 April 2018). "Robot rights violate human rights, experts warn EU". euronews. Retrieved 23 February 2024.

- ^

The intelligence explosion and technological singularity:

- Russell & Norvig (2021, pp. 1004–1005)

- Omohundro (2008)

- Kurzweil (2005)

- ^ Russell & Norvig (2021), p. 1005.

- ^ Transhumanism:

- ^

AI as evolution:

- Edward Fredkin is quoted in McCorduck (2004, p. 401)

- Butler (1863)

- Dyson (1998)

- ^

AI in myth:

- McCorduck (2004, pp. 4–5)

- ^ McCorduck (2004), pp. 340–400.

- ^ Buttazzo (2001).

- ^ Anderson (2008).

- ^ McCauley (2007).

- ^ Galvan (1997).

AI textbooks

The two most widely used textbooks in 2023 (see the Open Syllabus):

- LCCN 20190474.

- ISBN 978-0070087705.

These were four of the most widely used AI textbooks in 2008:

- ISBN 978-0-8053-4780-7. Archived from the original on 26 July 2020. Retrieved 17 December 2019.

- ISBN 978-1-55860-467-4. Archived from the original on 26 July 2020. Retrieved 18 November 2019.

- ISBN 0-13-790395-2.

- ISBN 978-0-19-510270-3. Archived from the original on 26 July 2020. Retrieved 22 August 2020.

Later editions:

- Poole, David; ISBN 978-1-107-19539-4. Archived from the original on 7 December 2017. Retrieved 6 December 2017.

History of AI

- ISBN 0-465-02997-3.

- ISBN 1-56881-205-1.

- ISBN 978-0-672-30412-5.

Other sources

- AI & ML in Fusion

- AI & ML in Fusion, video lecture Archived 2 July 2023 at the Wayback Machine

- "AlphaGo – Google DeepMind". Archived from the original on 10 March 2016.

- Alter, Alexandra; Harris, Elizabeth A. (20 September 2023), "Franzen, Grisham and Other Prominent Authors Sue OpenAI", The New York Times

- Altman, Sam; Brockman, Greg; Sutskever, Ilya (22 May 2023). "Governance of Superintelligence". openai.com. Archived from the original on 27 May 2023. Retrieved 27 May 2023.

- Anderson, Susan Leigh (2008). "Asimov's "three laws of robotics" and machine metaethics". AI & Society. 22 (4): 477–493. S2CID 1809459.

- Anderson, Michael; Anderson, Susan Leigh (2011). Machine Ethics. Cambridge University Press.

- Arntz, Melanie; Gregory, Terry; Zierahn, Ulrich (2016), "The risk of automation for jobs in OECD countries: A comparative analysis", OECD Social, Employment, and Migration Working Papers 189

- Asada, M.; Hosoda, K.; Kuniyoshi, Y.; Ishiguro, H.; Inui, T.; Yoshikawa, Y.; Ogino, M.; Yoshida, C. (2009). "Cognitive developmental robotics: a survey". IEEE Transactions on Autonomous Mental Development. 1 (1): 12–34. S2CID 10168773.

- "Ask the AI experts: What's driving today's progress in AI?". McKinsey & Company. Archived from the original on 13 April 2018. Retrieved 13 April 2018.

- Barfield, Woodrow; Pagallo, Ugo (2018). Research handbook on the law of artificial intelligence. Cheltenham, UK: Edward Elgar Publishing. OCLC 1039480085.

- Beal, J.; S2CID 32437713

- Berdahl, Carl Thomas; Baker, Lawrence; Mann, Sean; Osoba, Osonde; Girosi, Federico (7 February 2023). "Strategies to Improve the Impact of Artificial Intelligence on Health Equity: Scoping Review". JMIR AI. 2: e42936. Archived from the original on 21 February 2023. Retrieved 21 February 2023.

- Archived from the original on 26 July 2020. Retrieved 22 August 2020.

- Berryhill, Jamie; Heang, Kévin Kok; Clogher, Rob; McBride, Keegan (2019). Hello, World: Artificial Intelligence and its Use in the Public Sector (PDF). Paris: OECD Observatory of Public Sector Innovation. Archived (PDF) from the original on 20 December 2019. Retrieved 9 August 2020.

- Bertini, M; Del Bimbo, A; Torniai, C (2006). "Automatic annotation and semantic retrieval of video sequences using multimedia ontologies". MM '06 Proceedings of the 14th ACM international conference on Multimedia. 14th ACM international conference on Multimedia. Santa Barbara: ACM. pp. 679–682.

- Bostrom, Nick (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

- Bostrom, Nick (2015). "What happens when our computers get smarter than we are?". TED (conference). Archived from the original on 25 July 2020. Retrieved 30 January 2020.

- Brooks, Rodney (10 November 2014). "Artificial intelligence is a tool, not a threat". Archived from the original on 12 November 2014.

- Archived (PDF) from the original on 9 August 2007.

- Buiten, Miriam C (2019). "Towards Intelligent Regulation of Artificial Intelligence". European Journal of Risk Regulation. 10 (1): 41–59. ISSN 1867-299X.

- Bushwick, Sophie (16 March 2023), "What the New GPT-4 AI Can Do", Scientific American

- Butler, Samuel (13 June 1863). "Darwin among the Machines". Letters to the Editor. The Press. Christchurch, New Zealand. Archived from the original on 19 September 2008. Retrieved 16 October 2014 – via Victoria University of Wellington.

- Buttazzo, G. (July 2001). "Artificial consciousness: Utopia or real possibility?". doi:10.1109/2.933500.

- Cambria, Erik; White, Bebo (May 2014). "Jumping NLP Curves: A Review of Natural Language Processing Research [Review Article]". IEEE Computational Intelligence Magazine. 9 (2): 48–57. S2CID 206451986.

- Cellan-Jones, Rory (2 December 2014). "Stephen Hawking warns artificial intelligence could end mankind". BBC News. Archived from the original on 30 October 2015. Retrieved 30 October 2015.